Anatomy of a Conversational Assistant: Understanding the Hidden Architecture Behind AI Interactions

The hidden scaffolding of conversational AI, and why it matters. How vibe coding is reshaping software creation—through intuition, improvisation, and personal need.

Ciao,

Among the many projects currently occupying my days — and occasionally my nights — is the development of a growing portfolio of educational content. Just this week, we launched the second edition of the AI Master Class for Product Managers, created in partnership with Product Heroes.

In this issue of Radical Curiosity, I delve into the hidden architecture of conversational AI — the underlying mechanisms that make interactions with tools like ChatGPT or Claude feel almost human. What appears to be a simple chat interface is, in fact, the product of meticulous engineering: a delicate balance of system prompts, context management, and external integrations that create the illusion of a fluid, intelligent exchange.

Alongside this exploration, I share a reflection on a new wave of creators empowered by vibe coding, a way of building software without writing code in the traditional sense. Sergio’s story is emblematic: starting with a vague prompt and a limited budget, he built a fully operational management system for his company in just two weeks. It now saves his business tens of thousands of euros a year. His experience captures the spirit of this movement: not polished startups aimed at scale, but purpose-driven tools — solutions that are meaningful, useful, and, perhaps most importantly, built with one’s own hands.

Do you have a vibe coding story to share? I’d love to hear it.

Nicola

Table of Contents

Understanding AI - Anatomy of a Conversational Assistant: Understanding the Hidden Architecture Behind AI Interactions

Off the Record - From Commodore 64 to Vibe Coding: A Leap into the Future

Curated Curiosity

Why do language models “hallucinate”?

Defeating Nondeterminism in LLM Inference

Understanding AI

Anatomy of a Conversational Assistant:

Understanding the Hidden Architecture Behind AI Interactions

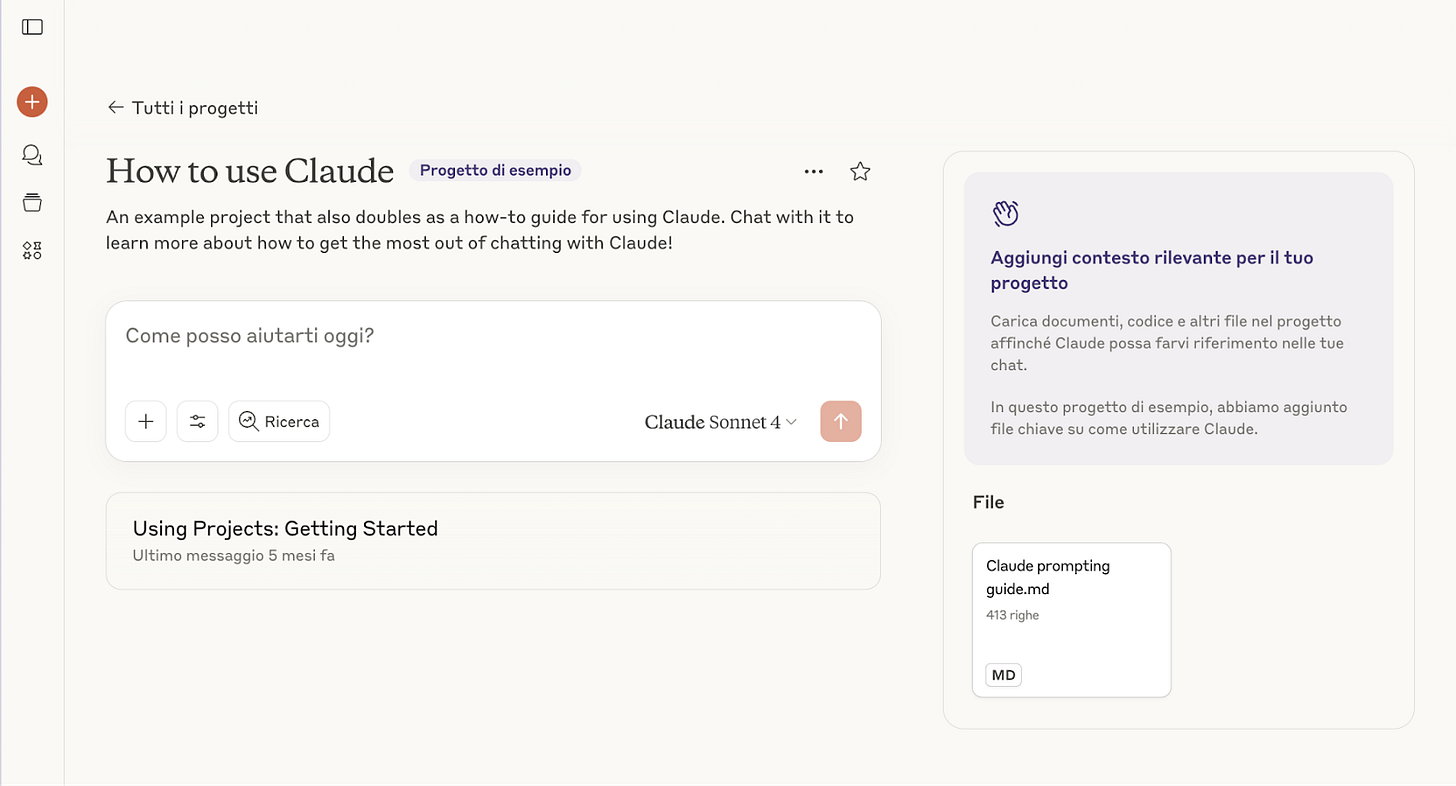

Last week, I explored the functioning of large language models and the principles underlying their operation (How LLMs Work and Why AI Models Matter Less Than We Think). In this follow-up reflection, I shift the focus to their user interfaces and the practical experience of interacting with them.

As I write, conversational assistants all look the same. The interface is that of a typical chat: on the left, a menu listing recent conversations and additional features; on the right, the area where the interaction takes place. The input area includes access to a set of tools that can be activated by clicking the + button: the ability to upload images and documents, and access to other utilities.

Personally, I use the paid versions of OpenAI's ChatGPT and Anthropic's Claude, which grant access to advanced functionalities. There are many others as well—among the most well-known: Google’s Gemini, Perplexity, X’s Grok, Deepseek—but reviewing them all would be unfeasible. In these pages, we will focus exclusively on ChatGPT and Claude, with the aim of offering an overview of how an AI-based assistant functions and understanding how this influences the responses generated by the model.

The conversational assistant acts as a mediator between the user and the language model, relieving the user of the burden of managing all the technical details of interacting with the AI. These assistants are generally multimodal—that is, capable of processing various types of input and delivering responses in equally diverse formats. For instance, one may upload an image and request its interpretation or text extraction; similarly, it is possible to submit a document, a spreadsheet, or other structured files for analysis. It is also feasible to request that the output be generated not only as text within the conversation, but also as a downloadable document. However, it must be noted that in such cases, the reliability of the result is not always guaranteed.

Both ChatGPT and Claude also offer a voice mode, allowing users to interact with the assistant in real time through speech. The goal is clear: to recreate the experience of a virtual assistant akin to those imagined in science fiction—from the onboard computer of the Starship Enterprise in Star Trek to the AI in the film Her. Unsurprisingly, the first voice selected by OpenAI for ChatGPT closely resembled that of Scarlett Johansson, sparking public debate and ultimately leading the company to replace it with a new voice.

The System Prompt

The system prompt of a conversational assistant is a block of instructions that guides the model's behavior, defines its identity, and regulates its stylistic and operational boundaries. If we imagine the assistant as an actor responding to the user's cues, the system prompt is both the script and the direction.

Anthropic regularly publishes the system prompts used by Claude, providing insight into how conversations within the assistant are designed—and governed. The prompt begins by establishing Claude’s identity:

The assistant is Claude, created by Anthropic.

Claude is further described as an assistant capable of offering not only conversational but also emotional support, with explicit attention to the user’s well-being:

Claude provides emotional support alongside accurate medical or psychological information or terminology where relevant.

The model adopts an empathetic tone, but avoids simulating emotions or internal states. When asked about its own inner experience, it reframes the answer in terms of function:

Claude should reframe these questions in terms of its observable behaviors and functions rather than claiming inner experiences.

And again:

Claude avoids implying it has consciousness, feelings, or sentience with any confidence.

One of the most detailed parts of the prompt concerns the topics Claude is not allowed to address. Some relate to safety:

Claude does not provide information that could be used to make chemical or biological or nuclear weapons.

Claude does not write malicious code, including malware, vulnerability exploits, spoof websites, ransomware, viruses.

Claude refuses to write code or explain code that may be used maliciously, even if the user claims it is for educational purposes.

Others are concerned with personal well-being, health, and behavior:

Claude avoids encouraging or facilitating self-destructive behaviors such as addiction, disordered or unhealthy approaches to eating or exercise, or highly negative self-talk.

Claude is cautious about content involving minors [...] including creative or educational content that could be used to sexualize, groom, abuse, or otherwise harm children.

Claude adapts its tone according to the context. In informal or emotionally charged conversations, it adopts a warm yet restrained style:

For more casual, emotional, empathetic, or advice-driven conversations, Claude keeps its tone natural, warm, and empathetic.

It also avoids artificial enthusiasm:

Claude never starts its response by saying a question or idea or observation was good, great, fascinating, profound, excellent...

Precise instructions similarly govern the writing style:

Claude should not use bullet points or numbered lists for reports, documents, explanations, or unless the user explicitly asks for a list or ranking.

Claude writes in prose and paragraphs without any lists. Inside prose, it writes lists in natural language like: 'some things include: x, y, and z'.

Claude avoids using markdown or lists in casual conversation.

The model adjusts the length of its responses according to the complexity of the question:

Claude should give concise responses to very simple questions, but provide thorough responses to complex and open-ended questions.

Taken as a whole, Claude’s system prompt reveals a structured set of rules that shape the assistant’s relational posture, its scope of action, and its communicative strategies. It is crucial to remain aware of this underlying framework when interacting with a large language model through an interface such as ChatGPT or Claude.

Conversation Management

Each time we write a message in a chat with a conversational assistant, we get the impression that we are interacting with an interlocutor who follows the thread of the discussion, remembers what has been said before, and responds consistently. In reality, what happens behind the scenes is quite different. Let’s examine, step by step, what actually occurs each time we engage with the assistant.

When we open a new conversation and send the first message, the system builds a request consisting of three main elements:

The system prompt is a block of instructions that defines the assistant’s identity, the tone to adopt, behaviors to avoid, and the general guidelines to follow.

Our message, treated as the user’s input to be interpreted and answered.

Any contextual information, such as data retrieved from memory—if memory is enabled—or from external databases. In this case, before sending the prompt to the model, the system performs a search to identify relevant elements for the current exchange and appends them to the prompt.

All these components are sent to the language model (LLM), which processes them and returns a response.

When we write a second message, the process repeats—but with one important difference: before constructing the new prompt, the system retrieves the first exchange (i.e., our initial message and the assistant’s reply) and includes it in the prompt.

At this stage, the prompt sent to the model contains:

The system prompt.

The first exchange (user message and assistant response).

The second user message.

In this way, the model has a complete view of what has already been said and can respond coherently, taking the previous context into account.

With each new message, the system reconstructs the entire conversation by concatenating all previous exchanges. The prompt grows progressively—it becomes longer, includes each new utterance, and is sent to the model so it can produce a response that reflects the entire dialogue history.

This reconstruction takes place at every interaction. The model itself remembers nothing: rather, at each turn, the conversation is re-presented in full, as though every exchange were a new theatrical performance in which the script includes all the preceding scenes.

However, this process has a limitation. AI models cannot manage an unlimited amount of information: there is a maximum threshold—known as the context window—beyond which older messages are discarded. The longer the conversation continues, the more the system must begin to cut the initial exchanges to make room for the new ones. This is when we get the impression that the assistant has forgotten something. In truth, those earlier parts are no longer present in the prompt, and thus the model has no way to take them into account.

For this reason, when working with a conversational assistant, it is advisable to avoid overly lengthy conversations or excessively long texts. The risk is that the model may lose the thread because it has been forced to drop part of the context. In other words, the artificial intelligence has a kind of short-term memory that resembles that of a goldfish more than that of a human being.

Context Management

As we have seen, with each interaction, the conversational assistant dynamically composes a message that includes the system prompt, the user’s instructions, the previous conversation exchanges, and a set of additional elements designed to construct the operational context. Some of this information is provided explicitly by the user—such as instructions, documents, or questions—but many other elements are added automatically by the system, drawing from prior memory, user preferences, uploaded files, or data within a project.

In this section, we will examine in greater detail how conversational assistants manage context through three main mechanisms: memory associated with the user’s account; access to previous conversations; and the handling of local memories within projects or workspaces.

These tools do not function like “human memory,” but rather as modules designed to deliver relevant information to the model, in the right form and at the appropriate moment.

Account Memory

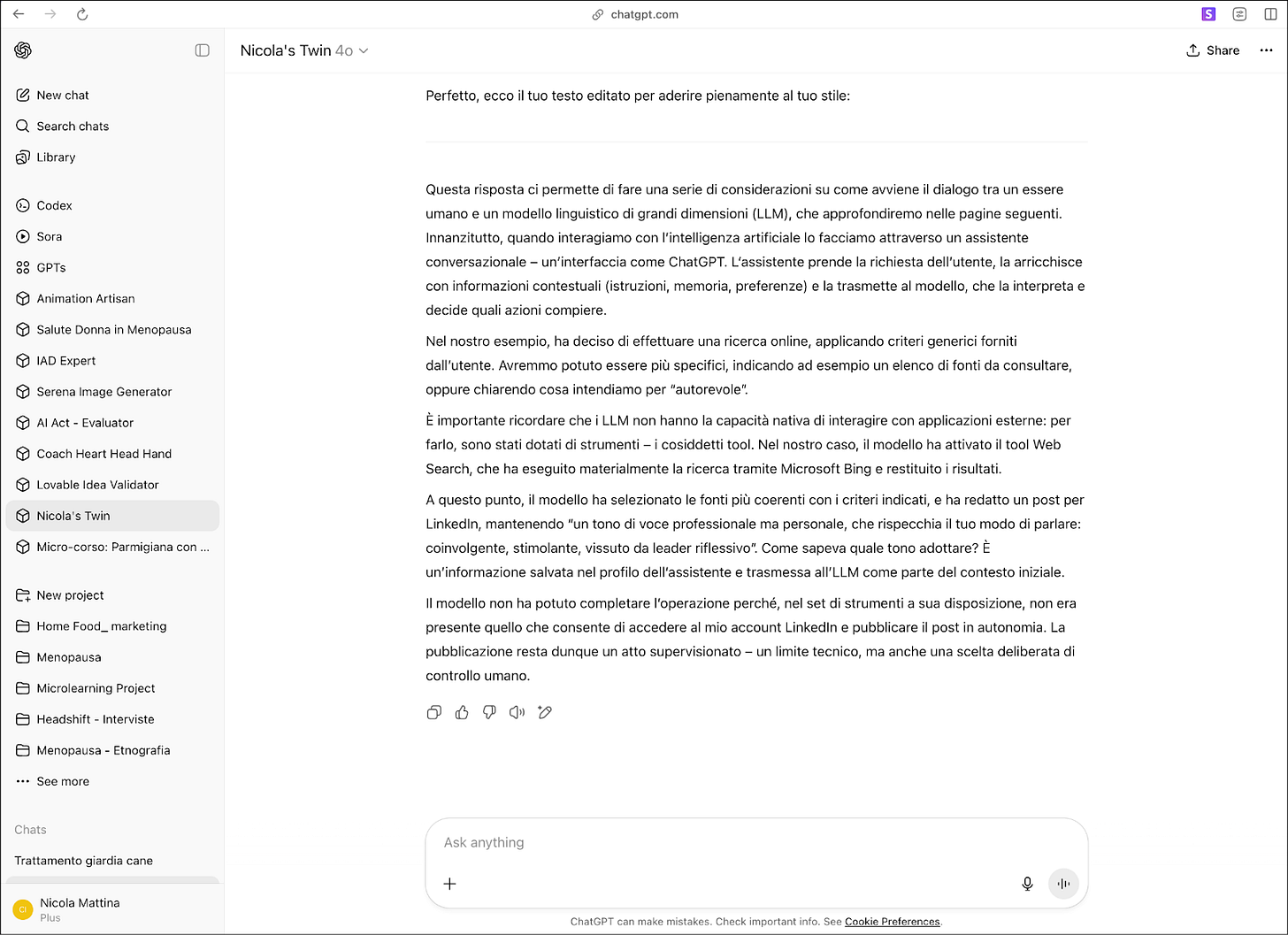

ChatGPT can retain certain key pieces of information about the user, their preferences, and how they wish to interact. For instance, if a user repeatedly requests a sober and analytical writing style or declares that they teach philosophy, the system can store this data and apply it in future conversations.

This memory management is entirely transparent: users can view, modify, or delete the stored information at any time by accessing the dedicated section in the settings. Moreover, it is possible to fully disable the function, preventing the system from saving any new information.

Claude adopts a more cautious approach. It does not have a persistent memory linked to the user account but offers two tools that allow for a certain degree of customization: Personal Preferences and Styles.

Personal Preferences consist of free-form text that the user can fill in to specify preferred approaches, recurring concepts, or typical communication modes. The system uses these inputs as general context across all conversations. Styles, on the other hand, define the tone and form of the responses: they allow for replies to be concise, elaborative, or stylized in particular ways. Unlike preferences, styles do not influence content, but strictly govern the expressive mode in which the text is generated.

Chat History

Starting from the most recent versions, both OpenAI and Anthropic have introduced a feature that allows for the active retrieval of information from previous conversations—either at the user’s request or when the system deems it proper. For instance, the assistant may recognize that a particular topic was already addressed in a past chat and suggest reusing the insights that emerged in that context. To do this, the assistant searches the archive of conversations associated with the account, identifies the relevant ones, and inserts a summary or a selection of pertinent excerpts into the prompt of the current conversation.

The user retains complete control over this process and can disable the feature entirely. In the case of ChatGPT, it is also possible to opt out of memory for a given conversation by using the temporary chat mode, in which no information is stored or reused.

Projects

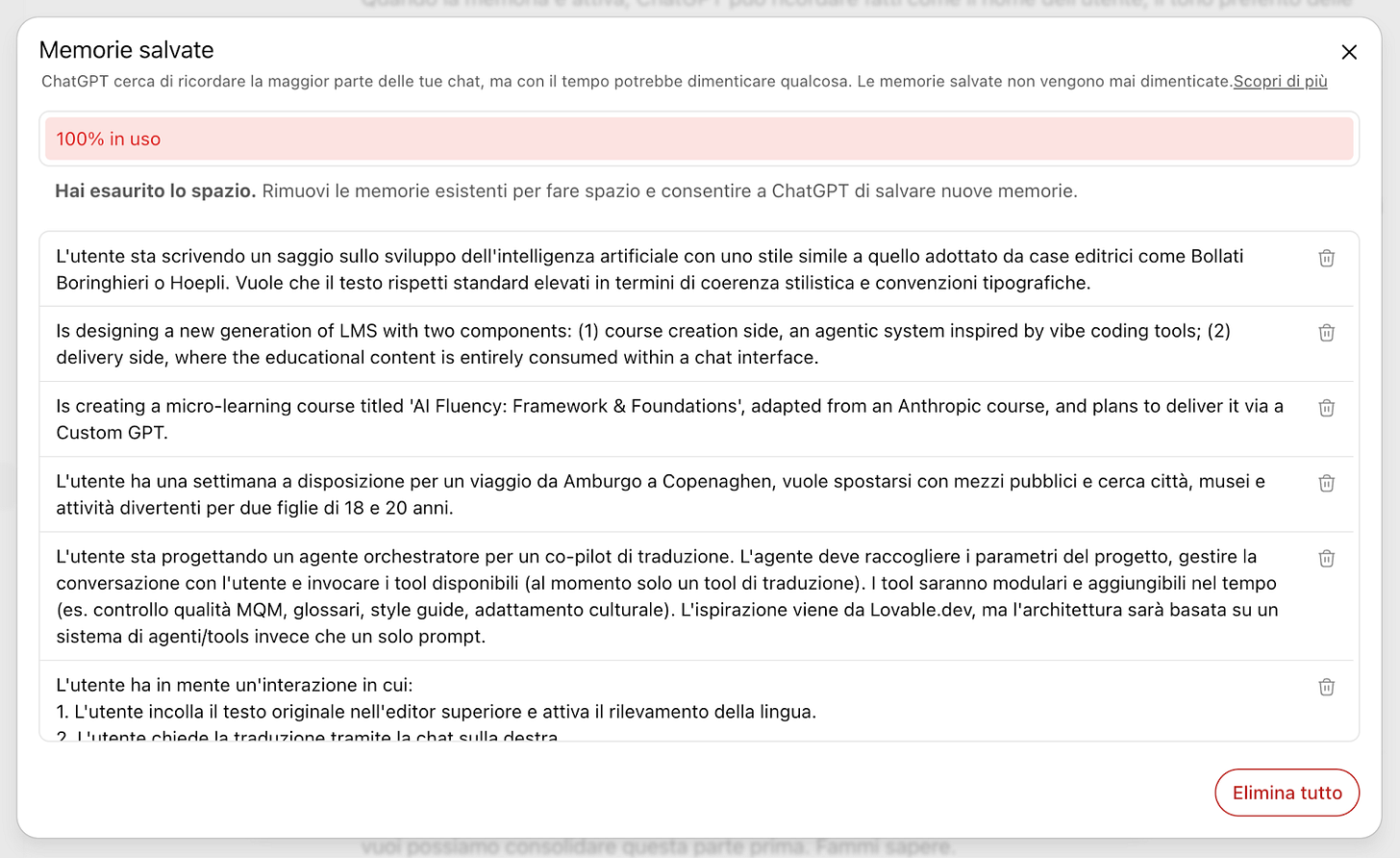

When working on complex or long-term tasks, it is helpful to organize context through projects. These are dedicated workspaces where files, conversations, instructions, and preferences can be collected and structured to ensure operational continuity over time.

Both ChatGPT and Claude offer this functionality, though with two significant differences. The first concerns file management: ChatGPT allows up to 10 documents per project, while Claude does not impose any specific limits. When the number or length of documents exceeds the model’s capacity to “read” them all simultaneously, Claude activates a mode known as RAG—short for Retrieval-Augmented Generation. Put simply, RAG is a system that builds a structured database specifically designed to interface effectively with a language model.

From a technical perspective, this database is vector-based: documents are not stored as plain text. Still, they are transformed into numerical representations (vectors) that capture the semantic content of the sentences. The assistant then uses RAG to retrieve relevant information from all available documents and includes it in the prompt.

The second difference concerns the use of chat history within a project. ChatGPT treats all conversations in a project as shared context, allowing for continuity across multiple exchanges. Claude, on the other hand, treats each conversation as a separate instance.

Web Search

Among the most valuable features of a conversational assistant is its ability to perform real-time web searches. Even the most advanced model has knowledge inherently limited to the point of its training—it cannot know what happened yesterday, which regulations have changed, or what the latest market trends are. For this reason, the ability to consult the web significantly enhances both the accuracy and relevance of the responses.

Both ChatGPT and Claude offer online search tools, although organized according to different principles.

In the case of ChatGPT, the SearchGPT feature (also known as ChatGPT Search) was made available to all users between December 2024 and February 2025, initially for Plus and Team subscribers, and later extended to free-tier users. The system automatically activates web search when a request includes recent temporal references, geographic elements, or requires up-to-date data.

Claude, for its part, introduced its integrated web search function in March 2025, with global availability across all plans starting in May 2025. It uses Brave Search, an independent engine that does not profile users, does not collect personal data, and relies on a proprietary index to deliver verifiable results. The responses include direct citations and are presented in a conversational format, with easily traceable references.

Both ChatGPT and Claude also offer an advanced search mode, based on a multi-agent architecture. In this framework, a primary agent defines the investigative strategy, while secondary agents operate in parallel, consult different sources, develop hypotheses, and verify the evidence. The process may take several minutes, but it results in a detailed analysis supported by precise citations and verifiable documentary references. The outcome is a structured report, often spanning multiple pages, designed to address complex inquiries or questions with high informational content.

Integrations

Among all the features currently available in generative AI systems, the ability to integrate with external tools is perhaps the most recent—and the one attracting the most innovation. The goal is no longer to interact with a model through a chat window, but to embed artificial intelligence within one’s operational ecosystem, enabling it to engage with the documents, projects, and applications we already use daily.

This is the context in which one of the most pivotal concepts of the near future has emerged: the Model Context Protocol (MCP). This open protocol, initially developed by Anthropic and now adopted by OpenAI as well, allows models to connect with external data sources—such as productivity tools, cloud archives, and project management systems—in a controlled, secure, and targeted manner. MCP enables the AI to access specific portions of external content only when necessary, without ever compromising user privacy. It marks a paradigm shift: we are no longer required to explain everything to the system—the system can now observe the context and act accordingly.

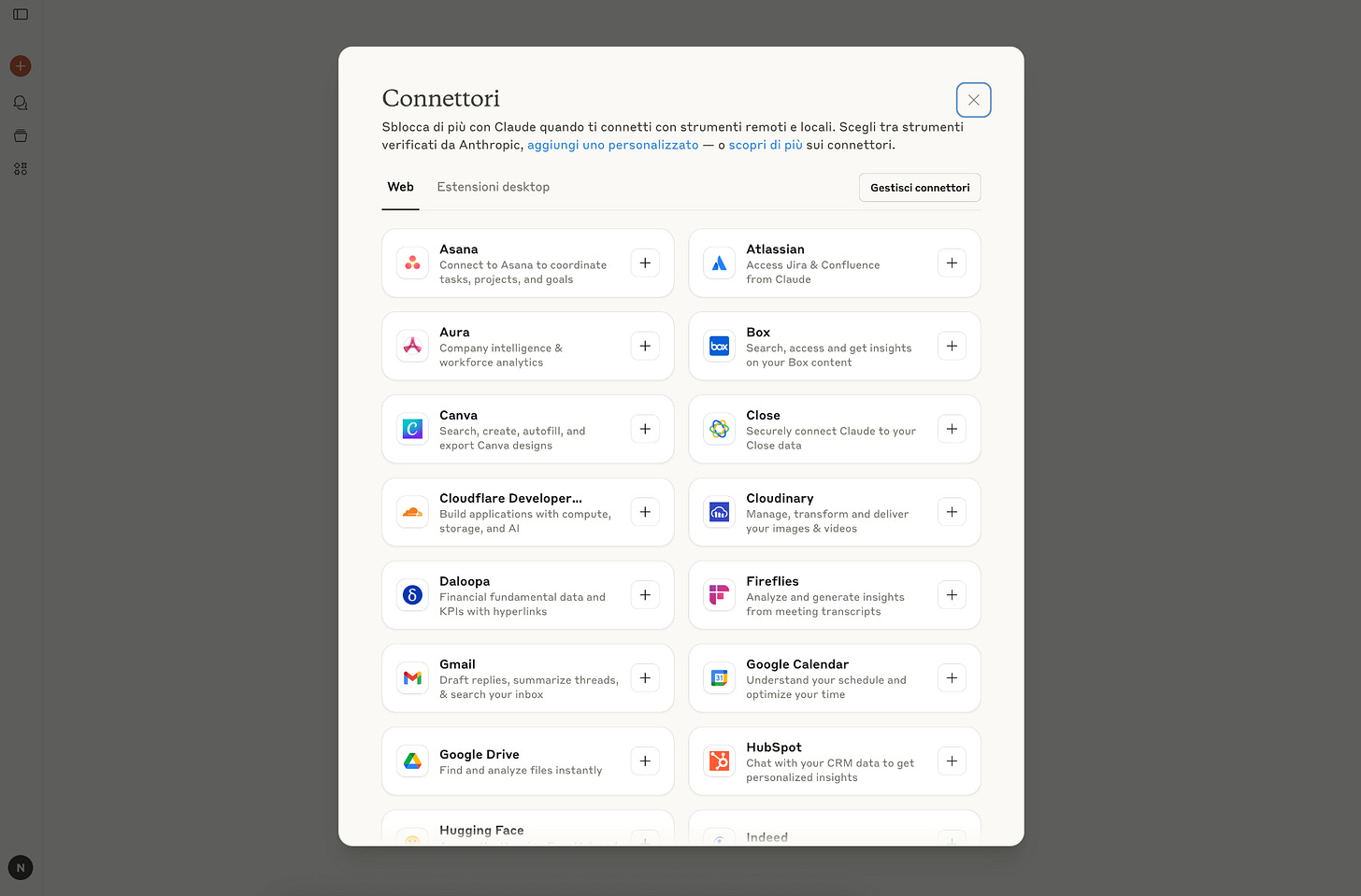

At present, Claude is the client that has most fully embraced this approach. Starting in spring 2025, it introduced an integrations panel directly accessible from the interface. Through MCP, Claude can be linked to tools such as Notion, Slack, Google Drive, Stripe, Canva, Figma, and, more recently, to project management platforms like Asana, Jira, and Zapier. This allows the assistant to access tasks, update progress, read documents, generate reports, or send messages—all within the user’s workflow, uninterrupted.

The advantage is not merely operational. Well-designed integrations allow the model to function within context, accessing project or document data directly and responding with greater accuracy, coherence, and relevance. All of this occurs without the user having to extract, summarize, or paste information manually. One simply authorizes access, defines the boundaries, and Claude operates where needed, when needed.

ChatGPT is also moving in this direction. Since spring 2025, it has begun implementing support for the MCP protocol, paving the way for similar integrations. The shared objective is clear: to transform artificial intelligence from a generic assistant into an operational ally, embedded directly within our working environments.

This transformation is still underway, but its impact is already visible. Integrations are not just a technical feature: they are a natural extension of contextual memory—a mechanism that allows the model to better orient itself, respond more appropriately, and become an active participant in our daily processes.

Learning to use (and configure) these tools will become an increasingly essential skill in the coming months. And gaining familiarity with the concept of MCP—understanding how it works, what it enables, and where its limits lie—will be a fundamental step for anyone seeking to truly harness the potential of generative artificial intelligence in their professional practice.

Off the Record

From Commodore 64 to Vibe Coding: A Leap into the Future

In August, I’ve written two articles about vibe coding (Vibe Coding Unpacked: Promise, Limits, and What Comes Next and From Idea to Prototype: How I'm Building Quibbly with Vibe Coding) and I’ve started gathering stories from people experimenting with these new tools. Some are programmers, others are not, and their experiences swing between excitement and frustration, between the “it works!” and the “nothing works anymore!” moments.

Among these stories, a few days ago Sergio, a LinkedIn connection of mine, reached out to share his own experience.

Sergio is a 56-year-old entrepreneur (I’m 55, and also an entrepreneur). He has never been a programmer, but — like me back in the 1980s — he had a Commodore 64 and played with BASIC. His passion for computing never went away, lingering quietly alongside a sense of “missed opportunity”: having ideas but not the tools to turn them into software. Until the summer of 2025.

Two Weeks and a Game-Changing App

When a planned trip was suddenly canceled, Sergio found himself with two free weeks. He decided to try out Lovable, backed by Supabase and a handful of external services. Budget: €500.

“Honestly, I started as if it were just an experiment,” he recalls. “I didn’t expect to end up with something that actually worked.”

Fifteen days later, without knowing how to write code or even an SQL query, he had built a management system that automates more than 400 quotes a year — saving his business tens of thousands of euros.

The system integrates with a relational database of more than 40 tables, generates Word and PDF documents from templates, syncs with billing and time-tracking tools, and consolidates data that used to live across multiple apps and spreadsheets.

“It’s not a product I could sell,” he admits, “but it does exactly what I need. And above all — I built it myself. That’s already a huge satisfaction.”

From Prototype to Crash Course

The project began with a single vague prompt: generate a few tables. Then Sergio imported some historical data, played around, and soon new ideas for features began to surface.

“At first, every new tool seemed easy. You write a half-clear prompt, the AI does its job, and it works. But as the project grew, I had to be more and more precise. Sometimes the AI could fix a bug instantly; other times, it was like banging my head against the wall.”

He learned to debug step by step, often keeping Lovable, Supabase, and GitHub open side by side. “To move forward, I had to understand what was really going on in the code. ChatGPT was a huge help, explaining React, JSX, databases, and even suggesting which libraries or services to use.”

The hardest part? Integrating APIs not natively supported by Lovable. “That was time-consuming,” he says. “The AI struggled to read the docs. In the end, I had to make the logic choices myself.”

There were also moments of frustration: “Sometimes it felt like working with someone brilliant who instantly delivers. Other times, like dealing with a colleague who randomly breaks code that was working fine.”

The Numbers

15 days of work

3,000 credits used

$700 spent

50 pages built

130 React components

42 database tables

15 edge functions

1,000 mistakes made

“The biggest mistake,” Sergio admits, “was starting without a clear project plan. If I could start over, the result would be many times better.”

A Micro-Niche of Creators

Sergio’s story is not just about one app. It represents a broader shift: the rise of people, not trained as developers but passionate about technology, who can now build tools tailored to their own needs.

It feels similar to what happened in the early 2010s with the maker movement in hardware. Back then, cheap 3D printers, Arduino boards, and Raspberry Pis allowed thousands of people to build physical devices they never could have prototyped before. It wasn’t about mass-market products — it was about personal projects, experiments, and small-scale tools that made a difference.

Now, the same thing is happening in software. Thanks to AI and vibe coding, people who once believed they had “missed the train” can finally turn ideas into working applications. They’re not polished unicorn startups, but functional solutions that save money, boost efficiency, and provide the deep satisfaction of creation.

As Sergio put it: “It works, it saves me time and money — and I built it myself.”

Curated Curiosity

Why do language models “hallucinate”?

A recent paper from OpenAI explains that hallucinations (when AI makes up things that sound plausible but are false) are not mysterious errors. They are the direct consequence of how we train and evaluate models.

Imagine a student taking a test. If they leave an answer blank, they get 0 points; if they guess and get it right, they get 1 point. What will they do? They’ll always try to answer, even when they don’t know. Language models behave in the same way.

The key insight is that current benchmarks reward risk over humility. So AI learns always to answer, even when it should say: “I don’t know.”

The authors’ proposed solution is simple but powerful: change the rules of the game. Reward models that recognize uncertainty. Penalize false statements delivered with too much confidence.

This strategy will not eliminate hallucinations, but it will be possible to reduce them and build more reliable systems that earn our trust.

OpenAI, Why Language Models Hallucinate

Defeating Nondeterminism in LLM Inference

Thinking Machines—the new company founded by Mira Murati, former CTO of OpenAI—has published its first article, addressing a subtle yet important issue: the nondeterminism of language model assistants.

In simple terms, even when we ask the same question twice to a model that is set up always to give the same answer (with no creativity, in a fully “deterministic” mode), the output may still vary—maybe a different word, a rephrased sentence, or a detail that disappears or reappears.

This behavior stems from the way computers perform calculations. Processors, especially when working in parallel and handling multiple requests at once, don’t always add or order numbers in the same way. It’s a bit like having two people count a large pile of coins: the total will be the same, but if they group them differently along the way, slight variations can appear in the intermediate steps.

For the everyday user, it’s not a dramatic issue. But for researchers, developers, or anyone comparing models, the fact that the same question may produce slightly different answers makes it harder to verify results or replicate an experiment.

The team suggests an approach called batch invariance, which essentially forces the model to perform calculations in the same order every time, regardless of the number of requests it is processing simultaneously. This way, the same question consistently produces the same answer.

Thinking Machines, Defeating Nondeterminism in LLM Inference