How I Started Building Agentic Systems

Starting a new series on agentic AI systems. In this first article, I share how I’m experimenting with building a system to streamline LinkedIn engagement.

First, thank you to the over 1,000 subscribers who follow this newsletter 🥳.

This article kicks off a series dedicated to agentic AI systems and their practical applications in business workflows. Over the coming weeks, I’ll share my experiences, insights, and tips for building and embedding these systems to optimize processes and achieve meaningful outcomes.

To begin with, I’ll introduce the concept of agentic AI, explain its value, and share the initial steps I’ve taken to develop a system designed to help me manage my personal brand on LinkedIn.

Building an Agentic System with Relevance.ai

Like many others, I’ve incorporated ChatGPT into my daily routine, relying on it for brainstorming, drafting, and problem-solving. I’ve also delved into prompt engineering, following numerous tutorials to refine my skills. Recently, however, I’ve ventured beyond simple interactions to experiment with agentic AI—a more complex and layered approach to leveraging artificial intelligence.

Transitioning from conversations with ChatGPT to configuring a system of AI agents is a significant leap. While no-code and low-code platforms are making this technology more accessible, the process still requires a foundational understanding of programming logic and, ideally, some familiarity with a programming language such as JavaScript or Python.

Understanding Agentic Systems

Before delving deeper, let’s define what an agentic system looks like. At its core, such a system is a network of interconnected AI agents, each assigned specific tasks and capabilities. For example:

Customer Support Automation: An agent triages incoming customer queries, another retrieves relevant data, and a third generates responses based on predefined policies.

Content Production Pipelines: One agent handles research, another drafts content, and a third polishes it for publication.

Data Analysis Workflows: An agent collects data from various sources, another organizes it into a digestible format, and a third generates insights or visualizations.

These systems showcase how multiple specialized agents can collaborate to achieve complex goals. Numerous examples and tutorials are available on YouTube. For instance, Ben Van Sprundel uses Relevance.ai and Make.com to build an AI Agent Army that assists with day-to-day tasks.

Similarly, in another tutorial, Nate Herk leverages n8n to achieve a comparable goal by creating a personal assistant.

Designing an Agentic System

Creating an agentic system begins with analyzing the process you want to optimize. This means breaking it down into high-level stages.

For instance, I aim to strengthen my positioning as a product leader by showcasing my expertise in cognitive SaaS, my enthusiasm for agentic AI, and my interest in edtech. I plan to use LinkedIn as my primary platform for this purpose. Among the activities suggested by experts, I decided to start by actively commenting on posts from people in my network or creators within the niches I’m interested in to increase visibility and reach.

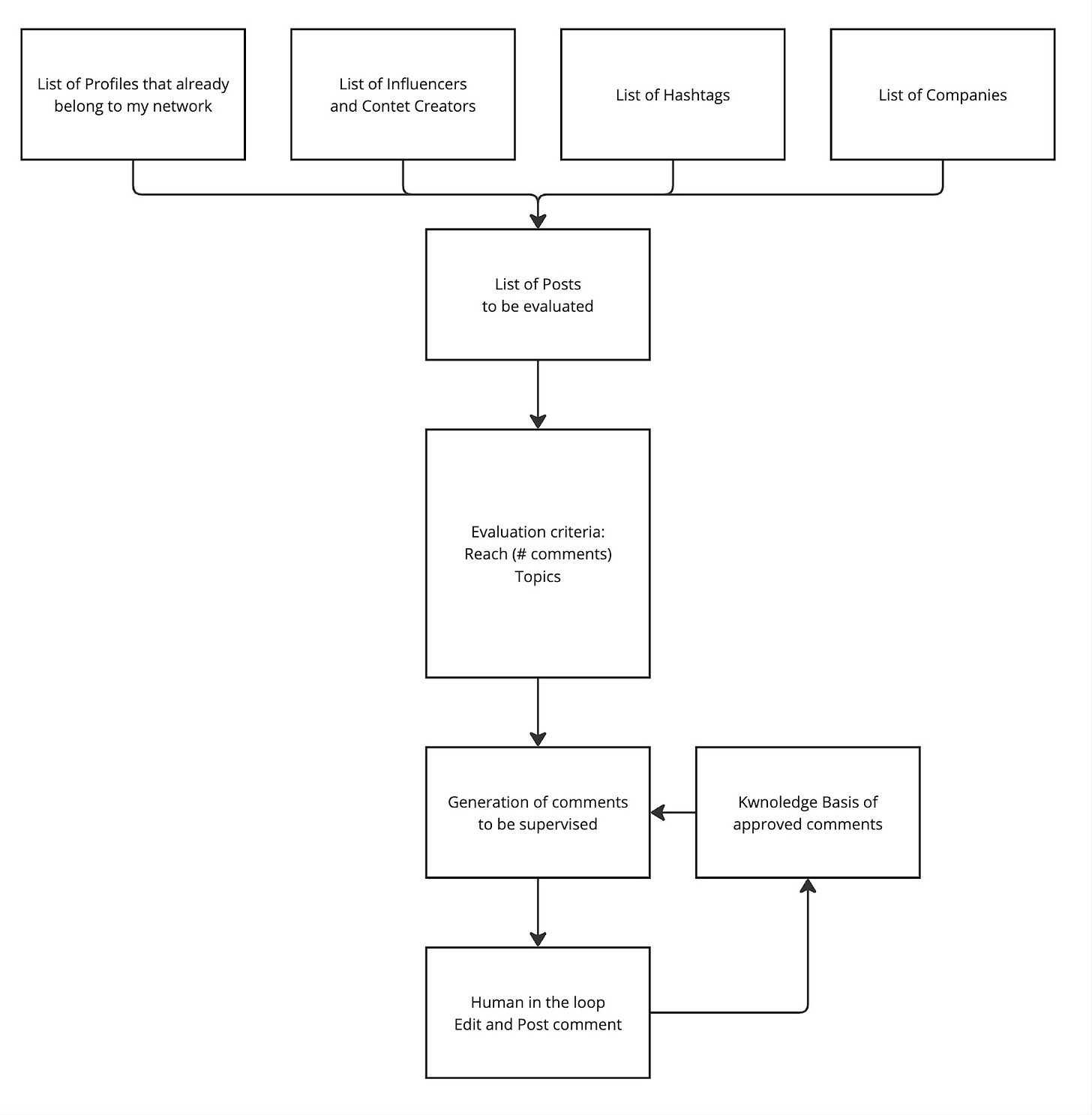

This process flow outlines a streamlined approach to generating, supervising, and posting quality comments, balancing automation with human oversight.

1. Identifying Relevant Content

The first step involves gathering a list of profiles already in your network, as well as influencers, content creators, hashtags, and companies aligned with your interests or goals. These sources combine to create a List of Posts to be Evaluated.
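To make this step concrete, here is a minimal Python sketch of merging posts from several sources into one deduplicated list of posts to be evaluated. The `Post` record and the `build_posts_to_evaluate` function are hypothetical illustrations, not part of Relevance.ai; deduplicating by URL is an assumption, since the same post can surface through both a profile and a hashtag.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Post:
    # Hypothetical minimal post record; real scraped data has more fields.
    url: str
    author: str
    text: str
    num_comments: int


def build_posts_to_evaluate(sources: dict[str, list[Post]]) -> list[Post]:
    """Merge posts from every source, keeping the first occurrence of each URL."""
    seen: set[str] = set()
    merged: list[Post] = []
    for posts in sources.values():
        for post in posts:
            if post.url not in seen:
                seen.add(post.url)
                merged.append(post)
    return merged
```

The source names used as dictionary keys (for example "network" or "hashtags") are only labels here; they could later be used to weight posts by where they were found.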

2. Evaluation and Prioritization

Once posts are identified, they are assessed using evaluation criteria like:

Reach: Measured by the number of comments, indicating a post’s visibility and engagement potential.

Features of the Post: Posts should include insightful content and offer opportunities where a well-thought-out comment can genuinely contribute value to the discussion.
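A rough sketch of this prioritization, assuming each post carries its text and comment count: a word-count threshold stands in for the qualitative "features of the post" check (which in practice an LLM would judge), and the remaining posts are ranked by reach. The 50-word threshold and the `top_n` cutoff are arbitrary, hypothetical defaults.

```python
from dataclasses import dataclass


@dataclass
class Post:
    url: str
    text: str
    num_comments: int


def prioritize(posts: list[Post], min_words: int = 50, top_n: int = 10) -> list[Post]:
    """Keep posts with enough text, then rank them by reach (comment count)."""
    # Word count is a crude, assumed proxy for "insightful content".
    substantial = [p for p in posts if len(p.text.split()) >= min_words]
    # More comments suggest more visibility and engagement potential.
    ranked = sorted(substantial, key=lambda p: p.num_comments, reverse=True)
    return ranked[:top_n]
```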

3. Generating Insightful Comments

The agent will follow explicit rules to ensure comments remain relevant and avoid appearing mechanical—a common pitfall of AI-generated content today. For every post, it will generate three possible comments, each with distinct formats and perspectives, ensuring authenticity and adding meaningful value to the discussion.
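One way to get three distinct drafts is to run the same post through the model once per format, attaching the same rules each time. In this hypothetical sketch, the formats and rules are illustrative placeholders, and `llm` is any function that takes a prompt and returns text, so no particular model API is assumed.

```python
from typing import Callable

# Hypothetical comment formats; the only requirement is that the
# three drafts differ in format and perspective.
COMMENT_FORMATS = [
    "Ask a thoughtful follow-up question.",
    "Share a brief, related personal experience.",
    "Add a complementary data point or counterargument.",
]

# Illustrative rules meant to keep comments from sounding mechanical.
RULES = [
    "Reference a specific point from the post.",
    "Keep it under 60 words.",
    "Avoid generic praise such as 'Great post!'.",
]


def build_prompt(post_text: str, comment_format: str) -> str:
    """Combine role, rules, requested format, and the post into one prompt."""
    rules = "\n".join(f"- {rule}" for rule in RULES)
    return (
        "You are a product leader commenting on a LinkedIn post.\n"
        f"Rules:\n{rules}\n"
        f"Format: {comment_format}\n\n"
        f"Post:\n{post_text}"
    )


def draft_comments(post_text: str, llm: Callable[[str], str]) -> list[str]:
    """Return one draft comment per format; `llm` maps a prompt to text."""
    return [llm(build_prompt(post_text, fmt)) for fmt in COMMENT_FORMATS]
```

Passing the model in as a function keeps the sketch testable with a fake `llm` and lets the same code work with whichever provider a platform exposes.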

4. Human-in-the-Loop Supervision

While automation speeds up the process, human oversight is critical. A reviewer edits and refines each comment to ensure it is authentic, relevant, and adds value before posting. This step maintains quality and prevents comments from feeling generic or robotic.

5. Continuous Improvement

Every approved comment will be stored in a knowledge base. The goal is to teach the LLM my style and tone, enabling it to generate comments that feel authentically human.
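As a minimal sketch of how such a knowledge base could feed back into generation, assuming the most recent approved comments are simply injected into the prompt as few-shot style examples: the `ApprovedComment` and `StyleKnowledgeBase` names are hypothetical, and a real setup might instead retrieve examples by similarity to the current post. Storing the original draft next to the final version also keeps a record of how each comment was edited.

```python
from dataclasses import dataclass, field


@dataclass
class ApprovedComment:
    post_text: str
    draft: str  # what the model generated
    final: str  # the version actually posted after human edits


@dataclass
class StyleKnowledgeBase:
    entries: list[ApprovedComment] = field(default_factory=list)

    def add(self, entry: ApprovedComment) -> None:
        self.entries.append(entry)

    def few_shot_examples(self, k: int = 3) -> str:
        """Format the k most recent approved comments as style examples."""
        # Guard k <= 0 explicitly: entries[-0:] would return the whole list.
        recent = self.entries[-k:] if k > 0 else []
        return "\n\n".join(f"Example comment:\n{e.final}" for e in recent)
```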

Thinking of Agents as Specialized Interns

To transform this high-level workflow into an agentic system, I’ve found it helpful to think of agents not as mere components of a workflow but as a team of highly specialized interns. Each agent is equipped to use one or more tools.

In our example, there will be just one agent, the one I interact with, and it will use three tools.

The first step is to develop and test the tools to ensure they work as intended. Once validated, these tools can then be assigned to one or more agents:

Tool A scrapes posts daily and classifies them based on a set of predefined rules.

Tool B generates draft comments and sends me an email notification when new comments are ready.

Tool C records my edits to the comments, posts them to LinkedIn, and updates the knowledge base accordingly.

Each morning, the agent activates the LinkedIn Scraper Tool (Tool A) and waits for the results before triggering the Comment Generation Tool (Tool B), which emails me draft comments for review. I then edit or approve the drafts, and Tool C posts them to LinkedIn.

I aim to keep this process under 10 minutes daily while continuously improving the system’s accuracy. Over time, I want the system to become fully autonomous.
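The morning sequence can be sketched as a plain function that calls each tool in order. This is a hypothetical illustration, not how Relevance.ai wires agents and tools: the three callables stand in for Tool A, Tool B, and an email step, and Tool C is left out because it only runs after the drafts have been reviewed.

```python
from typing import Callable


def morning_run(
    scrape_and_classify: Callable[[list[str]], list[dict]],
    generate_drafts: Callable[[dict], list[str]],
    send_email: Callable[[str, str], None],
    profile_urls: list[str],
) -> int:
    """Run Tool A, wait for its results, then Tool B; return posts drafted."""
    posts = scrape_and_classify(profile_urls)  # Tool A: returns when done
    if not posts:
        return 0  # nothing worth commenting on today, so no email
    lines = []
    for post in posts:
        drafts = generate_drafts(post)  # Tool B: several drafts per post
        lines.append(post["url"])
        lines.extend(f"  {i}. {d}" for i, d in enumerate(drafts, start=1))
    send_email("Draft LinkedIn comments ready for review", "\n".join(lines))
    return len(posts)
```

Running the steps sequentially mirrors the "wait for the results" requirement: Tool B never starts on a partial scrape.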

Developing a Tool

For my initial experiments, I chose to use Relevance. As I lacked development skills, I collaborated with my engineer buddy Daniele Antonini. I focused on prompt engineering, ensuring the AI performed as expected, while he managed the technical aspects of building the tool. This process required coding skills, particularly for scripting, to ensure everything operated seamlessly.

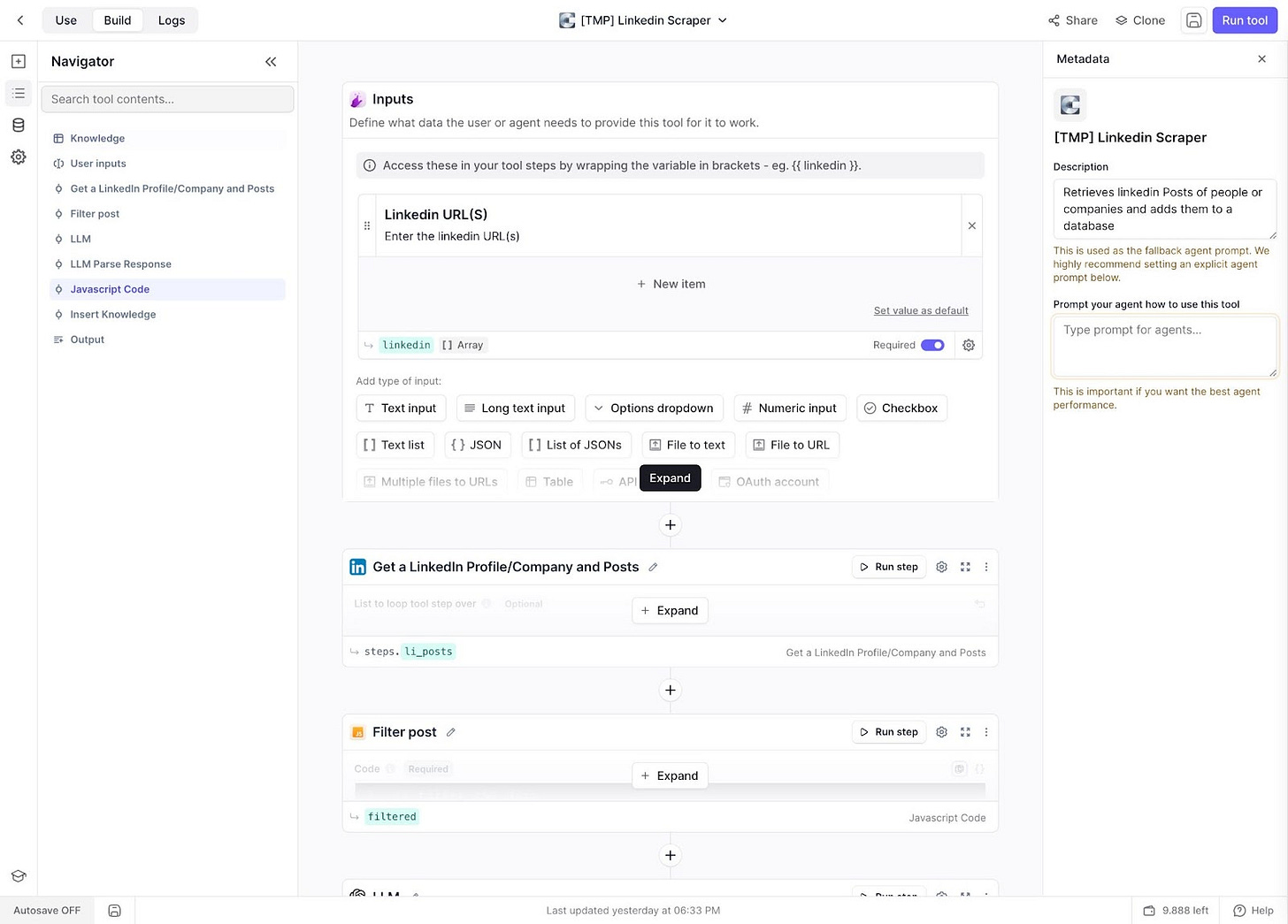

In Relevance, a tool is built as a series of steps. The screenshot above shows the structure and functionality of the scraping tool.

As you can see from the left sidebar, the LinkedIn Scraper Tool is composed of several sequential steps designed to automate the process of fetching, classifying, and storing LinkedIn posts:

User Inputs: This is the starting point where the user or another system process provides a list of URLs. These URLs represent the LinkedIn profiles that need to be analyzed.

Get a LinkedIn Profile/Company and Posts: In this step, the tool leverages Relevance’s integration capabilities to fetch posts based on predefined criteria.

Filtering the Fetched LinkedIn Posts: Once the posts are collected, a filter is applied to refine the results.

LLM: At this stage, ChatGPT or a similar language model classifies the filtered posts. This step involves categorizing the content based on predefined rules or topics, such as identifying posts with high engagement potential or those discussing specific themes.

Additional Filtering and Data Preparation: After classification, the results undergo two further filtering steps to transform the data into a structured format.

Add to Knowledge: Finally, the posts are added to a database. This repository will serve as the starting point for another tool, the Commenter, which retrieves the most relevant posts and generates tailored comments.
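The data-preparation step can be illustrated with a short Python sketch, assuming the LLM step is prompted to return JSON such as `{"score": 8, "reason": "..."}` for each post. The function parses that output, discards malformed or low-scoring rows, and returns structured records ready to be added to the knowledge base. The field names and the `min_score` threshold are assumptions for illustration.

```python
import json
from dataclasses import dataclass


@dataclass
class ClassifiedPost:
    url: str
    score: int
    reason: str


def prepare_for_knowledge(
    raw_llm_outputs: list[tuple[str, str]], min_score: int = 7
) -> list[ClassifiedPost]:
    """Parse each (url, llm_json) pair, dropping malformed or low-scoring rows."""
    rows = []
    for url, raw in raw_llm_outputs:
        try:
            data = json.loads(raw)
            score = int(data["score"])
        except (json.JSONDecodeError, KeyError, TypeError, ValueError):
            continue  # skip outputs the model did not format as expected
        if score >= min_score:
            rows.append(ClassifiedPost(url=url, score=score, reason=str(data.get("reason", ""))))
    return rows
```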

How I Create Prompts

One of the foundational practices in building agentic AI systems is mastering prompt engineering. I follow a structured role-task format, clearly defining the AI’s role and the specific task I want it to perform. For instance, I might begin with, “You are an expert prompt engineer tasked with crafting an optimized prompt for a specific use case.”

Once the initial prompt is drafted, I use an iterative approach in collaboration with ChatGPT. This method, often called iterative prompt engineering or iterative refinement, emphasizes a feedback loop between the user and the AI. The process involves several key steps:

Initial Drafting: Use the role-task format to create a clear and concise prompt that establishes the context and goal.

Feedback and Reflection: Engage the AI in analyzing the prompt’s effectiveness, asking for insights into what works and what could be improved.

Refinement: Modify the prompt based on the AI’s feedback, ensuring alignment with the desired outcome.

Testing and Validation: Evaluate the revised prompt by running scenarios and confirming it produces consistent, high-quality results.
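The same loop can be written down as code, which makes its stopping condition explicit. In this hypothetical sketch, `critique`, `revise`, and `passes_tests` are placeholders for the feedback, refinement, and validation steps (each could be a ChatGPT call or a manual check), and `max_rounds` caps how long the loop runs.

```python
from typing import Callable


def refine_prompt(
    prompt: str,
    critique: Callable[[str], str],
    revise: Callable[[str, str], str],
    passes_tests: Callable[[str], bool],
    max_rounds: int = 5,
) -> tuple[str, int]:
    """Critique and revise a prompt until it passes the test scenarios.

    Returns the final prompt and the number of revision rounds used.
    """
    for round_num in range(max_rounds):
        if passes_tests(prompt):  # Testing and Validation
            return prompt, round_num
        feedback = critique(prompt)  # Feedback and Reflection
        prompt = revise(prompt, feedback)  # Refinement
    return prompt, max_rounds
```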

The LinkedIn Scraper Tool identifies posts worth commenting on. The criteria I’ve chosen ensure that the posts selected are rich in content and provide sufficient starting material for meaningful interaction. Specifically, posts should contain:

Enough Content: Posts with substantial text are prioritized, as they offer a more explicit context for generating comments.

Data: The presence of data or statistics adds credibility and depth, making it easier to craft insightful responses.

Opinions and Insights: Posts that share unique viewpoints or insights foster meaningful discussions and provide ample opportunities to contribute value.

Posts meeting all these criteria are worth commenting on because they offer enough material to generate meaningful and contextually relevant comments. The following is the prompt that I’m currently testing:

Evaluate the LinkedIn post based on the following criteria, assigning up to 3 points per criterion:

Thought-Provoking or Data-Driven Content

- 0 points: The post is generic, lacks depth, or is purely promotional.

- 1 point: The post contains some interesting ideas or insights but lacks originality or depth.

- 2 points: The post presents new insights, trends, or data but could benefit from more specificity or broader context.

- 3 points: The post offers unique insights, actionable trends, or detailed data that provoke thought or inspire discussion.

Strategic Hashtags

- 0 points: No relevant hashtags, or hashtags are irrelevant to your interests.

- 1 point: The post has 1–2 relevant hashtags, but they are not highly aligned with your niche.

- 2 points: The post uses 2–3 hashtags that are moderately aligned with your professional focus.

- 3 points: The post includes 3 or more relevant hashtags directly tied to your areas of interest or expertise.

Authenticity and Depth

- 0 points: The post feels superficial, overly polished, or lacks genuine depth.

- 1 point: The post shows some level of authenticity or depth but lacks a strong narrative or personal perspective.

- 2 points: The post is moderately authentic and shares valuable perspectives, but could be more engaging or detailed.

- 3 points: The post is highly authentic, shares meaningful insights or experiences, and encourages deeper reflection or discussion.

Scoring Guidelines

Add the scores from all three criteria to get a total out of 9 points.

A post scoring 7–9 is highly valuable and worth engaging with.

A score of 4–6 indicates moderate potential—engage if you can add significant value.

Posts scoring 0–3 are unlikely to be worth commenting on.

This post by Nataly Kelly got an overall score of 8:

Authenticity and Depth = 2

The post is informative and shares valuable insights, but it could benefit from a more personal touch or narrative to enhance engagement. While it discusses the importance of consumer insights, it lacks a personal perspective or story that could make it more relatable.

Strategic Hashtags = 3

The post includes relevant hashtags such as #consumertrends, #advertising, and #innovation, which are well-aligned with the content and likely to attract the right audience.

Thought Provoking = 3

The post provides detailed insights into the effectiveness of advertising strategies for quick service restaurants, supported by specific data points regarding brand recall and purchase uplift. It presents actionable trends and encourages deeper thought about the relationship between product presence in ads and consumer response.
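The scoring guidelines amount to a sum followed by a banding rule. The sketch below encodes them in Python and applies them to the Nataly Kelly example above; the criterion keys are hypothetical names, and the range check is an added safeguard against the model returning a score outside 0 to 3.

```python
CRITERIA = ("thought_provoking", "strategic_hashtags", "authenticity_depth")


def total_score(scores: dict[str, int]) -> int:
    """Sum the three criterion scores, checking each is in the 0-3 range."""
    for name in CRITERIA:
        value = scores[name]
        if not 0 <= value <= 3:
            raise ValueError(f"{name} must be between 0 and 3, got {value}")
    return sum(scores[name] for name in CRITERIA)


def engagement_band(total: int) -> str:
    """Map a 0-9 total to the bands in the scoring guidelines."""
    if total >= 7:
        return "highly valuable"
    if total >= 4:
        return "moderate potential"
    return "unlikely to be worth commenting on"


example = {"thought_provoking": 3, "strategic_hashtags": 3, "authenticity_depth": 2}
print(total_score(example), engagement_band(total_score(example)))  # 8 highly valuable
```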

The LinkedIn Scraper Tool is far from perfect. Subsequent iterations will include more sophisticated classification capabilities to refine its selection process further. These enhancements will focus on identifying various types of posts, such as text-only updates, videos, or newsletters. Additionally, the tool will classify posts by their general argument or purpose, distinguishing between announcements, link sharing, or in-depth analysis.

These features aim to make the tool more nuanced and adaptable, ensuring that it continues to align with my goal of producing high-quality, meaningful interactions on LinkedIn.
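One way to prepare for these richer classifications is to give the LLM a fixed set of labels and validate its answers against them. The sketch below is hypothetical: the label values mirror the examples mentioned above (text-only, video, newsletter; announcement, link sharing, in-depth analysis), and the rule that only in-depth analysis is worth commenting on is an assumed policy for illustration.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class PostFormat(Enum):
    TEXT = "text"
    VIDEO = "video"
    NEWSLETTER = "newsletter"


class PostPurpose(Enum):
    ANNOUNCEMENT = "announcement"
    LINK_SHARING = "link_sharing"
    ANALYSIS = "analysis"


@dataclass
class PostLabels:
    post_format: PostFormat
    purpose: PostPurpose


def parse_labels(raw_format: str, raw_purpose: str) -> Optional[PostLabels]:
    """Normalize LLM-produced labels; return None if either label is unknown."""
    try:
        return PostLabels(
            post_format=PostFormat(raw_format.strip().lower()),
            purpose=PostPurpose(raw_purpose.strip().lower().replace(" ", "_")),
        )
    except ValueError:  # Enum lookup fails for values outside the label set
        return None


def worth_commenting(labels: PostLabels) -> bool:
    # Assumed policy: in-depth analysis is the best fit for a substantive comment.
    return labels.purpose is PostPurpose.ANALYSIS
```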

In the following article, I’ll explain how to connect the tools to the agent and share a demo of the agentic system. Stay tuned!

If this email was forwarded to you, or if you found this article on social media, you can sign up to receive an article like this every Sunday.

Thanks for reading this episode of my newsletter. I hope I’ve been helpful. If you think my sketchbook might interest someone else, I’d appreciate it if you shared it on social media and forwarded it to your friends and colleagues.

Nicola