The AI Collaboration Canvas: How to Map Workflows and Delegate Tasks to Artificial Intelligence

The AI Collaboration Canvas helps teams break down complex workflows and choose the right strategy to delegate tasks to AI.

Hi,

Over the past few weeks, I’ve been working on creating and refining a canvas to help teams design their collaboration with AI and integrate synthetic colleagues to whom they can delegate specific tasks. This week, I had the chance to test it during the Product Heroes Conference in Milan.

The workshop participants’ reactions were classic “aha” moments, a clear sign that the canvas addresses a real need. That’s why I’ve decided to share this first version publicly, to collect feedback and keep improving it. Below, you’ll find a short guide on how to use it and a downloadable PDF template.

In the coming weeks, I will be hosting free online seminars. If you’re interested, you can reply to this email or fill out this form: https://forms.gle/z9RmBiNAGy9Rrhqy6

Enjoy

Nicola ❤️

Understanding AI

Delegating to a language model is not the same as delegating to a colleague. When a task is assigned to another human being, one can rely on a shared context: an understanding of business priorities, familiarity with the industry, and the ability to make reasonable inferences even when instructions are partial or ambiguous. A colleague can ask questions, propose alternatives, or adjust the course if circumstances change. None of this can be taken for granted with AI.

Unless the product around it adds a memory feature, a large language model retains nothing from past conversations. It is unaware of your goals unless you state them, and it will often guess rather than ask you for clarification. It can only interpret what you write, and if the instructions are vague or contradictory, the output is likely to be inaccurate, generic, or off target. To further complicate matters, artificial intelligence is not a single, monolithic tool. Instead, it is a heterogeneous set of technologies and usage modes, each with its own specificities and limitations.

Saying “I am delegating to AI” can mean many different things. One might initiate an exploratory conversation with a conversational assistant to generate ideas, create a reusable prompt for systematic data analysis, or utilize software that fully automates a mechanical task. These are three distinct scenarios, each requiring a different approach.

How, then, should one choose the right delegation strategy? There is no universal answer. Each task must be analyzed for what it is: how repeatable it is, how structured it is, and what level of judgment it requires. To assist in conducting this analysis systematically, I developed the AI Collaboration Canvas: a practical tool for mapping a process and assessing its delegability. The canvas unfolds in two phases: the first entails breaking down the workflow into concrete activities; the second involves evaluating each activity along two dimensions—automation and cognitive complexity—which yield four distinct strategies for interacting with AI:

Brainstorming with AI

Reusable Prompt (AI Assistant)

Automated Tool (AI Tool)

Keep It Human

Each strategy represents a distinct approach to collaborating with artificial intelligence. The choice always depends on the nature of the task, not on technological hype or trendy slogans.

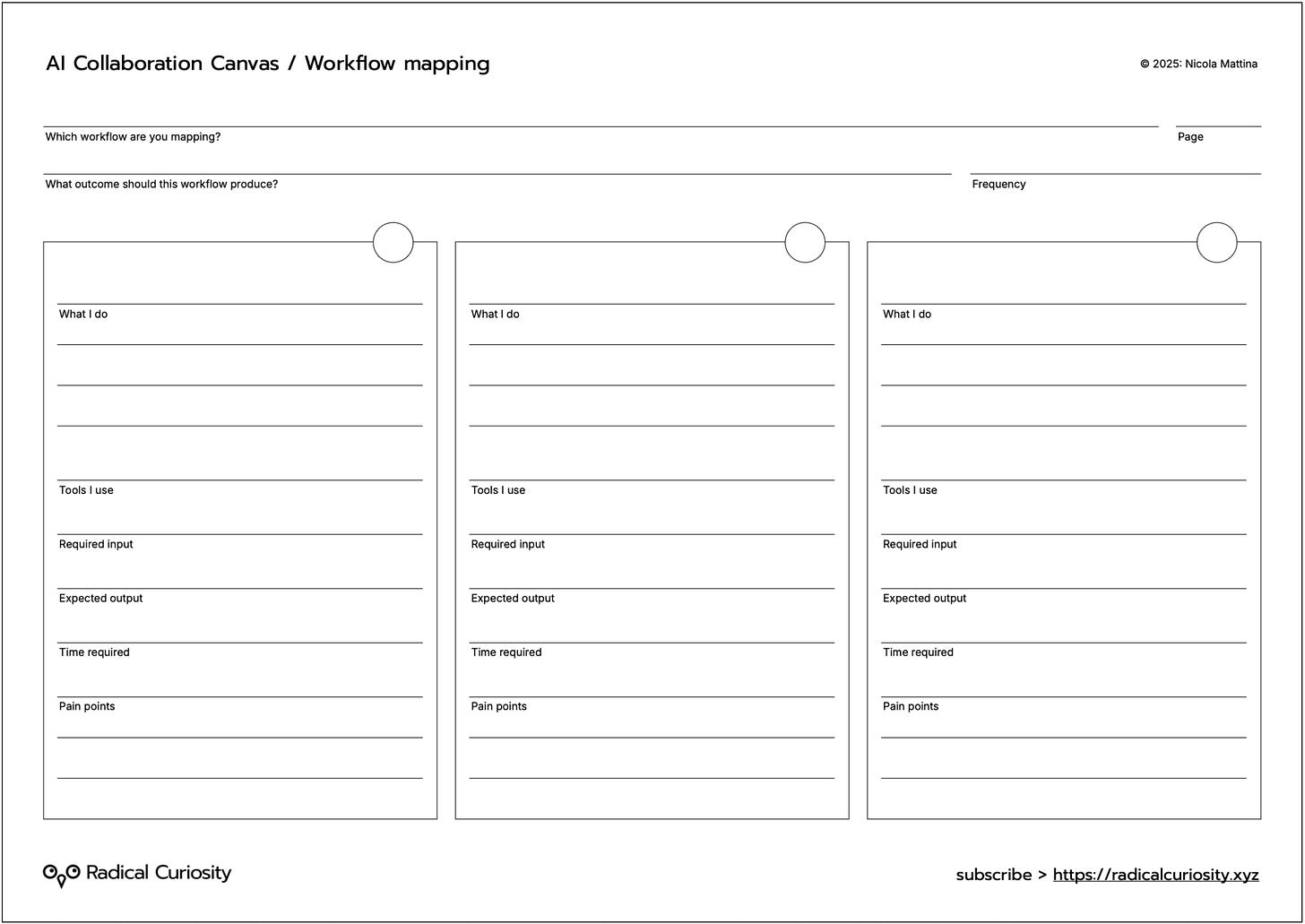

Phase 1. Workflow Mapping

Many people have a solid understanding of their areas of responsibility. They can describe what they do in general terms (“I manage the backlog,” “I coordinate the team,” “I conduct user research”), but when it comes to explaining in detail how they perform a specific task, their account tends to get vague.

Let’s consider a simple example: the last time you wrote a report. How much time did it actually take? What were the exact steps involved? Did you need to retrieve data from multiple systems? Did you copy and paste content from other files? Did you format charts, correct typos, and reread several times? And, more importantly, how much of that time created real value, and how much was spent on repetitive or tedious tasks?

If you can’t answer immediately with precision, don’t worry—this is entirely normal. Most people carry out their work automatically, without actively observing it.

Mapping is a way to make visible what has become routine through repetition. It helps identify, with precision, where there is room for improvement through effective delegation.

The first decision is which process to map. A good candidate has three key characteristics:

It is repetitive. It’s a task you perform regularly—weekly or monthly. Avoid one-off processes: even if they could be delegated, their impact would be minimal.

It is time-consuming. It doesn’t have to be lengthy each time, but over the course of a month it should have a noticeable impact on your productivity. A 40-minute task repeated four times a month adds up to almost three hours, roughly a third of a working day. Regaining even half that time means creating space for more strategic activities.

It has a recognizable structure. Some processes are too complex or entangled to be broken down effectively. Begin by focusing on activities with a clear start, defined steps, and a tangible outcome.

For example: in management control, you might map out the preparation of the monthly forecast, which involves data collection, normalization, synthesis, and review; in HR, the initial screening of CVs is a repetitive process requiring time and focus; in marketing, producing the monthly newsletter follows recurring steps, from content selection to performance analysis; in sales, creating customized proposals involves similar actions each time, though tailored to context; in customer care, managing recurring email or ticket requests is an evident candidate for delegation, especially in its more mechanical phases. And so on.
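The three selection criteria can be turned into a quick self-check. The sketch below is a minimal Python illustration, not part of the canvas itself. The class `CandidateProcess`, the function `is_good_candidate`, and the 120-minutes-per-month cut-off for “time-consuming” are hypothetical choices that you would tune to your own context.

```python
from dataclasses import dataclass

# Hypothetical cut-off: the canvas gives no number for "time-consuming".
MIN_MINUTES_PER_MONTH = 120

@dataclass
class CandidateProcess:
    name: str
    runs_per_month: int        # 0 means a one-off (or rarer) process
    minutes_per_run: int       # realistic time per occurrence
    has_clear_structure: bool  # clear start, defined steps, tangible outcome

    def monthly_minutes(self) -> int:
        return self.runs_per_month * self.minutes_per_run

def is_good_candidate(process: CandidateProcess) -> bool:
    repetitive = process.runs_per_month >= 1  # at least monthly
    time_consuming = process.monthly_minutes() >= MIN_MINUTES_PER_MONTH
    return repetitive and time_consuming and process.has_clear_structure

weekly_report = CandidateProcess("Weekly report", runs_per_month=4,
                                 minutes_per_run=40, has_clear_structure=True)
print(weekly_report.monthly_minutes())   # 160
print(is_good_candidate(weekly_report))  # True
```

Under these assumptions, a 40-minute weekly task totals 160 minutes a month and passes all three checks. A one-off process fails the repetition test however long it takes.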

Once the process is identified, it must be broken down into steps. At the right level of granularity, each step is a meaningful activity with a clear start and end, lasting between 5 and 30 minutes.

For each step, the Canvas provides six fields to consider:

What I do. Describe the task concretely. For example: “I select and download data from the database in CSV format,” “I turn the information my colleagues send me into short news items for the internal newsletter.”

Tool used. What tool or platform do you use? Even “none” is a valid answer if the task is mental or manual.

Input required. What do you need to begin? Data, documents, prior decisions, and collected information.

Output produced. What does the step generate? A document, a file, a list, a decision? It must be tangible.

Time spent. Estimate the actual time honestly, without underestimating or overestimating it. Include interruptions, errors, and repeated attempts.

Pain point. What aspects frustrate you? Is it tedious, repetitive, error-prone, or overly dependent on others? Pain points often signal the clearest opportunities for delegation.
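If you prefer to keep the map in a script rather than on paper, each row of the canvas fits naturally into a small record. This is a sketch under assumptions. The field names mirror the six canvas fields, and `granularity_ok` encodes the 5-to-30-minute guideline for steps. The sample rows, including their tools and timings, are illustrative and not real data.

```python
from dataclasses import dataclass

@dataclass
class WorkflowStep:
    what_i_do: str        # the concrete task
    tool_used: str        # "none" is valid for mental or manual work
    input_required: str   # what you need before starting
    output_produced: str  # the tangible result
    minutes_spent: int    # honest estimate, interruptions and retries included
    pain_point: str       # what makes the step frustrating

    def granularity_ok(self) -> bool:
        # Guideline from the canvas: a step should last roughly 5 to 30 minutes.
        return 5 <= self.minutes_spent <= 30

# Illustrative rows; the tools and timings are hypothetical.
steps = [
    WorkflowStep("Select and download data from the database in CSV format",
                 "database client", "Report request", "CSV file", 15,
                 "Repetitive and error-prone"),
    WorkflowStep("Turn colleagues' updates into short news items",
                 "none", "Emails from colleagues", "Newsletter draft", 45,
                 "Tedious rewriting"),
]

for step in steps:
    note = "" if step.granularity_ok() else "  (outside 5-30 min: split or merge)"
    print(f"{step.minutes_spent:>3} min  {step.what_i_do}{note}")

total_minutes = sum(step.minutes_spent for step in steps)
print(f"Total: {total_minutes} min")
```

A step flagged as too long is usually two activities that are worth mapping separately.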

Phase 2. Task Evaluation

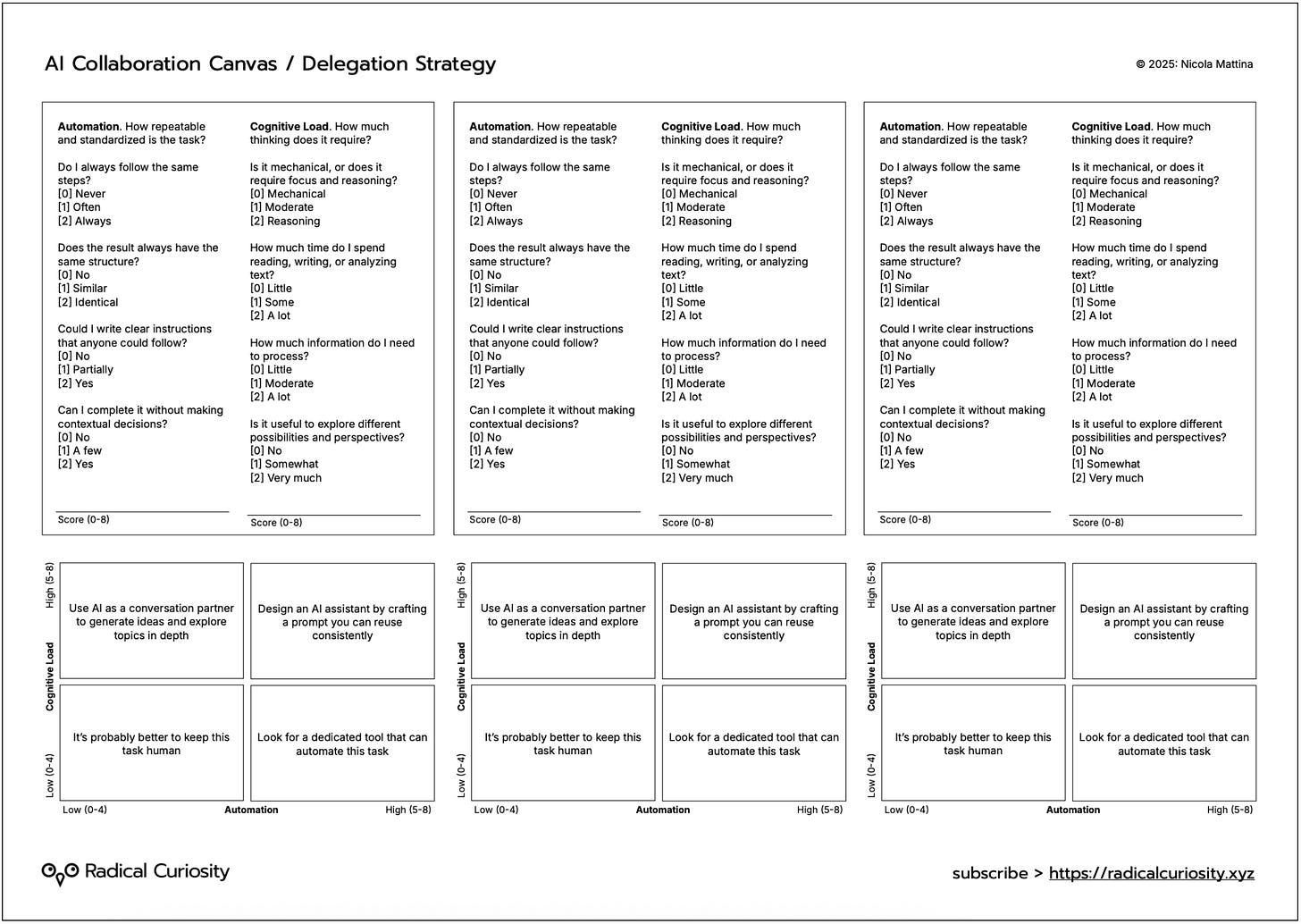

Once the mapping is complete, you have a concrete and detailed representation of your workflow, step by step. But operational clarity alone is not enough to determine what—and, more importantly, how—to delegate. To arrive at a strategy, a second phase is necessary: evaluating each step along two independent yet complementary dimensions—automation and cognitive load.

The first dimension concerns how regularly the task follows a fixed pattern. A highly automatable activity always follows the same structure: same steps, same output, same rules. It doesn’t necessarily require the use of AI—an Excel sheet with macros or a dedicated application may suffice—but it implies the process is formalizable and repeatable.

The second dimension measures the mental effort required. If a task involves reading, writing, interpretation, or content generation, it likely carries a high cognitive load. In such contexts, large language models tend to be particularly effective.

The intersection of automation and cognitive load produces four distinct scenarios, each corresponding to a specific delegation strategy. But before exploring the matrix, both dimensions must be assessed systematically.

The Scoring System

For each workflow step, answer eight questions: four related to automation, four to cognitive load. Each response is scored on a scale of 0 to 2 points. The purpose of this assessment is to make the operational characteristics of each task explicit, enabling informed decisions on how to treat them.

Automation. How standardizable is this step?

Question 1 – Do I always follow the same sequence of steps?

[0] Never. Each time is different; there is no fixed procedure.

[1] Often. Similar procedure, but with frequent variations.

[2] Always. Identical procedure, same sequence every time.

Question 2 – Does the result always have the same structure?

[0] No. The output changes every time.

[1] Similar. A basic structure exists, but with some variability.

[2] Identical. Output is always identical in form.

Question 3 – Could I write clear, detailed instructions?

[0] No. Requires intuition and experience.

[1] Partially. Guidelines are possible, but require interpretation.

[2] Yes. I could write a step-by-step manual that anyone could follow.

Question 4 – Can I complete it without making contextual decisions?

[0] No. Constant decision-making throughout the process.

[1] A few. Occasional, isolated decisions.

[2] Yes. No decisions required, pure execution.

Cognitive Load. How much thinking and language does it require?

Question 5 – Is it mechanical, or does it require focus?

[0] Mechanical. Can be done on autopilot.

[1] Moderate. Requires moderate attention.

[2] Reasoning. Requires continuous reasoning.

Question 6 – Do I primarily work with language?

[0] Little. Visual, operational, or procedural task.

[1] Some. Language is present, but not central.

[2] A lot. Reading/writing are the dominant activities.

Question 7 – How much information do I need to process?

[0] Little. Few, easily manageable data points.

[1] Moderate. Moderate volume.

[2] A lot. Multiple sources, a large amount of content.

Question 8 – Are there multiple ways to perform the task?

[0] No. Only one correct way.

[1] Somewhat. Some possible alternatives.

[2] Very much. Task benefits from exploration.
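The arithmetic of the scoring system fits in a few lines. This Python sketch assumes that answers are recorded as integers in question order. The function names `dimension_score` and `score_step` are illustrative, and the sample answers are made up.

```python
def dimension_score(answers: list[int]) -> int:
    """Add up four answers (each 0, 1, or 2) into a 0-8 score for one dimension."""
    if len(answers) != 4:
        raise ValueError("each dimension has exactly four questions")
    if any(a not in (0, 1, 2) for a in answers):
        raise ValueError("every answer must be 0, 1, or 2")
    return sum(answers)

def score_step(automation_answers: list[int],
               cognitive_answers: list[int]) -> tuple[int, int]:
    """Questions 1-4 feed automation; questions 5-8 feed cognitive load."""
    return dimension_score(automation_answers), dimension_score(cognitive_answers)

# Hypothetical answers for one step, in question order.
automation, cognitive_load = score_step([2, 2, 1, 1], [2, 2, 2, 1])
print(automation, cognitive_load)  # 6 7
```

The validation matters more than it looks. A stray 3 or a missing answer would silently shift a step into the wrong quadrant.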

The 2×2 Matrix: Four Delegation Strategies

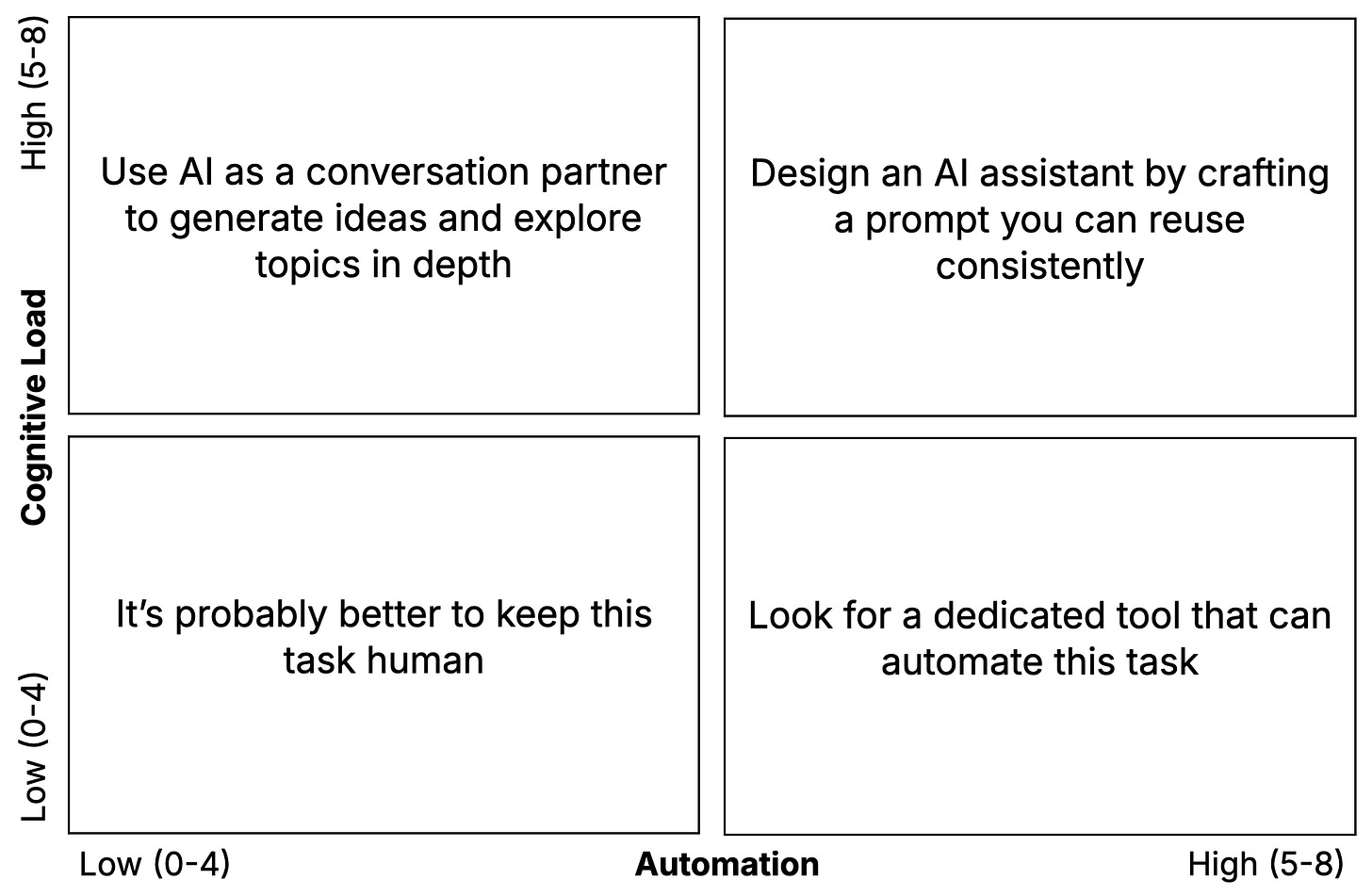

Once the scores have been assigned, you’ll have two values ranging from 0 to 8: the first measures how automatable a step is, the second how cognitively demanding it is. The combination of these two allows you to position each activity within a 2×2 matrix, from which four distinct operational strategies emerge.
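The text does not state exactly where each axis is split into low and high. Assuming a midpoint split (0 to 4 is low, 5 to 8 is high), placing a step in the matrix could be sketched as follows. The threshold and the function name `delegation_strategy` are assumptions. The quadrant logic follows the four strategy descriptions in the rest of this section.

```python
# Assumption: each 0-8 axis is split at its midpoint, so 5-8 counts as "high".
HIGH_THRESHOLD = 5

def delegation_strategy(automation: int, cognitive_load: int) -> str:
    """Map the two dimension scores to one of the four canvas strategies."""
    for score in (automation, cognitive_load):
        if not 0 <= score <= 8:
            raise ValueError("scores must be between 0 and 8")
    high_automation = automation >= HIGH_THRESHOLD
    high_cognitive = cognitive_load >= HIGH_THRESHOLD
    if high_cognitive and not high_automation:
        return "Brainstorming with AI"           # exploratory, no fixed sequence
    if high_cognitive and high_automation:
        return "AI Assistant (reusable prompt)"  # demanding but repeatable
    if high_automation:
        return "AI Tool"                         # procedural, fixed output
    return "Keep It Human"                       # quick, loosely structured

print(delegation_strategy(6, 7))  # AI Assistant (reusable prompt)
```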

Brainstorming with AI

When a task scores low on automation but high on cognitive load, it means it cannot be standardized—it never repeats the same way—and it requires significant reasoning, creativity, and exploratory thinking. These are tasks that require careful consideration: designing a new feature, drafting an interview guide, defining a product strategy, and co-designing a solution with a team. In such cases, talking about “automation” is misleading: there is no repeatable sequence to delegate, but rather a problem to explore.

Here, AI should be used as a thinking partner. Start with a well-defined context, ask open-ended questions, assess the proposals, dig deeper, and iterate. The value of AI lies in its ability to explore many options quickly and, with tools that offer web search or deep-research features, to scan large numbers of sources in minutes.
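Many chat APIs represent a conversation as a list of messages, each tagged with a role (system, user, or assistant). Assuming that convention, the habit of stating the context first and then iterating might look like this. The helper names and the team context are hypothetical, no model is called, and the assistant reply is a placeholder for the model’s actual answer.

```python
Message = dict[str, str]

def start_session(context: str, opening_question: str) -> list[Message]:
    """Open a brainstorming thread: context first, then an open-ended question."""
    return [
        {"role": "system", "content": context},
        {"role": "user", "content": opening_question},
    ]

def follow_up(history: list[Message], assistant_reply: str,
              next_question: str) -> list[Message]:
    """Record the model's proposal and push the exploration one step further."""
    return history + [
        {"role": "assistant", "content": assistant_reply},
        {"role": "user", "content": next_question},
    ]

history = start_session(
    "We are a B2B SaaS team planning user interviews about data exports.",
    "Which user profiles could reveal the most about export pain points?",
)
history = follow_up(
    history,
    "<model reply goes here>",
    "Which of these profiles would be hardest to recruit, and why?",
)
for message in history:
    print(f"{message['role']:>9}: {message['content']}")
```

Keeping the whole thread, rather than only the latest question, is what lets each follow-up build on the previous proposals.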

AI Assistant

Some tasks are cognitively demanding yet highly repetitive. The input changes each time—a new transcript, a different set of feedback, another report to summarize—but the process and the output structure remain consistent. In these cases, AI can perform the task autonomously, but it requires very clear instructions.

The most effective strategy is to formalize the process through a well-crafted prompt. A generic command (“analyze this transcript”) is not enough: you need a document that precisely defines the AI’s role, the type of input to analyze, the structure of the expected output, and the rules to follow. This prompt becomes a reusable asset: create it once, test it on real cases, save it, and reuse it as needed by updating only the input data.
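A prompt like this can live as a template in which everything is fixed except the input. Python’s standard `string.Template` is enough for a sketch. The role, output sections, and rules below are illustrative placeholders to adapt, not a tested prompt, and `build_prompt` is a hypothetical helper name.

```python
from string import Template

# Hypothetical reusable prompt: role, input, output structure, and rules are
# fixed once; only $transcript changes from one run to the next.
TRANSCRIPT_PROMPT = Template("""\
ROLE: You are a user researcher analyzing an interview transcript.
INPUT: The transcript of one qualitative interview, between <transcript> tags.
OUTPUT: A list with three sections: Needs, Frustrations, Quotes.
RULES:
- Use only information present in the transcript.
- Quote the participant verbatim in the Quotes section.
- If a section has no supporting evidence, write "No evidence".

<transcript>
$transcript
</transcript>
""")

def build_prompt(transcript: str) -> str:
    # substitute() raises KeyError if a placeholder is left unfilled
    return TRANSCRIPT_PROMPT.substitute(transcript=transcript.strip())

print(build_prompt("Interviewer: How do you export data?\nP1: By hand, every Monday."))
```

Saving the template alongside a few real transcripts you have already tested it on makes it easier to tell later whether an edit to the prompt improved or degraded the output.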

AI Tool

Some activities are so operational and repetitive that they require virtually no human intervention. These are simple, procedural tasks with a fixed sequence and standardized output. In such cases, the right strategy is to identify a specialized tool that already performs the task. Think of software that transcribes calls automatically or tools like Grammarly that enhance your writing style.

To implement this type of delegation, you need to select the right tool, configure it to meet your needs, test it on real-world examples, and then let it run. Using a general-purpose tool like ChatGPT for such tasks is often inefficient when an optimized solution already exists.
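For a transcription tool, one widely used way to test it on real examples is the word error rate (WER). This is the number of word substitutions, deletions, and insertions needed to turn the tool’s output into a human-checked reference, divided by the number of words in the reference. The following is a minimal sketch, assuming whitespace tokenization and case-insensitive comparison, with no punctuation normalization. What error rate counts as acceptable is your call.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """(substitutions + deletions + insertions) / number of reference words."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("the reference transcript is empty")
    # prev[j]: edit distance between the reference words seen so far and hyp[:j]
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr[j] = min(prev[j] + 1,         # reference word deleted
                          curr[j - 1] + 1,     # extra word inserted
                          prev[j - 1] + cost)  # substitution or match
        prev = curr
    return prev[-1] / len(ref)

reference = "the cat sat on the mat"  # human-checked transcript (toy example)
tool_output = "the cat sit on mat"    # what the tool produced
print(f"WER: {word_error_rate(reference, tool_output):.2f}")  # WER: 0.33
```

Running this on a handful of your own recordings before letting the tool run unattended gives you a concrete basis for choosing between tools.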

Keep It Human

Not everything should be delegated to AI. Some tasks are so quick, simple, or specific that they’re more efficient when handled directly. These are loosely structured, often spontaneous tasks with changing context and limited strategic relevance. In such cases, involving AI may slow you down rather than help. The right approach is not to delegate—or, at most, use AI as a side assistant, for instance, to polish the tone of a hastily written message. But overall management remains manual.

Not every step will fall neatly into a single quadrant. Some will be borderline—scores of 4 or 5, 6 or 7. In such cases, consider the context and decide whether to keep the task in its assigned quadrant or move it to an adjacent one by reconsidering one of the scores. The scoring system is not a mathematical truth—it’s a lens. Its purpose is to help you think better, not decide for you.
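If you record scores in a script, you can flag borderline steps for a second look. This sketch assumes that each 0-8 axis is split between 4 and 5, so those two values are treated as borderline. Both the split and the function name `review_note` are hypothetical.

```python
# Assumption: each axis is split between 4 (low) and 5 (high); scores right
# next to that boundary are the ones worth a second look.
BORDERLINE_SCORES = {4, 5}

def review_note(automation: int, cognitive_load: int) -> str:
    """Say which dimensions, if any, deserve a second look in context."""
    axes = [name for name, score in (("automation", automation),
                                     ("cognitive load", cognitive_load))
            if score in BORDERLINE_SCORES]
    if not axes:
        return "Clear placement"
    return "Borderline on " + " and ".join(axes) + ": reconsider in context"

print(review_note(6, 7))  # Clear placement
print(review_note(5, 4))  # Borderline on automation and cognitive load: reconsider in context
```

The function only points at the scores to revisit. Whether to move the step stays a judgment call.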

A Concrete Example

To clarify the method, let’s analyze a typical process in product management: conducting qualitative user interviews.

This is a key activity for gathering firsthand insights into people’s real needs, frustrations, everyday behaviors, and the context in which they use (or could use) a product. These conversations help the team break free from their own assumptions and develop more relevant, user-centered solutions.

It’s a process that requires a significant investment of time and energy: preparing interview guides, conducting conversations, transcribing, analyzing, and synthesizing. For this reason, especially in small or overworked teams, qualitative interviews often end up being postponed, shortened, or excluded from the development cycle altogether.

Qualitative interviews meet all three criteria discussed earlier and represent an ideal case for experimenting with systematic AI support. They are recurring tasks, cyclically present in the team’s work. While not complex in every single phase, they add up to a significant effort over time. They also have a recognizable structure: each interview has a clear beginning (goal setting and user profile definition), codifiable steps (drafting the guide, data collection, and analysis), and a tangible output (structured insights, an empathy map, and a report). These characteristics make the process not only suitable for delegation but an especially promising candidate: even partial AI support can save time, maintain quality, and ease cognitive load.

Phase 1. Workflow mapping

If you’ve never conducted a qualitative interview, below is an overview of the main steps, with a short description of what each entails and the most common difficulties encountered:

Define the target profile

The first step is to clearly identify the type of person you want to interview. The goal is to create a document that describes the key characteristics of the desired profile (e.g., professional role, industry, relevant behaviors) and includes any inclusion/exclusion criteria for recruiting.

Challenge: Determining the proper segmentation criteria to ensure a meaningful profile.

Prepare the interview guide

A structured script is created to guide the conversation. The guide includes mostly open-ended questions, organized in logical sections (introduction, exploration, deep dives, closing). While the conversation may deviate, having a guide ensures consistency across interviews.

Challenge: Avoiding leading questions and leaving room for spontaneous exploration.

Transcribe the interview

Once the interview (usually conducted via a call) is complete, the next step is to convert the audio into text.

Challenge: When done manually, this step is tedious and time-consuming.

Extract insights and build the empathy map

With the transcription in hand, it’s time to analyze and synthesize the content. This begins with identifying key insights, including needs, frustrations, expectations, and behaviors. These are then reorganized into a visual framework—typically an empathy map—that breaks down the user experience into four quadrants: what the user thinks, feels, says, and does.

Challenge: This task requires focus and synthesis skills. It involves condensing a large volume of text into a visual summary. The risk is getting lost in minor details or producing overly generic representations.
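The four-quadrant structure of an empathy map lends itself to a simple record. This also makes it easy to spot a quadrant that has been left empty. The following is a Python sketch. The class name and the sample insights are hypothetical.

```python
from dataclasses import dataclass, field

QUADRANTS = ("thinks", "feels", "says", "does")

@dataclass
class EmpathyMap:
    participant: str
    thinks: list[str] = field(default_factory=list)
    feels: list[str] = field(default_factory=list)
    says: list[str] = field(default_factory=list)
    does: list[str] = field(default_factory=list)

    def add(self, quadrant: str, insight: str) -> None:
        if quadrant not in QUADRANTS:
            raise ValueError(f"unknown quadrant: {quadrant!r}")
        getattr(self, quadrant).append(insight)

    def empty_quadrants(self) -> list[str]:
        """Quadrants with no evidence yet: a hint the synthesis may be too thin."""
        return [q for q in QUADRANTS if not getattr(self, q)]

p1 = EmpathyMap("P1")
p1.add("says", "I export the same report every Monday.")
p1.add("feels", "Frustrated by manual, repetitive exports")
print(p1.empty_quadrants())  # ['thinks', 'does']
```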

Identify cross-cutting patterns

After creating an empathy map for each participant, the final step is to analyze the material comparatively. Look for recurring themes, significant differences, and emerging trends.

Challenge: Striking a balance between fidelity to the data and useful abstraction. Analytical skill is needed to distinguish real patterns from isolated coincidences and to determine what is relevant for product decisions.
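Counting how many participants mention a theme is a useful first filter for separating patterns from isolated coincidences. Deciding what matters for the product, however, remains a human judgment. The following is a minimal sketch. The 50% threshold, the function name, and the interview data are all hypothetical.

```python
from collections import Counter

def recurring_themes(themes_per_interview: dict[str, set[str]],
                     min_share: float = 0.5) -> dict[str, int]:
    """Return themes raised in at least `min_share` of the interviews.

    Each interview contributes a theme at most once (the values are sets),
    so one talkative participant cannot turn a single remark into a pattern.
    """
    n = len(themes_per_interview)
    if n == 0:
        return {}
    counts = Counter(t for themes in themes_per_interview.values() for t in themes)
    return {t: c for t, c in counts.most_common() if c / n >= min_share}

interviews = {
    "P1": {"manual exports", "unclear pricing"},
    "P2": {"manual exports"},
    "P3": {"manual exports", "slow onboarding"},
    "P4": {"unclear pricing"},
}
print(recurring_themes(interviews))  # {'manual exports': 3, 'unclear pricing': 2}
```

A theme that falls below the threshold is not necessarily irrelevant. A single strong signal can still deserve attention, which is why the output is a shortlist for review, not a verdict.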

Phase 2. Task evaluation

Let’s now move on to Phase 2 and evaluate each step.

Defining the Target Persona

This step never follows a fixed script. Each research project stems from different needs, poses different questions, and targets heterogeneous profiles. There is no universal procedure: sometimes it starts from business objectives, other times from hypotheses to validate, or from known users to explore in more depth.

Defining a user persona is a deeply qualitative task that requires intuition, abstraction, and judgment. In such cases, AI serves as a thinking partner, placing this task firmly in the Brainstorming with AI quadrant: describe the context, ask open-ended questions, gather suggestions, explore alternatives, and refine.

Preparing the Interview Guide

The guide must also be tailored each time: objectives shift, topics evolve, and what works in one interview may be counterproductive in another.

Here again, AI is valuable as a creative partner: it can help generate initial drafts, suggest phrasings, and flag leading questions. But you’re in charge—deciding what to keep, revise, or discard. This, too, belongs in Brainstorming with AI.

Transcribing the Interview

Interview transcription is a repetitive, standardized, low-value task. An automated tool performs it better, faster, and without distractions.

No prompt or interaction is required: set it once and let it run. This is the archetype of the AI Tool strategy.

Extracting Insights and Building the Empathy Map

Once the interview is transcribed, the next step is to interpret the content. This process requires focus, synthesis, and the ability to recognize patterns. Crucially, however, its structure is repeatable: while the insights vary, the way you collect, organize, and present them tends to follow a familiar pattern.

That’s why the most effective strategy is the AI Assistant: a clear, formalized delegation that instructs the AI on what to look for and how to format the output. Once the framework is defined (e.g., pain points, motivations, workarounds), the AI can deliver a solid first synthesis for you to review and refine.
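One way to make “how to format the output” enforceable is to ask the model to answer in JSON, with one key per category of the framework, and to reject replies that don’t match. The three keys come from the example framework above (pain points, motivations, workarounds). The JSON shape, the function name, and the sample reply are assumptions. No model is called here.

```python
import json

# Categories from the example framework; the JSON shape is an assumption.
REQUIRED_KEYS = ("pain_points", "motivations", "workarounds")

def parse_insights(model_output: str) -> dict[str, list[str]]:
    """Check that the AI's reply follows the agreed format before you review it."""
    data = json.loads(model_output)  # raises json.JSONDecodeError (a ValueError)
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    missing = [k for k in REQUIRED_KEYS if k not in data]
    if missing:
        raise ValueError(f"missing sections: {', '.join(missing)}")
    for key in REQUIRED_KEYS:
        if not isinstance(data[key], list) or not all(isinstance(x, str) for x in data[key]):
            raise ValueError(f"section {key!r} must be a list of strings")
    return {k: data[k] for k in REQUIRED_KEYS}

reply = ('{"pain_points": ["Manual weekly exports"], '
         '"motivations": ["Save time on reporting"], '
         '"workarounds": ["Spreadsheet macros"]}')
insights = parse_insights(reply)
print(insights["pain_points"])  # ['Manual weekly exports']
```

A format check like this catches structural slips automatically. It does not check whether the insights are accurate, so the human review step stays in place.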

Identifying Patterns Across Empathy Maps

Following individual analysis, it’s time to take a broader view: what emerges from comparing all the interviews? What are the recurring themes, shared frustrations, and everyday needs?

Here too, we face a high cognitive load but a stable structure: the expected output (a set of organized, relevant patterns) can be clearly defined, and AI can provide adequate support. The strategy is the AI Assistant: apply the appropriate prompt across multiple empathy maps, and ask for syntheses, clusters, and thematic groupings. The human’s role remains to validate, refine, and interpret.

In summary, we are dealing with a process in which the collaboration strategy between humans and artificial intelligence can be outlined as follows:

The initial steps, which are more exploratory in nature, are best suited to open-ended interactions with AI. The central and final steps, which are more structured, benefit from codified prompts. One step—transcription—is so operational that it can be entirely handled by a tool without human intervention.

The benefit is not just the time saved (which is substantial), but also the optimization of mental energy. By automating repetitive tasks and intelligently delegating those that require cognitive effort, you free yourself to focus on what truly matters: strategic decisions, the quality of synthesis, and the product’s overall impact.

Download the PDF version of the AI Collaboration Canvas. I invite you to use it and share your thoughts with me. Was it easy to use? Were you able to map out a process and define an effective strategy for collaborating with artificial intelligence?