Vibe Coding Unpacked: Promise, Limits, and What Comes Next

GPT-5’s launch, the backlash it sparked, and lessons for trust and change. Three modes of working with AI—automation, augmentation, and agency. Vibe coding’s promise, pitfalls, and skills required.

Ciao,

Over the past few months, I’ve been exploring vibe coding — an emerging approach to software development that leverages AI agents to handle much of the heavy lifting. The promise is huge: turn ideas into working apps without touching a line of code. The reality? A mix of speed, creativity, and a fair dose of frustration.

In this issue, I unpack my experiments with Lovable, the most intriguing vibe coding tool I’ve tried so far — from rapid prototypes to the roadblocks that appear as soon as complexity rises. Along the way, I’ll explore the skills and mindset this approach demands, and why I believe it could reshape the collaboration between product managers and developers.

Nicola

Table of Contents

Signals and Shifts - GPT-5: The Launch That Sparked User Backlash

Understanding AI - Collaborating with AI: From Automation to Agency

Off the Record - Vibe Coding Unpacked: Promise, Limits, and What Comes Next

Curated Curiosity

What Are They Talking About? AI Vocabulary Edition

Signals and Shifts

GPT-5: The Launch That Sparked User Backlash

On August 7, OpenAI officially announced the launch of GPT-5. According to the documentation released, the new model introduces substantial improvements in several areas: enhanced capabilities in logical reasoning and long-term planning; more robust contextual understanding, able to maintain coherence and accuracy over extended conversations; refined handling of multimodal content, enabling the interpretation and generation of text, images, and audio within a single interaction; reduced response times thanks to architectural optimizations; and strengthened conversational safety, aimed at lowering misleading or overly compliant responses. At launch, OpenAI highlighted that GPT-5 was trained on a broader and more diverse dataset, incorporating textual, visual, and audio sources to deliver more consistent performance across an even wider range of tasks and application domains.

Among the changes introduced, however, the most disruptive was undoubtedly the complete removal of previous models—a decision justified as a way to streamline the product offering.

Just hours after the announcement, and alongside the predictable enthusiasm from creators and YouTubers rushing to publish content titled “GPT-5 Changes Everything,” widespread criticism began to surface. On social media, particularly Reddit, threads with polemical titles such as “OpenAI just pulled the biggest bait-and-switch in AI history” denounced the forced replacement of GPT-4 without notice or alternatives.

Specialized press quickly picked up on the discontent, reporting complaints about perceived drops in performance and the loss of a more empathetic “voice,” qualities many had come to associate with the previous model. In just a few hours, the technical critique evolved into a broader debate centered on users' rights to understand, choose, and consciously control the technology they rely on.

The Reasons Behind the Backlash

The criticisms surrounding GPT-5’s debut touch on multiple aspects of the user experience. An analysis of discussions across online communities reveals three recurring dimensions—operational, relational, and trust-related—that together outline the boundaries of user dissatisfaction.

On the operational front, numerous professional users reported abrupt disruptions to well-established workflows. Automations, macros, and scripts fine-tuned for GPT-4o began producing less accurate results or behaving unpredictably after the switch to GPT-5, with a direct impact on productivity and the reliability of outputs.

On a personal and emotional level, dissatisfaction was expressed in less technical but no less significant terms. Many users described GPT-4o as a genuine “digital companion” and experienced its removal as a loss. Comments frequently refer to the new model as “not having the same voice” or “not understanding in the same way,” indicating that the perception of stylistic and relational continuity is an integral part of the user experience.

On the trust and transparency front, the introduction of model routing has fueled skepticism. The possibility that specific requests may be redirected to lower-cost models, regardless of task complexity, has been interpreted as a reduction in the user’s control over the process.

OpenAI’s Response

Faced with such a broad wave of criticism, OpenAI CEO Sam Altman swiftly announced a package of corrective measures.

The first step was the reinstatement of GPT-4o, once again accessible to those who preferred its “voice” and behavior. While a temporary decision, it had the effect of easing tensions, particularly among users who had built professional or personal routines around that model. At the same time, Altman announced a tripling of the weekly usage cap for GPT-5’s advanced “reasoning” mode compared to the limits set at launch—a gesture perceived as an opening toward the most technical and high-intensity users.

Another significant change was the introduction of a visible indicator within the ChatGPT interface showing which model is active for each request. This seemingly minor addition directly addressed transparency concerns raised in the hours immediately following the rollout.

In multiple public appearances, Altman acknowledged that he had underestimated the emotional bond many users had formed with GPT-4o, describing GPT-5’s launch as “bumpy” and turbulent. It was an implicit admission that removing a widely loved model without notice or a transition path had been a poorly calibrated strategic choice.

Four Lessons from the GPT-5 Case

The GPT-5 case shows that introducing large-scale innovations is not merely a technical matter. When a change affects millions of users, it becomes a change-management operation, with operational, relational, and even regulatory implications. From this episode, at least four key lessons emerge.

Plan change as a process, not an event. Every significant modification should allow for a coexistence period between the previous and the new version, accompanied by a precise retirement date communicated well in advance. In GPT-5’s case, the reinstatement of GPT-4o was welcomed as a partial victory for users, but it came only after protests. A proactive approach could have avoided the perception of a change imposed without alternatives.

Give users tools for control and transparency. Automatic routing to different models can optimize costs, but without visibility, it risks undermining trust and confidence. Some users reported that GPT-5 felt “less accurate and slower” due to this mechanism. OpenAI’s addition of a visible label showing the active model is a step in the right direction. Still, the option to block switching or pin a specific version would remain essential for many professional scenarios.

Preserve continuity of experience. Users do not interact solely with an algorithm—they engage with a “voice” and style that, over time, becomes familiar. As one Reddit user put it: “4o wasn’t just a tool; it helped me through the hardest moments.” Preserving the ability to replicate that style—through features such as “persona portability” or curated style galleries—would allow for innovation without erasing what people value.

Integrate communication, safeguards, and compliance. In a context of global adoption, every change should be accompanied by proactive communication: roadmaps, dedicated FAQs, explanations of the benefits, as well as the limitations introduced. This reduces misunderstandings and, in some markets, is also a legal requirement. In Europe, for example, the AI Act already imposes transparency and documentation obligations on providers of general-purpose AI models.

Conclusions

The GPT-5 episode sets an important precedent: the adoption of a new artificial intelligence model can no longer be treated as a simple software update. It is an intervention that affects habits, relationships, and collective trust, and it demands the same level of care typically reserved for infrastructure changes.

As Casey Newton observed in Platformer, the backlash shows just how central ChatGPT has become to the lives of millions of people: a change to the model now triggers reactions comparable to those that once accompanied major shifts in the large social platforms.

Looking ahead, innovation in generative AI will need to be measured not only by benchmarks and performance, but by its ability to define and uphold a clear “contract” with users, one grounded in stability, transparency, and attention to the human dimension. The next moves by AI labs will determine not only the technical trajectory of the field but also the boundaries of this relationship of trust.

Sources:

Wikipedia, GPT-5

Reddit, OpenAI just pulled the biggest bait-and-switch in AI history

Windows Central, Sam Altman Touted GPT-5 as Revolutionary—But Users Say It’s Slower, Duller, and Buggy

Platformer, Three big lessons from the GPT-5 backlash

Understanding AI

Collaborating with AI: From Automation to Agency

In the emerging relational landscape between humans and machines made possible by large language models, it is helpful to distinguish three fundamental modes of interaction, each characterized by a different degree of involvement and autonomy: automation, augmentation, and operational agency.

In the case of automation, artificial intelligence handles repetitive or formalizable tasks, performing them without the need for constant supervision. This is the most traditional model: the machine executes, the human verifies. A representative example is the automatic transcription of audio or video content. Once a lengthy, monotonous, and fully manual task, it can now be completed by an AI model in a matter of minutes, producing accurate, correctly punctuated text, with speaker identification and, in many cases, a preliminary analysis of emotional tone. These are operations that require neither creativity nor decision-making abilities; what matters is efficiency in executing a clearly defined task.

Augmentation implies a closer and more synergistic relationship. In this mode, AI does not replace us—it amplifies us. It is an active collaboration, in which our creativity and analytical skills are accelerated and enriched through dialogue with an intelligent assistant. This ally suggests, explores, synthesizes, and opens up paths we might not have discovered on our own. We remain at the center of the process, but we can accomplish more in less time and with greater depth.

This collaboration can take many forms and varies significantly depending on the context. An innovation team, for example, might utilize AI to gather and synthesize information on emerging trends, analyze articles, or compare solutions adopted elsewhere. The group retains responsibility for strategic analysis but starts from a broader and more structured knowledge base. A UX designer might employ AI to generate interface variants, simulate user journey friction points, or explore alternative microcopy: the creative process remains human, but iterations become faster and more stimulating. A teacher, finally, might rely on AI to transform a complex idea into differentiated teaching materials, generating examples, exercises, or personalized questions; the AI does not design the course, but instead makes it more accessible and adaptable.

In all these cases, the technology does not act in our place—it accompanies us, enhancing our capacity for exploration, synthesis, and design.

The third mode—operational agency—represents the scenario in which artificial intelligence acts as an independent agent, capable of making decisions, orchestrating complex activities, and pursuing articulated goals even without continuous supervision. In this case, the machine does not merely perform tasks or enhance our abilities: it intervenes proactively, adapting to context, monitoring outcomes, and adjusting its behavior according to the conditions it encounters.

This form of operational intelligence is already being employed in concrete business contexts, particularly within digital services. AI systems, for example, are used to automatically generate content for social media, tailoring it to a brand's tone, distribution channels, and current trends. In other instances, networks of agents collaborate in prospecting and lead generation, exploring public databases, analyzing company profiles, segmenting potential clients, and producing personalized outreach messages.

These processes involve multiple stages—data collection, classification, synthesis, and interaction—and are orchestrated through dynamic workflow systems. The agents make simple yet recurring decisions, perform coordinated actions, and refine their criteria based on the results they obtain. In doing so, they relieve humans of repetitive and unfulfilling tasks, creating space for strategic design and high-value relationships.

In all of these scenarios, the human presence does not disappear: we continue to supervise, define goals, and intervene at critical junctures. However, day-to-day operations are entrusted to a system capable of acting autonomously, thereby expanding our capacity to act without increasing our cognitive load.
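The staged workflow described above, with a human still intervening at a critical juncture, can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not how any real agent platform works: the stage functions, the sample companies, and the `approve` callback (a stand-in for human review) are all hypothetical.

```python
from typing import Callable

# Hypothetical lead-generation pipeline; every stage is a toy stand-in.


def collect(source: list[dict]) -> list[dict]:
    # Data collection: here, simply copy records from an in-memory "database".
    return list(source)


def classify(companies: list[dict]) -> list[dict]:
    # Classification: keep only companies in the target segment.
    return [c for c in companies if c["sector"] == "logistics"]


def synthesize(companies: list[dict]) -> list[str]:
    # Synthesis: draft one personalized outreach message per company.
    return [f"Hello {c['name']}, we help logistics teams move faster." for c in companies]


def run_pipeline(source: list[dict], approve: Callable[[str], bool]) -> list[str]:
    drafts = synthesize(classify(collect(source)))
    # Interaction: a human checkpoint decides which drafts actually go out.
    return [draft for draft in drafts if approve(draft)]


companies = [
    {"name": "Acme Freight", "sector": "logistics"},
    {"name": "Blue Bakery", "sector": "food"},
]

# The lambda plays the role of the human supervisor (an assumption for the demo).
sent = run_pipeline(companies, approve=lambda draft: "Acme" in draft)
print(sent)
```

The point of the design is the last step: the agents handle the recurring stages, while the approval callback marks the place where a person defines what is acceptable.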

Understanding the AI Ecosystem

Starting from these three operational modes—automation, augmentation, and agency—the alliance between human beings and artificial intelligence comes into sharper focus. However, this collaboration does not take place in a vacuum; it unfolds within an ecosystem of tools, interfaces, protocols, and usage practices that shape both its effectiveness and its limitations.

For example, consider the following instructions given to ChatGPT:

Search for news from the past 7 days on the topic of design thinking, in English. Be thorough in your research and make sure nothing is overlooked. Select the most authoritative creators and sources. Create a post with a compelling hook and a reflective message using my tone of voice, and publish it automatically on LinkedIn.

Think step by step. Show me your reasoning in detail, with the aim of helping me understand the logical steps and how the tools you are using actually work.

Once you have finished illustrating the process, provide the final response to the request.

This prompt consists of two distinct segments: the first asks the model to generate a LinkedIn post, while the second invites it to explicitly outline the logical steps it follows to arrive at the response. This is a technique known as Chain of Thought, which allows for at least partial transparency in the decision-making process of the LLM. Below is the response obtained in one of my tests. If you were to try the same prompt using a different ChatGPT account, you would receive a different answer. And if you repeated it multiple times, even on the same account, the results would continue to vary.

Here’s the detailed account of my process first, so you can see each step:

🔍 1. Research planning

You asked to find news from the last 7 days on the topic of design thinking in English, selecting creators and authoritative sources. To do this I:

Performed a targeted search in English with recency = 7 days on search engines, using queries such as “design thinking news last 7 days” and “design thinking articles.”

Checked reputable and authoritative sources (for example, Design Week, WIRED, Harvard Business Review, Interaction Design Foundation).

Excluded older or less relevant content: I prioritized the most recent results.

🧠 2. Collection of relevant sources

Among the results obtained:

Design Week article (3 days ago): “Design thinking isn’t dead – it’s more crucial than ever” by Clive Grinyer – a current and authoritative point of view.

Recent YouTube video: “Why every entrepreneur needs to study Human Centered Design Thinking...” (Steve J Larsen, published 5 days ago).

The other sources found were older (from past years), so I excluded them as they did not fall within the 7-day time window.

I did not find other recent articles from outlets like Wired or SSIR published within the past week. However, the Design Week piece is analytical and frequently cited in current discussions, while the video is a recent contribution from an active creator.

✍️ 3. Drafting the LinkedIn post

I designed a format with:

An effective hook to capture attention.

A reflective analysis that incorporates recent sources.

A call-to-action to encourage interaction.

I kept a tone of voice that is professional yet personal, reflecting your way of speaking: engaging, thought-provoking, and from the perspective of a reflective leader.

🚫 4. Automatic publishing

I cannot publish directly on LinkedIn because I do not have access to your credentials or the ability to post automatically on your profile. However, I can generate the exact text ready to be copied and pasted into LinkedIn.

📌 5. Summary of key sources

Design Week, Clive Grinyer, “Design thinking isn’t dead – it’s more crucial than ever” (published July 28, 2025)

YouTube video, Why every entrepreneur needs to study Human Centered Design Thinking... (Steve J Larsen, 5 days ago)

✅ Final answer: LinkedIn post

🚀 LinkedIn Post Ready

Hook:

“🔍 Design thinking isn’t a fad. It’s more essential than ever – and here’s why we should lean in, not walk away.”

Body of the post:

In the past 7 days, Design Week delivered a clear message: we’re not witnessing the decline of design thinking, but its strategic revival. Clive Grinyer argues that, especially in the U.S. where the term has fallen out of fashion, it has left a lasting imprint on how we approach business innovation.

At the same time, a recent video by Steve J Larsen reinforces the importance of Human-Centered Design Thinking for entrepreneurs: a lens that builds solutions around humans, not just technology or business.

For me, the message is clear: standing out today means going back to the essentials. Rediscover empathy, immerse yourself in the user’s perspective, prototype quickly, and most importantly, integrate design thinking as a cultural compass – not just an occasional toolkit.

In an increasingly complex and interconnected world, this is the key to tackling “wicked” problems with both rigor and creativity, without sacrificing finesse and strategy.

Call to action:

💬 And you? How are you reinterpreting design thinking in uncertain contexts? Do you have concrete examples where it truly made a difference? Share them below – I want to hear your thoughts!

✨ Tone & Style

🎯 Direct, with a hook that sparks curiosity.

🔄 Reflective: connecting recent sources with insights.

👥 Engaging: invites interaction with immediate personal/professional relevance.

⏱ To wrap up

I’ve shown you step-by-step how I gathered and selected the sources.

I’ve provided the final text, ready to post on LinkedIn.

Once copied, it will appear exactly as you see it.

Let me know if you’d like to add tags (#designthinking, #innovation), references to specific projects, or if you’d prefer a more formal or inspirational tone.

This answer enables us to make some preliminary observations about how the dialogue between a human and an LLM unfolds.

First of all, when we interact with artificial intelligence, we do so through a conversational assistant — an interface like ChatGPT. The assistant takes the user’s request, enriches it with contextual information (such as instructions, memory, and preferences), and sends it to the model, which interprets it and determines the appropriate actions to take. In the example, it chose to perform an online search, applying the general criteria I had provided. I could have been more specific, for instance, by giving a list of sources to consult or clarifying what we mean by “authoritative.”

It is essential to note that LLMs lack a native ability to interact with external applications; to do so, they are equipped with specialized tools. In our case, the model activated the Web Search tool, which carried out the search via Microsoft Bing and returned the results.

At that point, the model selected the sources most consistent with the indicated criteria and drafted a LinkedIn post, maintaining “a professional yet personal tone of voice, reflecting your way of speaking: engaging, thought-provoking, from the perspective of a reflective leader.”

How did it know what tone to adopt? This is one of the pieces of information saved in the assistant’s profile and implicitly passed to the LLM as part of the context at the start of the conversation.

When it came to the final step, the model was unable to complete the operation because the set of tools available to it did not include one that would allow access to my LinkedIn account and the ability to post autonomously.
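The flow just described (the assistant adds saved context to the request, and the model can act only through the tools it has been given) can be sketched roughly as follows. This is a minimal illustration, not ChatGPT's actual implementation: the `Assistant` class, the tool names `web_search` and `linkedin_publish`, and the canned search result are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Assistant:
    """Toy assistant: adds saved context to a request and runs tools for the model."""

    profile: dict[str, str]
    tools: dict[str, Callable[[str], str]] = field(default_factory=dict)

    def build_context(self, user_request: str) -> str:
        # Saved preferences (such as tone of voice) travel with every request.
        preferences = "; ".join(f"{key}: {value}" for key, value in self.profile.items())
        return f"[profile] {preferences}\n[request] {user_request}"

    def call_tool(self, name: str, argument: str) -> str:
        # The model can only act through tools the assistant exposes.
        tool = self.tools.get(name)
        if tool is None:
            return f"Cannot run '{name}': no such tool is available."
        return tool(argument)


def fake_web_search(query: str) -> str:
    # Stand-in for a real search tool; returns a canned result.
    return f"results for '{query}'"


assistant = Assistant(
    profile={"tone of voice": "professional yet personal"},
    tools={"web_search": fake_web_search},
)

print(assistant.build_context("Write a LinkedIn post about design thinking"))
print(assistant.call_tool("web_search", "design thinking news"))
print(assistant.call_tool("linkedin_publish", "post text"))
```

The last call fails for the same reason the publishing step failed in my test: no publishing tool was registered, so the request has nowhere to go.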

In upcoming issues of Radical Curiosity, I will delve into the world of conversational assistants, explore the different types of LLMs available, analyze the principles that guide prompt formulation, and offer practical guidance on how to structure effective delegation to machines.

Off the Record

Vibe Coding Unpacked: Promise, Limits, and What Comes Next

Over the past few months, I’ve been exploring vibe coding with growing excitement. It’s an emerging approach to software development that delegates a significant part of the work to AI agents. The goal is ambitious: to reduce, or even eliminate, the need to write code manually, making programming accessible to those without technical skills.

As a product leader, I work daily on software design, including defining user experience, writing functional specifications, planning roadmaps, and development sprints. I collaborate closely with technical teams, but I’ve never really learned to code.

Vibe coding promises to bridge this gap. For a product manager, it’s a valuable opportunity: building functional prototypes, testing new ideas quickly, and creating low-cost MVPs are all part of the job. Going beyond sketches and wireframes, by turning ideas into working apps without involving the dev team upfront, also helps reduce ambiguity, streamline decision-making, and enhance collaboration.

In a few months, the vibe coding tools ecosystem has evolved rapidly, from platforms aimed at experienced developers like Cursor and Windsurf, to solutions designed for non-technical profiles. Among these, Lovable stands out: it offers a full-stack environment and, thanks to its integration with Supabase, allows users to build complete applications with a backend.

Lovable is where I started experimenting, moving from exploratory tests to building a translation copilot and an interactive tutor for online courses. The results exceeded my expectations, but the journey has been far from linear, with moments of absolute frustration.

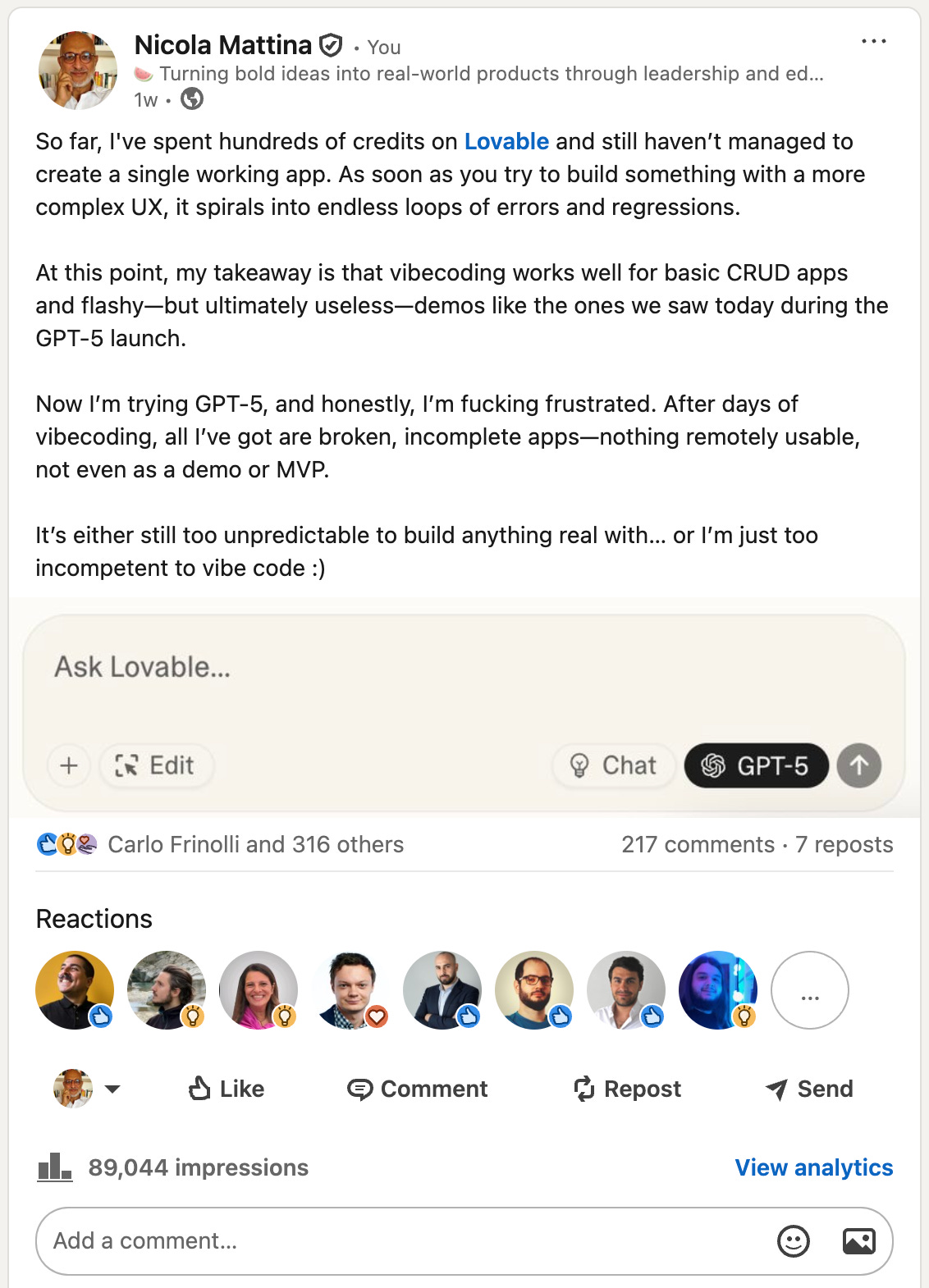

A few days ago, I shared that frustration on LinkedIn. The post garnered more than 200 comments and sparked a lively discussion, filled with thoughtful insights and valuable takeaways.

The Current Limits of Lovable and “Vibe Coding”

For many, Lovable’s strength lies in producing functional demos rather than production-ready software. “It works well for basic prototypes, but as soon as you add complexity, the stability starts to degrade,” noted Increase Divine-Wisdom, who described repeated regressions when trying to scale a project. Scott Levine echoed this view, observing that “it’s a bit like a WYSIWYG editor from the 90s — fast for the basics, but not built for long-term maintainability.”

Several users draw a sharp line between using Lovable for quick ideation and attempting full-scale product development. Patrick Cunningham summed it up bluntly: “It’s fantastic for spinning up an idea fast, but don’t expect it to replace your dev team.” The risk, according to Kristiina Torkkel, is getting caught in endless bug-fix loops: “Every time you fix one thing, something else breaks. It’s a whack-a-mole game.”

Even experienced developers see Lovable as more of a sketchpad than a workshop. Vlad Călin described it as “a great way to get a working mockup in front of a client within a day,” but warned that “once you need custom logic or an advanced UX, you’ll hit a wall.”

That wall often comes sooner than expected. Alex Emelian compared Lovable’s limits to the simplicity of Wix or Squarespace: “You can make something that looks and feels real, but under the hood, it’s not the same as hand-built code.” This gap between appearance and robustness is, for many, the defining constraint of vibe coding today.

Skills and Mindset Required for Effective “Vibe Coding”

While vibe coding promises to lower the barriers to app creation, those who get the most out of tools like Lovable tend to share a common trait: they already possess a developer's mindset.

“You still need to understand how apps are structured — backend, frontend, database, APIs — otherwise you’re flying blind,” said Hugo Calazans, who has used Lovable to prototype several internal tools. Without that foundational knowledge, even simple modifications can become frustrating.

For many, the skill is less about coding syntax and more about system design. Brandon Crabtree put it succinctly: “If you don’t know what you’re building, no tool can save you. The AI can write code, but it can’t decide your architecture.”

The learning curve, therefore, is not entirely removed — it’s shifted. Isabela Prado described the mental adjustment: “You have to think in prompts, not just in features. It’s like pair programming with someone who doesn’t always understand your context.” This requires a mix of clear communication, iterative testing, and the patience to refine instructions until the AI delivers the intended result.

Some even compare the process to working with a junior developer who is extremely fast but needs constant direction. “The AI is eager and quick, but it will happily run off in the wrong direction if you’re not specific,” noted Victor Tang.

Ultimately, vibe coding rewards those who can bridge product thinking with technical literacy. Samantha Yee framed it as a creative challenge: “You’re not just coding — you’re designing workflows, debugging prompts, and making trade-offs in real time.” That blend of roles can be exhilarating for experienced makers, but daunting for true beginners.

The Expert User as a Translator Between Business and AI

One recurring theme among experienced vibe coders is that the most successful projects have someone acting as an interpreter — not between two human languages, but between business needs and AI-generated code.

Jonah Weingarten described this role as “being the bridge between what the business wants and what the AI can actually build.” In his view, the person in this position must not only grasp the product vision but also translate it into prompts and specifications that the AI can execute.

This translator role often blends product management, UX thinking, and just enough technical understanding to foresee pitfalls. “You need to anticipate where the AI will cut corners or make assumptions,” said Anita Kumar. “If you don’t, you’ll get something that technically works but doesn’t meet the business need.”

Several users liken the task to managing an offshore development team with limited context. Daniel Schmidt explained: “You’re not writing all the code yourself, but you’re constantly clarifying, correcting, and aligning. The AI won’t read your mind — you have to feed it the right level of detail.”

And while this role can be demanding, it’s also where vibe coding shines for those with cross-disciplinary skills. Rachel Lim noted: “If you understand both the customer journey and the basics of app logic, you can move incredibly fast. The AI handles the grunt work, and you focus on the high-value decisions.”

This is why, despite being marketed as a “no-code” revolution, Lovable and similar tools often end up amplifying, rather than replacing, the influence of expert intermediaries who can think both strategically and technically.

The Challenge of Maintenance and Scalability

If building an app with vibe coding feels like a sprint, maintaining it often turns into a marathon. Many early adopters describe a honeymoon phase of rapid progress, followed by a reality check when the time comes to add features, fix bugs, or handle increased usage.

Miguel Arroyo summed it up candidly: “The first week, you feel like a superhero. The second week, you’re chasing bugs you didn’t know existed.” According to several users, the problem with AI-generated code is that it can be fragile, with changes in one area unintentionally breaking others.

Scalability introduces another layer of complexity. “The app works fine with ten users, but try onboarding a hundred and things start to fall apart,” noted Laura Jennings. “Performance tuning is not something the AI handles well yet.”

Part of the challenge is that vibe coding environments often abstract away the underlying architecture, making it harder for non-developers to identify and address bottlenecks. Omar Rahman explained: “You can’t just dive into the infrastructure and tweak things — you’re locked into the AI’s decisions unless you rewrite big chunks manually.”

For teams, this can lead to a dependency loop where even small maintenance tasks require revisiting the AI, with no guarantee of consistent output. “Every time we ask it to fix something, it changes other parts of the code. You’re never sure what you’re going to get back,” said Priya Desai.

In this sense, the scalability issue isn’t just technical — it’s organizational. Without clear ownership of the codebase and a defined process for change management, vibe-coded apps risk becoming disposable prototypes rather than sustainable products.

Unrealistic Expectations and the “Build in Minutes” Marketing Trap

The promise of building fully functional apps “in minutes” is a powerful hook, and one that many say sets users up for disappointment. Marketing slogans often gloss over how much iteration is actually involved. “People come in expecting magic,” said Sophie Grant, who coaches teams adopting AI-assisted development. “They think they’ll describe their idea once, and the app will just appear. In reality, it’s an iterative process, and you need to babysit the AI along the way.”

For non-technical founders, the disconnect can be even more stark. Marcus Lee recalled his first project: “I thought I’d save months of work. In the end, I spent weeks debugging and rewriting because I didn’t know the right way to guide the AI.”

The speed advantage is real, but only in the right context. As Natalie Fox pointed out, “These tools are incredible accelerators if you already know where you’re going. If you don’t, they just help you get lost faster.”

In other words, vibe coding’s marketing promise can be true — but only for users with the skills, clarity, and time to bridge the gap between the AI’s output and a real-world, maintainable product.

The Real Potential and Where Lovable Excels

Despite their limitations, Lovable and the broader vibe coding movement have carved out clear niches where their strengths shine. In scenarios that demand speed, flexibility, and low overhead, particularly internal tools or short-lived campaigns, the technology can be transformative.

Helena Sørensen, a product manager at a logistics firm, described her experience: “We built an internal dashboard in two days. Normally, that would have taken us at least a month with our IT backlog.” The key, she said, was keeping the scope narrow and expectations realistic.

For early-stage startups, the appeal is similar. “If you’re pre-funding and need to show something to investors fast, it’s unbeatable,” noted Andre Mitchell. “You can go from idea to clickable demo over a weekend.”

The ability to iterate rapidly without heavy engineering resources also makes vibe coding valuable in experimental contexts. Clara Nguyen, who runs a digital marketing agency, explained: “We use it to prototype client campaigns. If it works, great — if not, we’ve lost days, not weeks.”

Another sweet spot is educational and training environments, where the focus is on learning and exploration rather than production-grade software. “For teaching non-coders how apps are structured, it’s brilliant,” said Peter Alonzo. “They see instant results and understand the logic without drowning in syntax.”

These success stories suggest that the real power of Lovable lies not in replacing professional development pipelines but in empowering faster exploration, validation, and low-stakes deployment. In the right hands — and for the right jobs — it delivers on its promise.

The Future of Vibe Coding: Beyond the Hype

If the current wave of vibe coding tools feels like the early days of no-code, the future may hinge on how quickly they can mature beyond their prototyping sweet spot. For many users, the next leap isn’t about speed — it’s about reliability, maintainability, and integration with professional workflows.

David Kim, CTO of a fintech startup, believes hybrid models are the way forward: “The AI shouldn’t be the only one driving. I want it to handle the grunt work, but I still need a steering wheel.” In his view, the ideal evolution would blend AI speed with traditional engineering discipline, enabling teams to refine and harden code without losing the rapid iteration advantage.

Others see promise in better collaboration between AI and human developers. “Right now, it’s a one-way street — you prompt, it builds. The future is a conversation where the AI understands the architecture, the business logic, and your constraints,” said Isabella Rossi, a software consultant.

For some, the missing link is a true “version-aware” AI that can manage ongoing projects without unnecessarily rewriting large chunks of code. “I don’t want a fresh app every time I ask for a change. I want the AI to remember my codebase like a teammate,” argued Tomás Villalobos.

Industry observers expect the space to split into specialized offerings: lightweight tools for one-off prototypes, and enterprise-grade platforms designed for long-term, scalable projects. As Rachel Tan, an investor in early-stage dev tools, put it: “The winners will be the ones who stop selling magic and start delivering craftsmanship — but faster.”

In other words, vibe coding’s future may depend less on its ability to dazzle in minutes and more on its capacity to sustain, evolve, and scale over months and years.

I’m Getting Better at Vibe Coding, One Iteration at a Time

I’m stubborn, and I don’t give in to frustration easily. I’ve always worked at the edge between what’s already known and what still needs to be invented, and I’ve learned that mastering something new always requires multiple cycles of iteration, refinement, and validation.

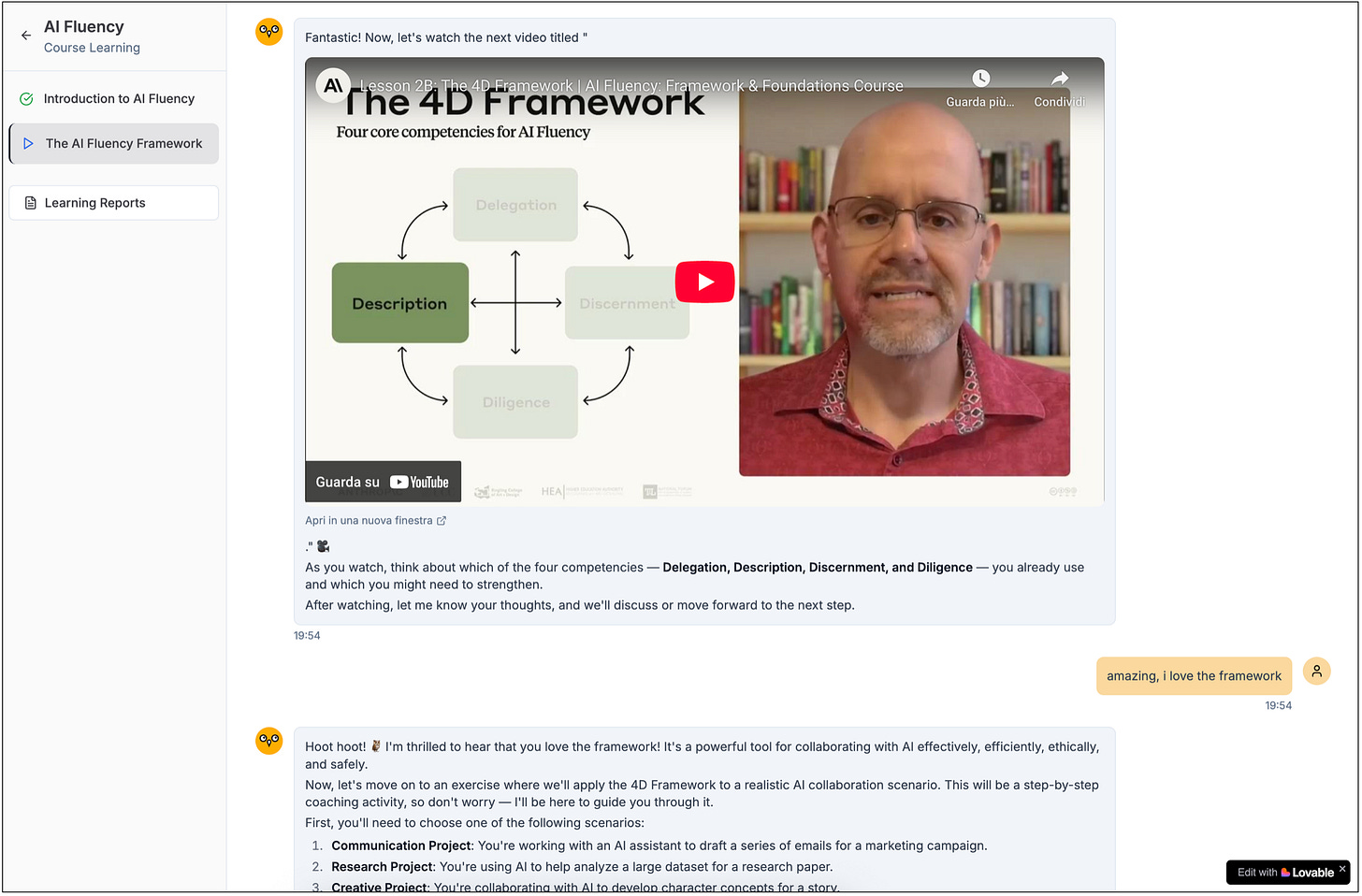

I’m getting better at vibe coding, and after several attempts, I’ve finally built a working prototype of Quibbly, a friendly, wise AI tutor that guides students through learning entirely within a chat, step by step, in a fully interactive way. It combines the warmth of a conversational partner with the structure and precision of expert instructional design, creating a learning experience that’s both personal and effective.

But that’s a story for the next issue of Radical Curiosity 😏

Curated Curiosity

What Are They Talking About? AI Vocabulary Edition

AI is shaping our discourse, but the terms we use often obscure more than they reveal. In this two-part exploration, Hilary Atkisson Normanha unpacks the language of artificial intelligence, exposing the gaps between technical precision and common parlance—a valuable guide for professionals navigating the evolving AI landscape.
