Prompt. Chain. Build. Lessons from Serena and the frontlines of generative AI

A hands-on look at how prompt chaining improves AI output—and how we’re applying it to build Serena, our generative course design tool. Plus: how we’re coding Serena with generative AI.

Ciao,

In this edition of Radical Curiosity, I’m diving into the mechanics of prompt chaining—one of the most valuable techniques for working with large language models. I’ll walk you through how breaking a big task into smaller prompts changed the way I use AI to design structured outputs, like a course syllabus.

You’ll also get a behind-the-scenes look at how we’re building Serena, not just with generative AI as a content partner, but as a coding companion—warts, hallucinations, and all. From choosing the right stack to debugging alongside LLMs, it’s a real-time exploration of what it means to build a product with AI in the loop.

Table of Contents

Prompt Chaining with ChatGPT: how to break down complex tasks into simple steps

From Prototype to Product: how we’re building Serena with Generative AI

Anthropic Education Report: how university students use Claude

Book. Galit Atlas, Emotional Inheritance

Prompt Chaining with ChatGPT: how to break down complex tasks into simple steps

When I first started using large language models to support my work, I quickly discovered that when you need to produce complex outputs, a single prompt is very often not enough. What you need is a chain of prompts—each focused on one task, feeding into the next. Thinking in chains, rather than crafting a single, clever prompt, became the turning point in how I approached LLMs for structured work like designing a course syllabus.

In this article, I’ll share what I learned while building a multi-step prompt workflow for Serena. If this is your first time hearing about the project, Serena is an AI-based platform I’m building in public to help people generate online courses. You can find the first episode of the saga here: Meet Serena. From idea to syllabus in minutes.

From Complexity to Clarity: Why Prompt Chaining Works

Trying to solve a complex task with a single prompt is like asking someone to plan a wedding, write the vows, book the venue, and bake the cake—all at once. Prompt chaining solves this by breaking big tasks into simple, logical steps, where each prompt has a single job. That leads to:

Better outputs: the model is solving smaller, clearer problems.

More control: you can tweak parts without breaking the whole.

Easier debugging: you know exactly where things went wrong.

Reusable components: each prompt can be applied elsewhere.

I write prompts using the technique described in this article: The Art of AI Prompting: Refining Instructions for Precision and Control. When working with prompt chains, I follow three key rules:

Start with the outcome and reverse-engineer the steps. Ask yourself: What do I ultimately need the AI to produce? Then work backward.

Give each prompt a single purpose. If your prompt is doing more than one thing—e.g., summarizing and evaluating and reformatting—split it. You’ll get better results, and you’ll understand what went wrong when something breaks.

Use structured output formats. I always specify output formats using markdown, YAML-style headers, or JSON. This helps me: reuse the output as clean input for the next step, scan and debug results quickly, and avoid “creative” formatting.
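To make the idea concrete, here is a minimal Python sketch of a chain in which each prompt has one job and receives the previous step's output. It is an illustration under stated assumptions, not Serena's actual code: `run_chain`, `fake_llm`, and the two example steps are hypothetical names, and `fake_llm` only stands in for a real model call so the example runs offline.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical type: any function that sends a prompt to a model and returns text.
LLM = Callable[[str], str]


def run_chain(steps: List[Tuple[str, str]], user_input: str, llm: LLM) -> Dict[str, str]:
    """Run the prompts in order; each step receives the previous step's output."""
    outputs: Dict[str, str] = {}
    current = user_input
    for name, template in steps:
        prompt = template.format(input=current)
        current = llm(prompt)
        outputs[name] = current  # keep every intermediate result for debugging
    return outputs


def fake_llm(prompt: str) -> str:
    """Stand-in for a real model call (assumption: swap in your own client)."""
    return f"<answer to: {prompt}>"


# Two single-purpose prompts instead of one prompt that summarizes and evaluates.
steps = [
    ("summarize", "Summarize this text:\n{input}"),
    ("evaluate", "List the weaknesses of this summary:\n{input}"),
]
results = run_chain(steps, "Some long document", fake_llm)
```

Because every intermediate output is stored under its step name, a bad final result can be traced back to the step that produced it.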

The Case: Building a Course Syllabus with a Prompt Chain

I’m sharing a simplified version of a prompt chain I designed to help users generate a course using Serena. If you’re new to instructional design, it’s essential to understand that the course creation process typically starts by defining three key elements:

The learners – Who they are, including their background, needs, and context.

The desired outcomes – What they should know and be able to do by the end of the course.

Their starting point – What they currently know and can do.

Designing a strong learning experience means identifying the gap between the learners’ current state and the desired outcomes, and then building a path to bridge that gap.

The goal of the prompt chain in this article is to help users, regardless of their instructional design experience, write a learner profile, conduct a gap analysis, and turn it into a clear, structured list of well-crafted learning objectives.

Here’s the flow I created:

Learner Profile. Turn a vague description into a structured profile with clear categories: background, demographics, motivations, and challenges.

Current Status. Translate partial survey responses and assumptions into a snapshot of what learners already know, do, and believe—across knowledge, skills, behaviors, tools, and mindset.

Desired Status. Expand the course’s intended transformation into observable learning outcomes, expressed again in the same five dimensions.

Gap Analysis. Identify what’s missing—what the course needs to bridge—without repeating what’s already known.

Learning Objectives. Generate SMART learning goals aligned with Bloom’s Taxonomy, based on the gaps.
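The flow above can also be written down as data: which inputs each step reads. The sketch below is only an illustration. The step keys and the `_notes` labels for raw human input are hypothetical names, and the dependencies follow the inputs described for each step later in this article.

```python
from typing import Dict, List

# Inputs read by each step. Names ending in "_notes" are hypothetical labels
# for raw human input; every other name is the output of an earlier step.
CHAIN: Dict[str, List[str]] = {
    "learner_profile": ["audience_notes"],
    "current_status": ["learner_profile", "survey_notes"],
    "desired_status": ["learner_profile", "current_status", "outcome_notes"],
    "gap_analysis": ["learner_profile", "current_status", "desired_status"],
    "learning_objectives": ["gap_analysis"],
}


def raw_inputs_needed(chain: Dict[str, List[str]]) -> List[str]:
    """Return the human-supplied inputs, or raise if a step reads a later step's output."""
    produced = set()
    raw_inputs: List[str] = []
    for step, needs in chain.items():
        for name in needs:
            if name in chain:
                if name not in produced:
                    raise ValueError(f"{step} reads {name} before it exists")
            elif name not in raw_inputs:
                raw_inputs.append(name)
        produced.add(step)
    return raw_inputs


print(raw_inputs_needed(CHAIN))
# ['audience_notes', 'survey_notes', 'outcome_notes']
```

Seen this way, the whole chain needs only three pieces of human input; everything else is generated and passed along.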

The Chain in Action: How to Design (and Use) a Prompt Chain

Step 1: Learner Profile — Defining the Starting Point

For this first step in the chain, I needed the model to generate a structured learner profile from a vague or partial description. It might seem odd, but in practice, you’re often working with exactly that—an ambiguous and incomplete idea of who the learners are. So I designed a prompt with three goals:

Infer confidently when the input is clear.

Ask for clarification only when needed.

Output a compact, reusable structure optimized for downstream prompts.

You are an expert in instructional design and learner profiling. Your task is to transform a vague or partial user description into a compact, structured learner profile.

The output must be formatted in valid YAML and follow this structure exactly:

Professional_Background:
  Industry:
  Role:
  Seniority:
  Work_Context:
Demographics:
  Age_Range:
  Location:
  Education_Level:
Motivations:
  - [bullet point]
Challenges:
  - [bullet point]

Guidelines:

- Detect the input language and respond in the same language.

- If the input is clear, infer confidently and complete the profile.

- If the input is ambiguous, ask one short clarification question.

- Use a neutral, compact tone.

- Ensure the output is optimized for use in LLM context blocks.

- Do not include any text outside the YAML block.

Input:

[Paste the user description here]

And here’s a sample input I used to test it:

Digital Product Managers (mid to senior) working on B2B and B2C products, curious about AI but with little practical experience. They all speak English at least at B2 level.

The output was clear, compact, and—most importantly—usable by the next prompt in the chain:

Professional_Background:
  Industry: Digital Products
  Role: Product Manager
  Seniority: Mid to Senior
  Work_Context: B2B and B2C
Demographics:
  Age_Range: 30-45
  Location: Global
  Education_Level: Bachelor's or higher
Motivations:
  - Stay competitive by exploring emerging AI tools
  - Improve product decision-making through AI-enhanced workflows
  - Bridge the knowledge gap between AI capabilities and product strategy
Challenges:
  - Limited hands-on experience with AI tools
  - Difficulty identifying practical applications of AI in their workflow
  - Overwhelmed by the fast pace of AI tool evolution
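Since the Step 1 prompt allows two kinds of reply (a YAML profile or a single clarification question), whatever code consumes the reply has to tell them apart before passing anything downstream. Here is a rough, standard-library-only sketch of that check. `classify_response` is a hypothetical helper, and it assumes nested keys are indented, as in standard YAML, so that only the four section names appear unindented.

```python
REQUIRED_SECTIONS = ("Professional_Background", "Demographics", "Motivations", "Challenges")


def classify_response(text: str) -> str:
    """Return 'profile', 'question', or 'invalid' for a Step 1 reply."""
    lines = [line.rstrip() for line in text.splitlines() if line.strip()]
    # Top-level keys are unindented lines that end with a colon.
    top_level = {
        line[:-1] for line in lines if line.endswith(":") and not line[0].isspace()
    }
    if all(section in top_level for section in REQUIRED_SECTIONS):
        return "profile"
    if len(lines) == 1 and lines[0].endswith("?"):
        return "question"  # the prompt allows one short clarification question
    return "invalid"


reply = """Professional_Background:
  Industry: Digital Products
Demographics:
  Location: Global
Motivations:
  - Stay competitive by exploring emerging AI tools
Challenges:
  - Limited hands-on experience with AI tools
"""
print(classify_response(reply))  # profile
print(classify_response("Which industry do the learners work in?"))  # question
```

A reply classified as a question can be shown to the user, while an invalid reply is a signal to retry the step rather than feed broken input to Step 2.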

Step 2: Current Status — Understanding Where Learners Are Now

Once I had a structured learner profile, the next step was to determine what these learners already knew, could do, and believed about the course topic—specifically, AI and prompt engineering. So I created a prompt that translated this “current state” into five key dimensions: Knowledge, Skills, Behaviors, Tools, and Mindset.

You are an Instructional Designer working on a course tailored to the learner profile shown below. Your task is to define the Current State of learners as part of a 4-step gap analysis process.

Based on the learner profile and a vague description of their current state, infer and expand their Current State using these dimensions: Knowledge, Skills, Behaviors, Tools, Mindset.

Use YAML-style label headers, be concise, and do not repeat details already stated in the learner profile.

The best way to understand your learners’ current knowledge of a topic is simple: ask them. And yes, you can ask ChatGPT to help you generate a questionnaire to guide this assessment. If you’re designing a tailored course for a company, this step is essential: it will directly impact the success of your training. If you’re creating a course for a platform like Udemy or a learning community, it’s just as important. In that case, try to identify a representative sample of your potential audience and run the assessment with them before moving forward.

For this example, I asked ChatGPT to generate a set of credible answers to simulate learner responses, which I then used as input for the prompt.

“I've studied the topic on my own and have a pretty solid general understanding.”

“I use these tools every day, either for work tasks or personal projects.”

“I’ve played around with them a few times—mostly just out of curiosity.”

“I work on integrating or developing them as part of professional products and solutions.”

And here’s how the model cleaned it up:

Knowledge:

Understand product lifecycle, customer needs, and business metrics

Basic awareness of AI trends, but limited understanding of technical concepts

Minimal exposure to concepts like prompt engineering or LLM capabilities

Skills:

Strong in prioritization, stakeholder communication, and product roadmapping

Weak in identifying use cases for AI within product processes

No practical experience integrating AI into product discovery or delivery

Behaviors:

Rely on traditional tools and methods for decision-making and research

Occasionally read about AI developments but rarely experiment with tools

Default to delegating technical AI tasks to engineers or data teams

Tools:

Proficient with PM tools like Jira, Confluence, Figma, and analytics platforms

Rarely use AI tools such as ChatGPT, Claude, or Midjourney

No systematic approach to testing or evaluating AI tools

Mindset:

Curious and open to learning but unsure where to start

Slightly skeptical about the immediate value of AI for product work

Fear of falling behind peers or competitors more advanced in AI adoption
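Step 2 is the first point where one prompt consumes another prompt's output. One plausible way to assemble that input is to append each piece of context under its own label, so the model can tell the learner profile apart from the survey answers. The sketch below is an assumption about how this could be done. `build_prompt` and the `###` label style are illustrative choices, not part of the original prompts.

```python
from typing import Dict


def build_prompt(instructions: str, context: Dict[str, str]) -> str:
    """Append each context item under a labelled header, in the order given."""
    parts = [instructions.strip()]
    for label, body in context.items():
        parts.append(f"### {label}\n{body.strip()}")
    return "\n\n".join(parts)


prompt = build_prompt(
    "Define the Current State of learners using these dimensions: "
    "Knowledge, Skills, Behaviors, Tools, Mindset.",
    {
        "Learner profile": "Professional_Background:\n  Role: Product Manager",
        "Survey answers": "I've played around with them a few times.",
    },
)
print(prompt)
```

The same helper works for Steps 3 and 4, which simply receive more labelled blocks.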

Step 3: Desired Status — Defining the Learning Destination

Now that I had a clear snapshot of where my learners were starting from, it was time to define where they needed to go. For this step, I used the same five dimensions to structure the output: Knowledge, Skills, Behaviors, Tools, and Mindset.

I asked the model to imagine the transformation needed for learners to achieve a set of goals I described in plain language. In real life, clients often have only a vague idea of what learners should accomplish—something like, “I just want my product managers to be more productive and start using generative AI in their day-to-day work.” The prompt’s job is to turn that raw description into structured outcomes.

You are an Instructional Designer working on the third step of a 4-step gap analysis.

Your task: Based on the learner profile, their current status, and a description of the desired outcome, expand and structure the Desired Status learners should achieve by the end of the course.

Use YAML-style headers (Knowledge, Skills, Behaviors, Tools, Mindset).

Be aspirational but realistic.

Focus on observable, measurable outcomes.

Avoid repeating profile or current status info.

Here’s the input I used, deliberately written in rough English:

At the end of the course the students will be able to create the prompts to streamline their product management activities. They will understand prompt engineering and the different techniques, like role based prompting and iterative prompting to create new prompts. The will do a series of exercise to create new prompts in the following areas:

- competitive analysis

- customer research

- product requirement document

- user stories

- prioritization

And here’s how the model cleaned it up:

Knowledge:

Understand core principles of prompt engineering and its relevance to product management

Recognize and differentiate between techniques such as role-based, iterative, and chain-of-thought prompting

Know when and how to apply prompting techniques to specific PM workflows (e.g., research, documentation, prioritization)

Skills:

Create effective, goal-oriented prompts to support tasks like competitive analysis and customer research

Use prompt iteration to refine outputs and align results with PM needs

Evaluate the quality and relevance of AI-generated outputs and adjust prompts accordingly

Integrate prompt engineering into writing PRDs, user stories, and backlog prioritization

Behaviors:

Regularly experiment with AI tools to improve efficiency in core PM tasks

Proactively test and adapt prompts to different contexts and product scenarios

Collaborate with team members using AI-assisted workflows

Tools:

Confident use of AI tools like ChatGPT or Claude as part of daily PM toolkit

Maintain a personal prompt library or workflow templates for repeated use

Document and share prompting best practices with peers or cross-functional teams

Mindset:

View prompt engineering as a valuable, ongoing skill for enhancing product decision-making

Embrace a test-and-learn approach to working with AI

Confident in navigating and adapting to evolving AI tools and methods
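Because Steps 2 and 3 share the same five dimensions, a small parser can check that a reply covers all of them before it moves on to the gap analysis. This is a minimal sketch that assumes the reply uses one header per dimension followed by one item per line, with or without a leading dash. `parse_sections` and `missing_dimensions` are hypothetical helper names.

```python
from typing import Dict, List

DIMENSIONS = ("Knowledge", "Skills", "Behaviors", "Tools", "Mindset")


def parse_sections(text: str) -> Dict[str, List[str]]:
    """Group the lines of a reply under the dimension header that precedes them."""
    sections: Dict[str, List[str]] = {}
    current = None
    for raw in text.splitlines():
        line = raw.strip()
        if not line:
            continue
        if line.endswith(":") and line[:-1] in DIMENSIONS:
            current = line[:-1]
            sections[current] = []
        elif current is not None:
            sections[current].append(line.lstrip("- ").strip())
    return sections


def missing_dimensions(sections: Dict[str, List[str]]) -> List[str]:
    """Return the dimensions that are absent or have no items."""
    return [name for name in DIMENSIONS if not sections.get(name)]


reply = """Knowledge:

Understand core principles of prompt engineering

Skills:

- Use prompt iteration to refine outputs
"""
sections = parse_sections(reply)
print(missing_dimensions(sections))  # ['Behaviors', 'Tools', 'Mindset']
```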

Step 4: Gap Analysis — Mapping the Delta That Drives the Design

With both the current status and the desired status clearly articulated, the next logical step is to identify what is missing. For this prompt, my goal was to automatically extract the actionable gaps between the learners’ current capabilities and their aspirational state. In other words: what do they not know, not do, not believe—yet?

You are an Instructional Designer completing the final step of a 4-step gap analysis.

Your task: Based on the structured Current Status and Desired Status, identify the key gaps the course must address. Focus on actionable differences.

Use YAML-style headers (Knowledge, Skills, Behaviors, Tools, Mindset).

Only include relevant gaps.

Don’t copy entire sections from earlier outputs.

Keep it tight.

I provided the learner profile, current status, and desired status as input, and ChatGPT generated the following output:

Knowledge:

Limited understanding of prompt engineering principles

Unfamiliar with prompting techniques like role-based and iterative prompting

Lack of contextual knowledge on how prompting supports PM tasks

Skills:

Inability to craft effective prompts tailored to product management workflows

No structured approach to refining and evaluating prompts

Difficulty integrating AI outputs into standard PM documentation and decision-making

Behaviors:

Passive engagement with AI; lack of consistent experimentation

Rarely apply AI tools to real product scenarios

Limited collaboration around AI-assisted practices

Tools:

Underutilization of LLMs and AI assistants in daily product work

No established prompt libraries or reusable templates

Lack of familiarity with prompt testing and evaluation features

Mindset:

Uncertainty about the reliability and value of AI in PM

Hesitation to adopt AI as a core productivity tool

Need to build confidence in using AI iteratively and independently
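The prompt asks the model not to copy earlier outputs, and that instruction is easy to verify mechanically. The sketch below flags gap items that appear verbatim, ignoring case and list markers, in the Current Status or Desired Status text. It is a rough check under simple assumptions (one item per line, headers ending in a colon), and `copied_lines` is a hypothetical helper.

```python
from typing import List


def normalize(line: str) -> str:
    """Lowercase, drop a leading list marker, and collapse whitespace."""
    return " ".join(line.strip().lstrip("-").lower().split())


def copied_lines(gap_text: str, *earlier_outputs: str) -> List[str]:
    """Return gap items that also appear in any earlier output."""
    seen = {
        normalize(line)
        for text in earlier_outputs
        for line in text.splitlines()
        if line.strip()
    }
    copied = []
    for line in gap_text.splitlines():
        stripped = line.strip()
        if not stripped or stripped.endswith(":"):
            continue  # skip blanks and section headers such as "Tools:"
        if normalize(stripped) in seen:
            copied.append(stripped)
    return copied


current = "Tools:\nRarely use AI tools such as ChatGPT, Claude, or Midjourney\n"
gap = (
    "Tools:\n"
    "- Rarely use AI tools such as ChatGPT, Claude, or Midjourney\n"
    "No established prompt libraries or reusable templates\n"
)
print(copied_lines(gap, current))
# ['- Rarely use AI tools such as ChatGPT, Claude, or Midjourney']
```

Exact matching will miss paraphrased repetition, so this only catches the most obvious copies.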

Step 5: Learning Objectives — Turning Gaps into Goals

Now that the learning gaps are clear, the next step is to transform them into concrete, assessable learning objectives. This is where the chain shifts from diagnostic to generative. Up to this point, I’d been identifying and organizing information. Now, I needed to create something that would guide the course content, activities, and assessments.

My goal for this step was to generate SMART objectives (Specific, Measurable, Achievable, Relevant, and Time-bound) based on Bloom’s Taxonomy—so each goal would reflect not only what the learner needed to achieve, but also how deeply they needed to engage with the material.

Here’s a possible prompt:

You are an expert instructional designer. Your task is to generate SMART learning objectives aligned with Bloom’s Taxonomy, based on a given gap analysis.

Organize the output in YAML format using the following top-level categories: Knowledge, Skills, Behaviors, Tools, Mindset.

Under each category, list individual learning objectives as bullet points.

Each learning objective must:

- Start with the appropriate [Bloom’s Taxonomy level] in square brackets.

- Focus on one observable and assessable outcome only.

⚠️ Do not combine multiple outcomes or actions in one statement.

Be specific, measurable, achievable, and relevant to the learner’s context and gap.

Exclude time constraints. These goals will later inform the course structure and schedule.

Use clear instructional language suitable for inclusion in a course syllabus.

Avoid vague phrasing (e.g., do not use "By the end of the course..." or similar expressions).

These are the learning objectives generated as a result of the chain:

Knowledge:

[Understand] Describe the principles of prompt engineering in the context of product management.

[Recognize] Identify key prompting techniques such as role-based, iterative, and chain-of-thought prompting.

[Differentiate] Distinguish between appropriate use cases for various prompting strategies in product workflows.

Skills:

[Apply] Create effective prompts to support competitive analysis using AI tools.

[Apply] Generate prompts to conduct structured customer research with LLMs.

[Apply] Draft AI-assisted product requirement documents using prompt templates.

[Apply] Write user stories by formulating and refining relevant prompts.

[Apply] Create prioritization matrices or decisions using prompt-based workflows.

[Evaluate] Assess the quality of AI-generated outputs and revise prompts accordingly.

[Develop] Build a reusable set of prompts tailored to recurring product management tasks.

Behaviors:

[Demonstrate] Use AI tools consistently in real product scenarios through structured prompting exercises.

[Experiment] Test and adapt different prompting techniques to improve workflow efficiency.

[Engage] Collaborate with peers to refine and share prompt strategies for product use cases.

Tools:

[Utilize] Operate AI tools such as ChatGPT to complete defined product management tasks.

[Organize] Maintain a personal prompt library for frequent use cases in product workflows.

[Implement] Use built-in prompt testing and evaluation features to refine outputs.

Mindset:

[Adopt] Show a proactive attitude toward experimenting with AI to enhance decision-making.

[Value] Acknowledge the role of AI tools as part of the product manager's toolkit.

[Build] Demonstrate confidence in independently integrating AI solutions into daily workflows.
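A final check worth automating is whether the bracketed tag on each objective really is a Bloom level. The sketch below assumes that "Bloom's Taxonomy level" means one of the six levels of the revised cognitive taxonomy (Remember, Understand, Apply, Analyze, Evaluate, Create). That reading is an assumption, and `find_problem` is a hypothetical helper.

```python
import re
from typing import Optional

# Assumption: the six levels of the revised cognitive-domain taxonomy.
BLOOM_LEVELS = {"Remember", "Understand", "Apply", "Analyze", "Evaluate", "Create"}
OBJECTIVE = re.compile(r"^\[(?P<tag>[^\]]+)\]\s+(?P<statement>\S.*)$")


def find_problem(line: str) -> Optional[str]:
    """Return a short description of what is wrong with an objective, or None."""
    text = line.strip().lstrip("-").strip()
    match = OBJECTIVE.match(text)
    if match is None:
        return "missing [level] tag"
    tag = match.group("tag").strip()
    if tag not in BLOOM_LEVELS:
        return f"'{tag}' is not a Bloom level"
    return None


objectives = [
    "[Apply] Create effective prompts to support competitive analysis using AI tools.",
    "[Recognize] Identify key prompting techniques such as role-based prompting.",
    "[Evaluate] Assess the quality of AI-generated outputs and revise prompts accordingly.",
]
for objective in objectives:
    print(find_problem(objective))
# None
# 'Recognize' is not a Bloom level
# None
```

Under that assumption, tags such as [Recognize] or [Differentiate] in the output above are action verbs rather than taxonomy levels, so the prompt may need a further instruction listing the allowed levels.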

Once you have a complete list of learning objectives, you can begin organizing the course. For example, you might break the objectives down into smaller, more manageable parts, group them into meaningful instructional themes, or structure them according to specific design principles. But this is another — and believe me, a more complex — chain of prompts.

Conclusion: Don’t Just Prompt—Design

Working through this chain of prompts to design a course syllabus didn’t just change how I use large language models. It changed how I think about collaboration with AI.

What I learned is that good prompting isn’t about clever phrasing—it’s about clear thinking. It’s about knowing what you want, breaking it down, and giving the AI a fair shot at helping you get there. The more structured my thinking became, the more valuable and reliable the model became.

If you’re working with AI and find yourself hitting limits, I encourage you to do what I did: stop trying to force everything into one prompt. Instead, think in chains. Design each prompt like it’s part of a conversation—with a purpose, an input, and a clear output.

From Prototype to Product: how we’re building Serena with Generative AI

When we started working on Serena, we knew we had a significant technology risk to mitigate. Could generative AI be used to create a structured online course? And if so, how should the workflow be engineered to make that process reliable and repeatable? What happens when the course topic falls outside the domain of a general-purpose LLM like ChatGPT? How do we manage the underlying knowledge base, then? Like most early-stage projects, we began with a proof of concept. Quick, rough, and far from pretty. But it worked well enough to prove that the core idea could work.

So, we decided to build a more refined MVP with self-service onboarding. That’s when we hit the wall every founder knows: the gap between what you want to develop and what your resources allow. We mapped out the essential steps for designing a solid syllabus. To build that properly, I imagined the kind of agile team I'm used to managing: four or five developers, a dedicated designer, and maybe even a QA—a dream setup. In reality, I had a modest budget — enough for about 20 days from a front-end developer and a few days from a UX designer. Not enough to go from a scrappy prototype to a product that looked and felt polished.

Coding with Vibes (and Constraints)

“Vibe coding” — spinning up features quickly using AI tools and prompt-first thinking — is a seductive idea. Tools like Cursor and Replit are making it increasingly viable. But let’s be honest: we’re not there yet when it comes to full-stack complexity.

That said, the productivity gains are real — if you know how to work with the tools. That’s where Enzo Augieri came in. An old friend of mine and co-founder from my first startup in 1999, Enzo brings nearly four decades of coding experience and a relentless curiosity for new tools. He was the perfect partner to push Replit to its limits.

Two key lessons emerged as we built with generative AI: let it choose the tools, and don’t expect it to drive without you at the wheel.

LLMs are most fluent in the technologies they see most often. When we let the model pick the stack — in our case, React — things went smoothly. But when we tried switching to Vue and Quasar, which Enzo personally preferred, everything got harder: vague suggestions, hallucinated components, frustrating debugging. LLMs don’t optimize for elegance or preference — they follow the statistical path of least resistance. Fighting that current cost us time, so we leaned in.

Still, even with the “right” stack, the AI isn’t magic. When a basic logout feature broke, it looped through vague fixes. Replit’s debugger couldn’t help. It took Enzo digging into the browser console to find the real issue and guide the AI with the proper context to get it fixed. AI can accelerate development. But it needs an experienced hand to steer, debug, and decide when to trust — and when to override.

Redefining the Startup Org Chart

For startups, there’s a real opportunity on the table: increase development productivity, build and iterate faster, and test ideas more intelligently. With the proper setup, two experienced developers can accomplish what a typical agile team would. But don’t buy into the narrative that anyone can now build complex applications solo, just by prompting.

“Vibe coding” makes for great social media content, but real products — the kind that need to be secure, scalable, and maintainable — require more. If you’re building a business that’s meant to last, you need to own your tech stack. You need control over your core assets. And you need enough technical understanding — whether directly or through trusted collaborators — to make informed decisions.

That said, after this hands-on experience, I’m reevaluating Serena’s financial model — reducing headcount and integrating AI technologies, from tools to autonomous agents, directly into the org chart to streamline operations and keep the budget as lean as possible.

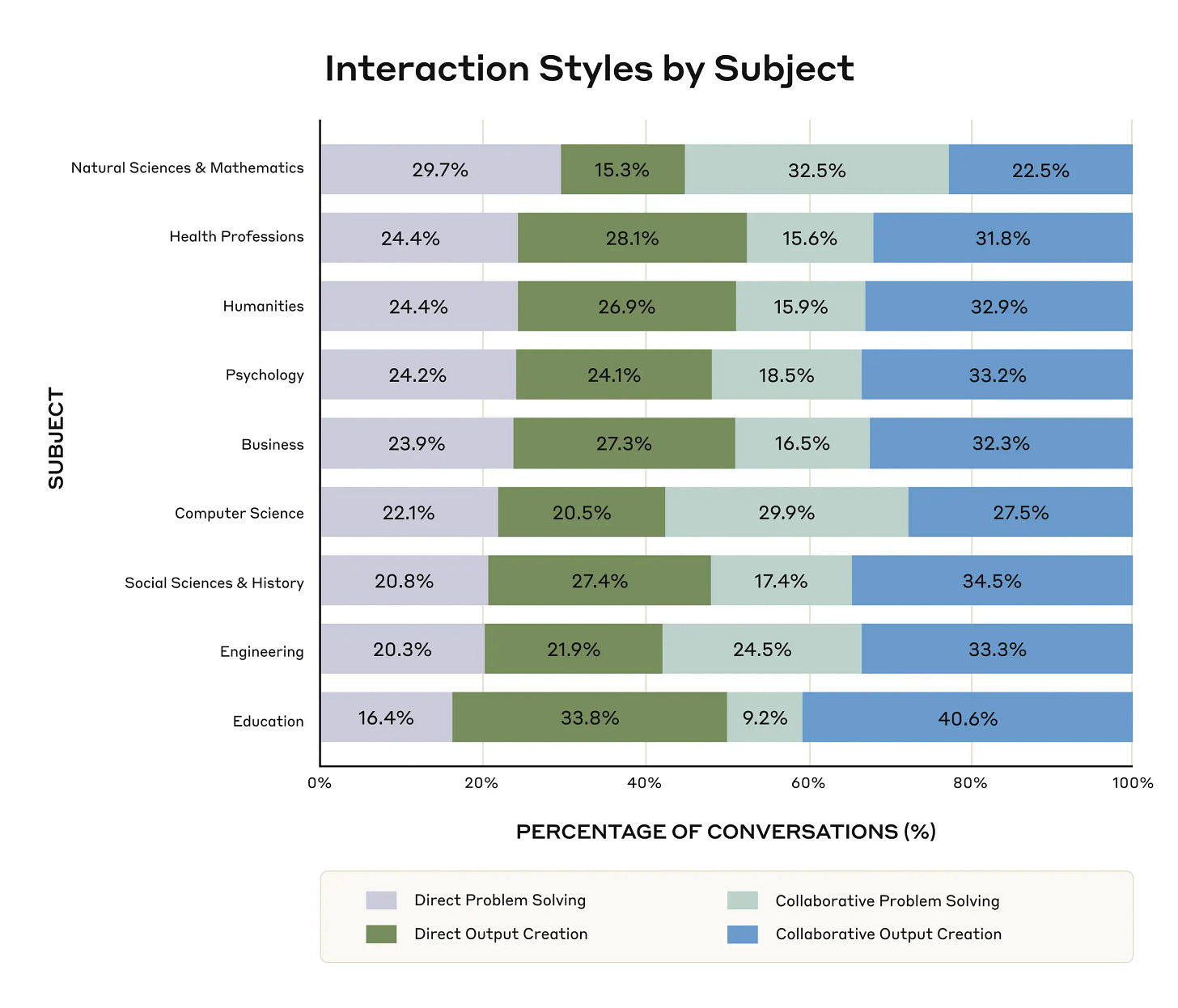

Anthropic Education Report: how university students use Claude

Anthropic released an interesting report based on a large-scale analysis of anonymized student conversations with Claude, and it’s full of insights for anyone working in education, EdTech, or AI. Some key takeaways:

Students are using AI strategically, not just to shortcut assignments.

Claude is often treated like a study partner or mentor—helping clarify complex ideas, test understanding, and spark deeper thinking.

Many students say using AI helps them feel more confident and capable, especially in independent learning.

The report also raises important questions for educators: how do we design learning experiences that embrace this new behavior instead of resisting it?

Galit Atlas, Emotional Inheritance

Emotional Inheritance is one of those books that stays with you long after you've turned the last page. I read it in just a couple of days, not because it was light, but because it was so deeply compelling I couldn’t put it down.

Galit Atlas writes with the clarity of a scientist and the soul of a storyteller. Through her own experiences and those of her patients, she explores how trauma, silence, and emotional legacies are passed through generations—sometimes unknowingly, but never without impact.

This book is as insightful as it is intimate. I can’t recommend it enough:

Buy the English edition: Emotional Inheritance (Amazon)

Buy the Italian edition: L’eredità emotiva (Amazon)

If this email was forwarded to you, or if you came here from social media, you can sign up to receive an article like this every Sunday.

Thanks for reading this episode of my newsletter. I hope you found it helpful. If you think my sketchbook might interest someone else, I’d appreciate it if you shared it on social media and forwarded it to your friends and colleagues.

Nicola
