The Cycle of Deliberate Collaboration with Artificial Intelligence

A practical model to help managers integrate AI into team processes — moving beyond prompts toward a deliberate framework of delegation, instruction, evaluation, and responsibility.

Ciao,

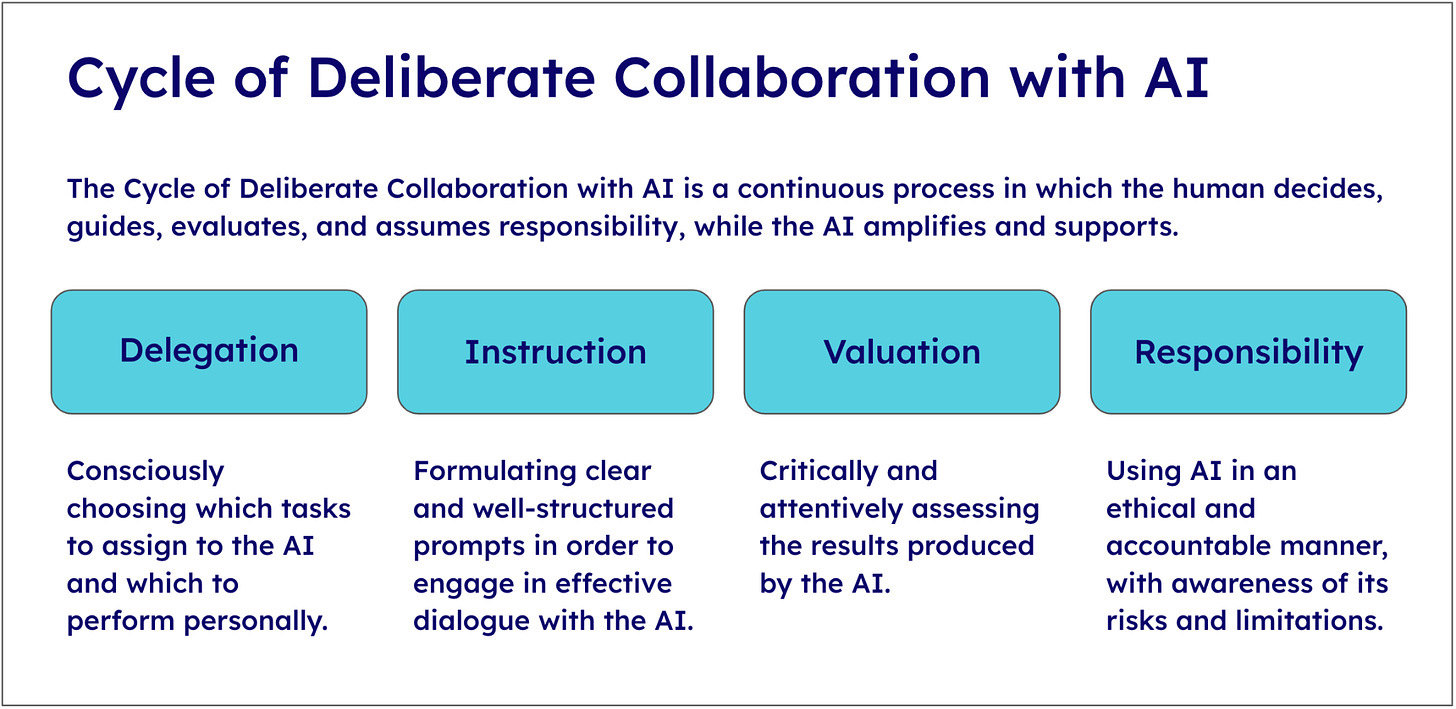

in this issue of Radical Curiosity, I introduce a model I have developed to help managers bring artificial intelligence into their team’s processes: the Cycle of Deliberate Collaboration with AI. The goal is to provide a clear framework for integrating AI in a deliberate way, balancing automation with human responsibility.

The model unfolds in four phases — delegation, instruction, evaluation, and responsibility — and is designed as a practical guide for those who want to turn AI into a true work ally rather than a source of complexity. It offers a path for managers to move beyond the technical layer of prompt engineering and embrace a structured, intentional approach to human–machine collaboration.

Nicola ❤️

Table of Contents

Understanding AI - The Cycle of Deliberate Collaboration with AI

Curated Curiosity

How Americans View AI and Its Impact on People and Society

Two Books, One Strategic Ecosystem

Understanding AI

The Cycle of Deliberate Collaboration with AI

Much of today’s public discussion around generative AI focuses on prompt engineering—the ability to craft effective commands that elicit high-quality responses from large language models. This is an extraordinarily reductive approach, as it treats AI as a mere engine: it receives a well-structured input and produces a predictable output, overlooking the broader dimension of collaboration between humans and machines.

A deliberate use of AI cannot be reduced to the art of the prompt, just as writing is not merely a matter of choosing the most effective words. It requires method, critical thinking, and—above all—a reflection on the role we intend to assign to technology within our processes.

To navigate this terrain, it is helpful to think of human–AI collaboration as a cycle articulated in four phases: delegation, instruction, evaluation, and responsibility. My model draws inspiration from the AI Fluency framework and the 4Ds—delegation, description, discernment, and diligence—proposed by Joseph Feller (University College Cork) and Rick Dakan (Ringling College).

The Four Steps of Collaboration

To transform artificial intelligence into an ally—rather than a mere provider of automated answers—it must be embedded within a structured cycle.

The four steps of the Cycle of Deliberate Collaboration with AI are as follows:

Delegation. The starting point lies in defining which tasks can be entrusted to the machine and which should remain the prerogative of humans. To delegate does not mean to abdicate, but rather to recognize that AI can perform certain functions more efficiently or at a larger scale, thereby freeing up resources for higher-value activities.

Instruction. Once the task has been identified, it must be translated into a precise assignment. The quality of the output largely depends on the clarity of the input: formulating goals, specifying constraints, and indicating context. This is the essence of prompt engineering.

Evaluation. No AI-generated output should be considered final without critical review. The system may produce errors, distortions, or plausible but unfounded responses. A moment of assessment is therefore essential, in which the human verifies coherence, reliability, and relevance to the intended objectives.

Responsibility. AI is not an autonomous agent, but a tool. Final decisions, operational choices, and their consequences always rest with the human. It is therefore necessary to consider legal and compliance constraints, take responsibility, and ensure transparency.
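The Instruction step names three ingredients: goals, constraints, and context. A minimal sketch, assuming nothing beyond those three, is to keep them as explicit fields so that none is silently dropped when a task is handed to a model. The `Instruction` class and its field names are illustrative assumptions, not a specific framework.

```python
from dataclasses import dataclass, field


@dataclass
class Instruction:
    """Hypothetical container for the three ingredients of a precise assignment."""
    goal: str
    context: str
    constraints: list[str] = field(default_factory=list)

    def to_prompt(self) -> str:
        # Assemble the parts in a fixed order so every run sends the same structure.
        lines = [f"Goal: {self.goal}", f"Context: {self.context}"]
        if self.constraints:
            lines.append("Constraints:")
            lines.extend(f"- {c}" for c in self.constraints)
        return "\n".join(lines)


task = Instruction(
    goal="Summarize the attached sales call in five bullet points.",
    context="The summary is read by account managers before the follow-up call.",
    constraints=["Use plain English", "Do not include pricing details"],
)
print(task.to_prompt())
```

Keeping the fields separate makes it easy to spot, during evaluation, which ingredient was missing when an output goes wrong.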

This cycle is an iterative process. Each loop allows for refining delegation, improving instruction, sharpening evaluation, and reinforcing responsibility. It is precisely through this continuous iteration that collaboration with AI becomes truly effective.

Designing Delegation

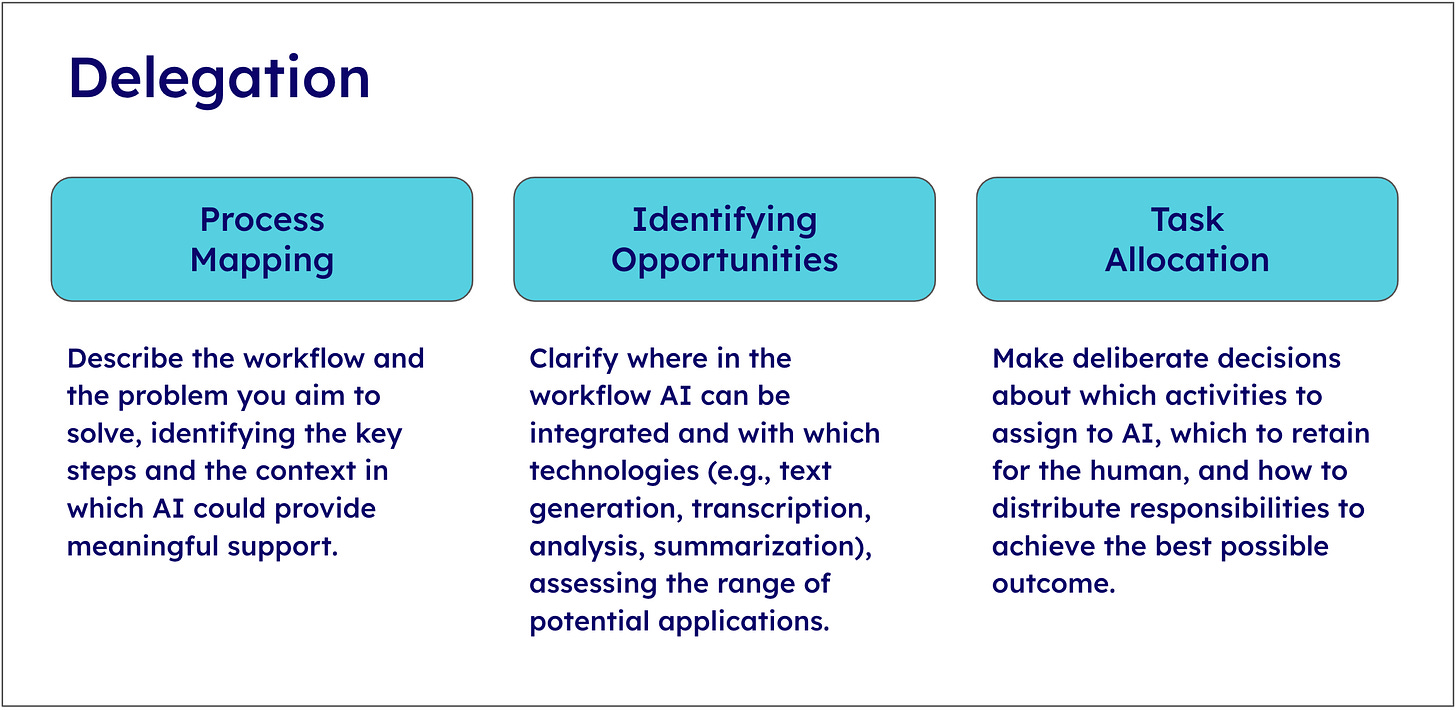

To delegate means acknowledging that not everything must be done by us, but also that not everything can be entrusted to the machine.

The delegation process unfolds in three steps. The first step is process mapping: observing how the workflow is structured, identifying the most time-consuming steps, the repetitive ones, or those where the computational breadth of AI can make a tangible difference. At the same time, it is crucial to isolate the stages where value depends on human sensitivity, contextual awareness, or judgment.

Next comes the identification of opportunities. Not all activities offer the same potential for automation: some can be accelerated, others expanded, and others still transformed. The boundary between what can be delegated and what is best left to humans is not fixed—it shifts as technology evolves and as we learn to use it more deliberately. For example, we can now delegate, without supervision, the transcription, summarization, and analysis of a sales call, while the creation of content for social media still requires careful human oversight.

The final step is task allocation: making deliberate decisions about how to distribute responsibilities between humans and machines. This means not only assigning specific tasks to AI based on its strengths in speed, scale, or pattern recognition, but also determining where human input is essential for judgment, creativity, or ethical considerations. When done well, this balance allows AI to act as a force multiplier, while humans retain control over critical decisions and the overall direction of the process.
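The three delegation steps can be sketched as a small rule set applied to the output of process mapping. The task attributes (`repetitive`, `needs_judgment`, `error_is_costly`) and the three-way split are illustrative assumptions rather than part of the model; a real team would use whatever criteria its own mapping surfaces, and would revisit the rules as the boundary shifts.

```python
from dataclasses import dataclass
from enum import Enum


class Owner(Enum):
    AI = "AI, no supervision"
    AI_WITH_REVIEW = "AI draft, human review"
    HUMAN = "human"


@dataclass
class Task:
    name: str
    repetitive: bool        # time-consuming, repeatable step found during mapping
    needs_judgment: bool    # value depends on sensitivity, context, or ethics
    error_is_costly: bool   # a mistake would reach clients or decisions unchecked


def allocate(task: Task) -> Owner:
    # Judgment-heavy work stays with people, whatever the speed gain.
    if task.needs_judgment:
        return Owner.HUMAN
    # Repetitive, low-risk work can be fully delegated.
    if task.repetitive and not task.error_is_costly:
        return Owner.AI
    # Everything else: AI accelerates, a human signs off.
    return Owner.AI_WITH_REVIEW


# Hypothetical tasks, echoing the examples in the text.
for t in [
    Task("sales call transcription and summary", True, False, False),
    Task("social media post", False, False, True),
    Task("brand positioning decision", False, True, True),
]:
    print(f"{t.name}: {allocate(t).value}")
```

The order of the checks encodes the priority: judgment is tested first, so no efficiency argument can move a judgment-heavy task to the machine.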

Providing Instructions: the Prompt Canvas

If delegation defines what is entrusted to AI, the next phase—providing instructions—determines how the machine will interpret the task. This is where much of the output quality is determined. I’ve written extensively on this topic in the previous issue of Radical Curiosity. Among the various approaches, the framework I’ve chosen to adopt in my own work—and the one I recommend—is the Prompt Canvas, which in my view offers two significant advantages. First, it serves as a design grid, ensuring that no relevant variable is overlooked and transforming a vague instruction into a structured prompt. Second, it educates the user to think systematically.

You can explore the Prompt Canvas in more detail here: Designing Better Prompts: A Practical Introduction to the Prompt Canvas.

Evaluation: The Role of Repeatability

The purpose of the evaluation phase is clear: to ensure that the outputs generated by AI are consistent, repeatable, and reliable. A single good response is not enough—for a system to be truly useful, it must produce stable results over time and across different contexts.

The evaluation process varies depending on the specific tool being used. Personally, I distinguish between three scenarios:

Built-in. I’ve chosen to use AI-powered features embedded in a tool, for example the automatic transcription of calls provided by most videoconferencing platforms. In this case, I have limited control over how the task is performed, but I must verify that the results remain consistent over time, without unpredictable fluctuations.

Collaborative. In this scenario, I interact with AI via a conversational assistant, which means I must systematically check that the prompts used yield coherent results even when different users—with varying backgrounds and interaction styles—employ them.

Operational. This third scenario involves delegating one or more steps of a workflow to AI. For instance, I might take a customer support ticket and ask one or more agents to classify it, define its priority, and perhaps draft a response to be reviewed. In this case, I need to periodically verify—possibly through automated testing—that the workflow functions correctly, that the prompts behave consistently, and that the AI introduces no failure points.

Evaluation, therefore, is not just a critical review of a single output, but an ongoing practice of quality assurance. In this sense, it resembles the concept of testing in software engineering: its purpose is to ensure that the system meets minimum reliability standards and that humans can trust its stability over time.
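Following the testing analogy, the periodic check in the Operational scenario can be sketched as an automated repeatability test: send the same input several times and measure how often the most common answer comes back. `fake_classifier` is a hypothetical stand-in for a real model call, and the 0.9 threshold is an assumption to tune per task. Exact-match agreement suits classification or priority labels; free-text drafts would need a similarity measure instead.

```python
from collections import Counter
from itertools import cycle
from typing import Callable


def repeatability(run: Callable[[str], str], prompt: str, runs: int = 10) -> float:
    """Share of runs returning the most common output (1.0 means fully stable)."""
    if runs < 1:
        raise ValueError("runs must be at least 1")
    outputs = [run(prompt) for _ in range(runs)]
    top_count = Counter(outputs).most_common(1)[0][1]
    return top_count / runs


def unstable_prompts(run: Callable[[str], str], prompts: list[str],
                     runs: int = 10, threshold: float = 0.9) -> list[str]:
    """Return the test inputs whose repeatability falls below the threshold."""
    return [p for p in prompts if repeatability(run, p, runs) < threshold]


# Hypothetical stand-in for a real model call: stable on refund tickets,
# fluctuating between two labels on everything else.
_labels = cycle(["technical", "technical", "billing"])


def fake_classifier(ticket: str) -> str:
    if "refund" in ticket.lower():
        return "billing"
    return next(_labels)


print(unstable_prompts(fake_classifier, ["I want a refund", "The app crashes on login"], runs=9))
# ['The app crashes on login']
```

Run on a schedule against a fixed set of test tickets, a check like this flags drift after a model or prompt change before it reaches users.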

Responsibility: The Ethical Boundaries of Collaboration

The AI collaboration cycle concludes with responsibility: AI is not an autonomous agent, but a tool—its contribution must be understood, contextualized, and disclosed.

Responsibility unfolds along two main dimensions. The first concerns the structure of delegation: which AI systems have been chosen, and why? What data and information are being shared with the machine? Have regulations, company policies, and security implications been appropriately considered?

The second dimension is transparency. Disclosing the use of AI is not a peripheral act but an essential part of the collaboration: it means documenting the AI’s contribution, clarifying where the system played a role and where human intervention was decisive. Only by doing so can trust be preserved, both within organizations and in relation to clients, users, or citizens.
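Both dimensions of responsibility can be captured in a simple record attached to each deliverable. The sketch below is an assumption about what such a log might contain, not a standard format: the `ContributionRecord` class and its fields are hypothetical, covering the delegation choices (system, data shared) and the transparency note (AI role versus human role).

```python
import json
from dataclasses import asdict, dataclass


@dataclass
class ContributionRecord:
    """Hypothetical log entry documenting who did what on a deliverable."""
    deliverable: str
    ai_system: str           # which system was chosen (delegation structure)
    ai_role: str             # what the system produced
    human_role: str          # where human intervention was decisive
    data_shared: list[str]   # information sent to the system, for compliance review
    approved_by: str         # the person accountable for the final output


record = ContributionRecord(
    deliverable="Product page, Italian version",
    ai_system="general-purpose LLM (model name recorded per project)",
    ai_role="first-draft translation",
    human_role="terminology check and final rewrite of the headline",
    data_shared=["source text", "style guide"],
    approved_by="localization manager",
)
print(json.dumps(asdict(record), indent=2))
```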

Applying the Cycle of Deliberate Collaboration with AI to Localization

To understand how the Cycle of Deliberate Collaboration with AI can be applied to a real case, let us take the example of text translation for a large company.

Every translation project begins with a preparation step. The company’s localization team analyzes the content to be translated and also gathers and organizes all the information needed to ensure that the translation reflects the brand’s voice (style guides, terminology glossaries, etc.). Without this preliminary step, there is a risk of producing texts that are linguistically correct but inconsistent with the brand’s identity.

Once the project is set up, the content and instructions are usually handed over to a language service provider (LSP), an agency that coordinates a network of freelance translators. The LSP is responsible for selecting the most suitable professionals, contracting them, compensating them, and supervising their work.

The translator works with tools that simplify and optimize the process. Today, these tools almost always provide a machine pre-translation that must be corrected or validated. In projects that require the highest level of accuracy, a further review is added: a second human translator rereads the text to ensure final quality (because, as the saying goes, four eyes are better than two).

In summary, the process is structured as follows:

Project preparation – handled by the company’s internal team

Assignment to the most suitable translator – managed by the LSP

Translation – carried out by the translator with the support of the LSP

Revision – performed by the translator and supervised by the LSP

Final check and delivery – the responsibility of the LSP

The role of the LSP comes at a significant cost, which can amount to as much as 70% of the fee paid by the end client. As a result, in most cases the translator receives no more than 30% of the rate, with a direct impact on their earning margins and, in many cases, on the quality of the work they are able to deliver.
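To make the percentages concrete, here is the arithmetic for a hypothetical project in which the client pays 2,000 (in any currency) and the LSP retains the 70% upper bound mentioned above. The fee is an invented figure for illustration only.

```python
def translator_share(client_fee: float, lsp_share: float) -> float:
    """Part of the client's fee left for the translator after the LSP's share."""
    if not 0.0 <= lsp_share <= 1.0:
        raise ValueError("lsp_share must be between 0 and 1")
    return client_fee * (1.0 - lsp_share)


# Hypothetical project fee; 0.70 is the upper bound cited in the text.
print(round(translator_share(2000, 0.70), 2))  # 600.0
```

In other words, at that upper bound the translator receives 600 out of every 2,000 the client spends.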

Imagine stepping into the role of a company’s localization manager, tasked with ensuring that content is translated into multiple languages while making the most of the available budget. In this context, generative artificial intelligence provides several opportunities to streamline the process and to allocate localization resources more strategically.

Project Preparation

At this stage, the main goal is to automate project setup. Since the subsequent phases of translation and revision may be handled by AI, it is essential to ensure that instructions and supporting materials are provided in a format optimized for use by an LLM.

Within an AI-assisted workflow, we can envision the creation of several specialized agents:

an agent to analyze the content to be translated and retrieve from the company’s knowledge base all the materials needed to provide adequate context (this could also be achieved with RAG, Retrieval-Augmented Generation, using a knowledge graph to enhance the search);

an agent to automatically select previously translated and human-approved content of the same type, creating a dataset of examples aligned with the required style;

an agent to identify a subset of glossary terms to be enforced.
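The third agent’s task can be approximated with a deterministic pre-filter: keep only the glossary entries whose source term actually appears in the text, so the translation step receives a short, relevant list. This swaps in plain whole-word matching for an AI agent; an LLM-based agent could additionally catch inflected forms (plurals, verb forms) that exact matching misses. The function name and the sample glossary are hypothetical.

```python
import re


def glossary_subset(source_text: str, glossary: dict[str, str]) -> dict[str, str]:
    """Keep glossary entries whose source term occurs in the text (whole words, any case)."""
    selected = {}
    for term, translation in glossary.items():
        pattern = r"\b" + re.escape(term) + r"\b"
        if re.search(pattern, source_text, flags=re.IGNORECASE):
            selected[term] = translation
    return selected


glossary = {  # hypothetical English -> Italian glossary
    "dashboard": "dashboard",
    "workspace": "area di lavoro",
    "billing plan": "piano di fatturazione",
}
text = "Open your Workspace to change the billing plan."
print(glossary_subset(text, glossary))
# {'workspace': 'area di lavoro', 'billing plan': 'piano di fatturazione'}
```

A human project manager can then review this short list instead of the full glossary.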

All AI-delegated activities should be supervised by a human project manager, who validates the accuracy of the generated instructions and, if needed, reviews the supporting materials.

Assignment to the most suitable translator

For the localization team, this is the stage where the use of AI has little value, for two main reasons:

many companies rely on one or more LSPs to manage their network of translators. This means they do not have to handle it directly, although it significantly increases the overall cost of translation;

when companies choose to manage translators internally, they typically work with a stable group of professionals already familiar with the company, its products, and its communication style. As a result, there is no need to constantly recruit new resources.

In both cases, no sophisticated tools are required: it is sufficient to manage one or two LSPs or directly coordinate a few dozen trusted translators. In other words, there is nothing here worth delegating to AI.

Translation and Revision

The use of artificial intelligence for translation is not new: it has been employed for years with increasingly effective results. Today, the most common practice is to limit human involvement to the review stage, assuming that AI can already produce translations accurate enough to require only minor corrections (a practice known as machine translation post-editing, or MTPE).

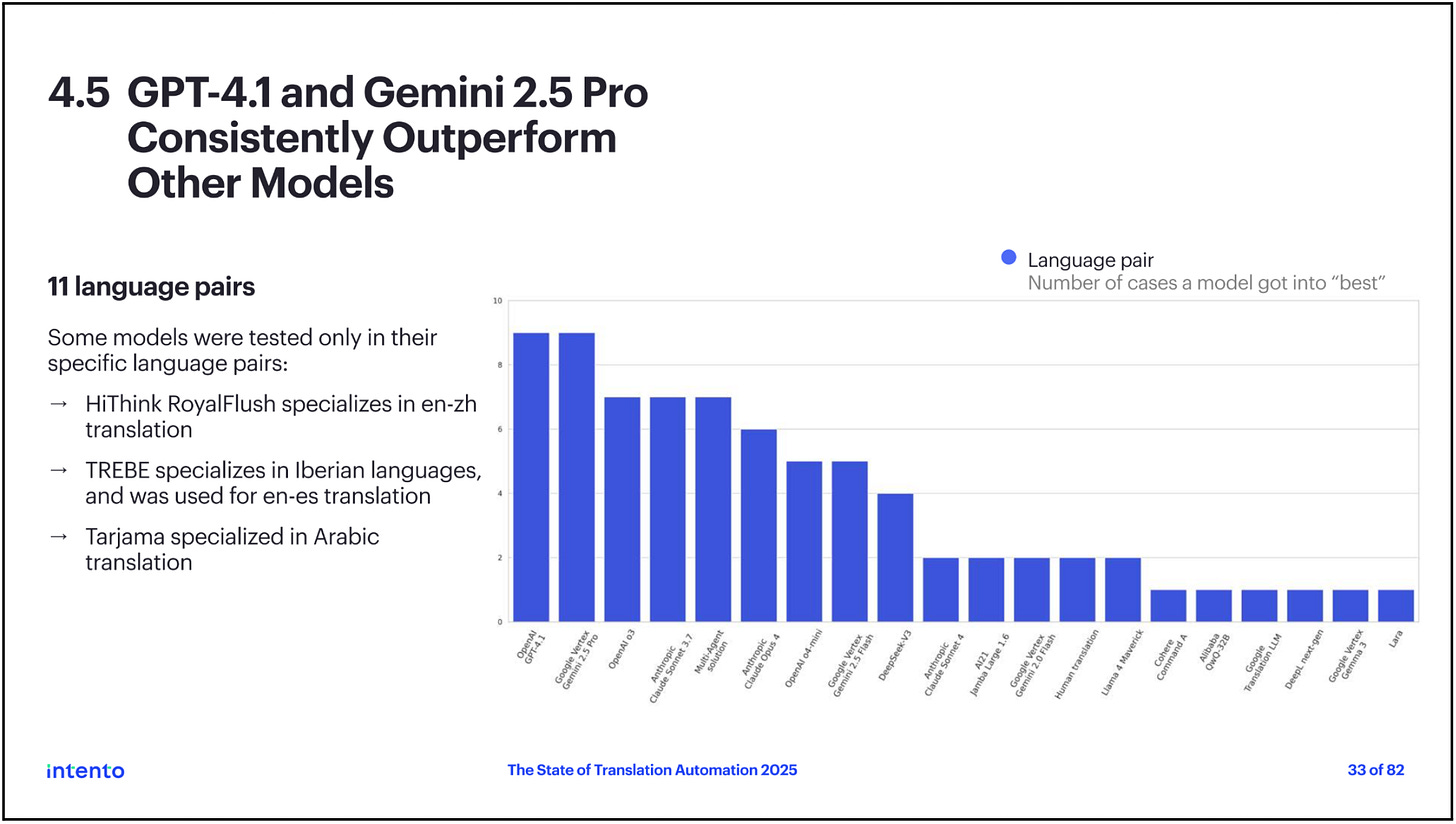

With the emergence of large language models, the industry began to ask whether their intrinsic linguistic capabilities could enable them to outperform traditional systems. The short answer appears to be yes. According to a very recent report by Inten.to (The State of Translation Automation 2025), across all benchmarks the models developed by OpenAI and Anthropic outperform both engines based on the older Neural Machine Translation (NMT) technology and specialized LLMs such as Translated’s Lara and DeepL next-gen.

In this context, the localization manager of a large company should move beyond vendors promising “magic solutions” (as virtually every platform and LSP does today) and instead recognize that adopting next-generation LLMs opens up an entirely different path. These models are not only far more flexible than systems designed solely for translation, but they also make it possible to bypass traditional translation management systems (TMS) and LSPs. The result is greater control, reduced dependency on external providers, and the ability to adapt more quickly in an industry where innovation advances at lightning speed.

The translation process, however, needs to be rethought. A full discussion of this topic goes beyond the scope of this article, so I will limit myself to a couple of examples, starting from the observation that the linguistic flexibility of an LLM allows it to process instructions and imitate style with considerable effectiveness. In the translation phase, we could therefore imagine several AI agents working together:

a first agent that takes as input the materials generated during the project preparation phase and uses them as context to produce a draft translation;

a second agent that performs purely linguistic quality checks, applying a traditional evaluation model such as MQM (Multidimensional Quality Metrics, the industry standard framework for assessing translation errors and quality dimensions), or a new framework designed specifically for LLMs;

a third agent that ensures compliance with company rules and regulations in the target market;

and so forth.
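A chain of agents like the one listed above can be sketched as functions that each receive and return the same job object, with a final MQM-style gate deciding whether the text ships or goes to a human linguist. Everything here is an assumption for illustration: the agents are stubs standing in for LLM calls, and the severity weights (1, 5 and 25 for minor, major and critical errors, a common MQM-style choice that each team sets for itself) plus the threshold of 10 penalty points per 1,000 words simplify the full MQM scoring model.

```python
from dataclasses import dataclass, field
from typing import Callable

# Assumed severity weights; MQM-based scoring lets teams choose their own.
SEVERITY_WEIGHTS = {"minor": 1, "major": 5, "critical": 25}


@dataclass
class Job:
    source: str
    context: dict                               # materials from project preparation
    draft: str = ""
    issues: list = field(default_factory=list)  # (agent, severity, note) tuples


def penalty_per_1000_words(job: Job) -> float:
    """Weighted error points normalized by source length."""
    words = max(len(job.source.split()), 1)
    points = sum(SEVERITY_WEIGHTS[severity] for _, severity, _ in job.issues)
    return points * 1000 / words


def draft_agent(job: Job) -> Job:
    # Stub for an LLM call that would use job.context (style guide, glossary, examples).
    job.draft = f"[it-IT draft] {job.source}"
    return job


def compliance_agent(job: Job) -> Job:
    # Stub rule check: flag terms that the target market's rules forbid.
    for term in job.context.get("forbidden_terms", []):
        if term.lower() in job.draft.lower():
            job.issues.append(("compliance", "critical", f"forbidden term: {term}"))
    return job


def run_pipeline(job: Job, agents: list[Callable[[Job], Job]],
                 max_penalty: float = 10.0) -> str:
    for agent in agents:
        job = agent(job)
    # Route to a human linguist when accumulated errors exceed the threshold.
    return "deliver" if penalty_per_1000_words(job) <= max_penalty else "human review"


agents = [draft_agent, compliance_agent]  # a linguistic QA agent would slot in between
rules = {"forbidden_terms": ["guaranteed"]}
print(run_pipeline(Job("Try our new plan today.", rules), agents))               # deliver
print(run_pipeline(Job("Guaranteed savings with our new plan.", rules), agents))  # human review
```

Because every agent shares one signature, adding a linguistic QA agent that appends MQM-annotated issues means inserting one more function into the list.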

It is clear that the role of the translator is bound to evolve, and companies will need linguists capable of instructing and supervising artificial intelligence. They will face a choice: either manage this activity in-house or delegate it to an external provider who, in turn, will oversee the AI delegation process while keeping it as a “black box.” But is such opacity desirable if localization is a strategic asset of the company and one of the key drivers of competition in the market?

Final Check and Delivery

If you have followed the reasoning so far, you will also see that in this phase it is possible to introduce additional agents responsible for carrying out further quality checks or adapting the output to the required final format.
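One example of such a final check is verifying that placeholders survive translation, since a translated variable name breaks the string when the software fills it in. This is a rule-based sketch that could run alongside AI agents rather than an AI agent itself; the placeholder styles it recognizes (`{name}` and printf-style `%s` / `%d`) are assumptions about the content format.

```python
import re
from collections import Counter

# Assumed placeholder styles: {name} and printf-style %s / %d.
PLACEHOLDER = re.compile(r"\{[A-Za-z_][A-Za-z0-9_]*\}|%[sd]")


def placeholder_mismatches(source: str, target: str) -> list[str]:
    """List placeholders missing from, or added to, the translation."""
    src = Counter(PLACEHOLDER.findall(source))
    tgt = Counter(PLACEHOLDER.findall(target))
    missing = src - tgt   # Counter subtraction keeps only positive counts
    extra = tgt - src
    return ([f"missing {p}" for p in sorted(missing.elements())]
            + [f"extra {p}" for p in sorted(extra.elements())])


print(placeholder_mismatches("Hello {name}, you have %d new messages.",
                             "Ciao {nome}, hai %d nuovi messaggi."))
# ['missing {name}', 'extra {nome}']
```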

***

Ultimately, the Cycle of Deliberate Collaboration with AI allows us to move beyond a purely mechanical approach, where artificial intelligence is used simply to eliminate translators and cut costs, toward a scenario where AI has the potential to enhance the entire localization process.

The same reasoning can be applied to any industry and any business function.

Curated Curiosity

How Americans View AI and Its Impact on People and Society

In the United States, the adoption of artificial intelligence is met with a clear boundary, defined mainly by the context in which it is applied. According to a survey by the Pew Research Center, the majority of citizens support the use of AI in areas where technical or analytical tasks prevail: 74% approve of it for weather forecasting, 70% for detecting fraud in public assistance, 66% for speeding up drug development, and 61% for helping identify criminal suspects.

In these domains, technology is seen as an ally that enhances operational efficiency without challenging the human role in decision-making. The perspective shifts significantly when AI enters spheres governed by personal values or human relationships: 66% of respondents reject the use of AI to assess romantic relationships, and 73% rule it out entirely in matters of faith or spirituality. In such contexts, technological mediation is perceived as an intrusion that undermines the authenticity of the human experience.

Pew Research Center, How Americans View AI and Its Impact on People and Society

Two Books, One Strategic Ecosystem

In a three-year update, Tiago Forte shares the overall results of his publishing efforts: Building a Second Brain and The PARA Method together have generated a consistent revenue stream and played a key role in driving his broader ecosystem of products and customers.

The most interesting part of his analysis is the comparison between traditional publishing and self-publishing. Forte explores how, with the same sales numbers, self-publishing could have yielded significantly higher royalties, albeit at the expense of assuming additional marketing, distribution, and risk.

Tiago Forte, 3-Year Update: A Financial Analysis of My Book’s Unit Economics