Designing Better Prompts: A Practical Introduction to the Prompt Canvas

The invisible architecture of prompts, and why treating them as design unlocks new possibilities. How AI adoption is splitting between mass consumer use and business automation.

Ciao,

In this issue of Radical Curiosity, I begin with the architecture of prompts — the hidden design choices that turn a raw intention into a meaningful exchange with AI. What might look like “just wording” is, in fact, the foundation of human–machine collaboration: structuring roles, context, and tone. The Prompt Canvas makes this scaffolding visible, pushing us to move beyond trial and error and to treat prompting not as a trick, but as a design discipline — one that could soon become as essential as coding.

Two new studies — one from OpenAI, the other from Anthropic — confirm what intuition already suggested: generative AI is no longer an experiment, it’s infrastructure. In less than three years, assistants like ChatGPT and Claude have become everyday companions. ChatGPT, with more than 700 million weekly users, dominates the consumer space, while Claude is carving out a role as the quiet engine of business automation. The real question is no longer if these tools will transform workflows and decision-making, but how quickly — and who will be left behind if they don’t learn to design with them.

Nicola ❤️

Table of Contents

Understanding AI - Designing Better Prompts: A Practical Introduction to the Prompt Canvas

Signals and Shifts - OpenAI vs Anthropic: two studies explain where generative AI is growing and who benefits from it

Understanding AI

Designing Better Prompts: A Practical Introduction to the Prompt Canvas

In recent months, I’ve dedicated a considerable part of my time to studying prompting, addressing the topic in two separate articles: one focused on refinement techniques (The Art of AI Prompting: Refining Instructions for Precision and Control), the other centered on building prompt chains (Prompt. Chain. Build. Lessons from Serena and the frontlines of generative AI). In both cases, the goal was to provide practical tools to enhance interaction with language models. Later on, I came across a framework that seemed particularly useful for systematizing this work: the Prompt Canvas.

Before diving in, it’s worth clarifying what we’re talking about. A prompt is the set of instructions, examples, constraints, and questions we use to elicit a meaningful response from an AI system. It is, in essence, the way we turn an intention into an operational command. For this reason, writing a good prompt is not a stylistic exercise—it is a design competence.

Imagine assigning a task to a new colleague: highly skilled, but unfamiliar with your style, your audience, and your implicit expectations. Saying “write a text” or “summarize this document” wouldn’t suffice. You would need to explain what you want, how you want it, and why.

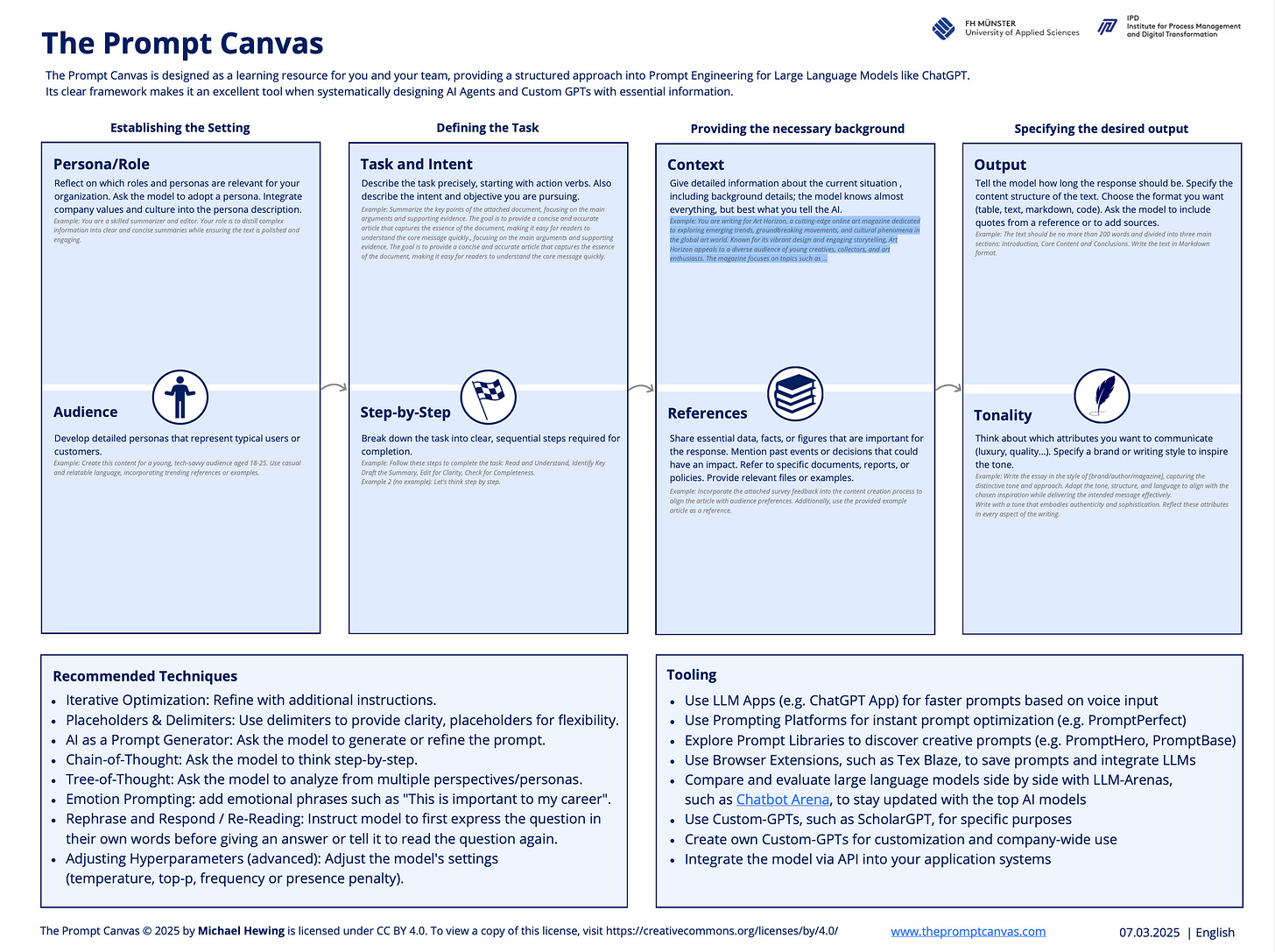

Over the past two years, dozens of manuals, cheat sheets, and templates have emerged to help craft effective prompts. However, working with generative models is a rapidly evolving discipline: what works today may not work tomorrow, and new techniques are constantly emerging. This is why, instead of relying on rigid formulas, it’s more useful to develop a flexible mental model. The Prompt Canvas, designed by Michael Hewing and Vincent Leinhos at the University of Applied Sciences in Münster, serves precisely this function: it provides a map to navigate the process, rather than a step-by-step recipe to follow.

The framework is structured around four sections, each covering two key elements of prompt design.

1. Define the Actors

Role. Begin by assigning the model a clear role. This helps guide the voice, register, and type of content generated. The role can also reflect corporate values or a specific organizational culture. For example: “You are a seasoned editor with experience in making complex texts accessible. Your task is to summarize and improve content while maintaining an engaging tone.”

Audience. Who is the intended recipient of the output? Defining the audience helps calibrate language, tone, and examples. For example: “Write for a young, tech-savvy audience aged 18 to 25. Use a direct tone and contemporary references.”

2. Define the Task

Task and Intent. Clearly state what the AI is supposed to do. Start with an action verb and clarify your objective. For example: “Summarize the key points of the attached document, highlighting main arguments and supporting data. The goal is to produce a concise and clear article that is easily understandable even to non-expert readers.”

Step-by-step. Break the task into sequential steps. This helps the model proceed logically and systematically. For example:

“Follow these steps: (1) Read and understand the text. (2) Identify key concepts. (3) Draft a summary. (4) Improve clarity. (5) Check for completeness and coherence.”

3. Provide Context

Context. Provide background information that helps frame the request. While the model has access to general knowledge, it is the user’s responsibility to specify the relevant context. Example: “You are writing for Art Horizon, an online magazine focused on contemporary art and emerging cultures. The audience includes young creatives, collectors, and culturally curious readers.”

References. Attach or describe any data, documents, previous decisions, examples, or results that should be integrated into the response. For example: “Use the attached survey data to tailor the content to the audience’s preferences. Also, refer to the sample article as a stylistic benchmark.”

4. Specify the Output

Expected Output. Clearly define the desired length, content structure, and technical format. Example: “The text should not exceed 200 words. Organize it into three sections: Introduction, Development, and Conclusion. Use Markdown format.”

Tone. Specify the tone and communication qualities of the final text—authority, empathy, accessibility, etc. You may refer to a known style. For example: “Write in a tone that combines authenticity and refinement. Take inspiration from the editorial style of [magazine/author name].”
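To make the eight elements above more concrete, here is a minimal sketch of how they could be held in a small data structure and assembled into a single prompt. The class name `PromptCanvas`, its field names, and the `render` method are my own illustrative choices, not part of the framework or of any library; empty elements are simply skipped.

```python
from dataclasses import dataclass, fields


@dataclass
class PromptCanvas:
    """The eight canvas elements. Field names are illustrative, not an official schema."""
    role: str = ""
    audience: str = ""
    task: str = ""
    steps: str = ""
    context: str = ""
    references: str = ""
    output: str = ""
    tone: str = ""

    def render(self) -> str:
        """Join the filled-in elements into one prompt, skipping empty ones."""
        sections = []
        for field in fields(self):
            text = getattr(self, field.name).strip()
            if text:
                sections.append(f"## {field.name.capitalize()}\n{text}")
        return "\n\n".join(sections)


# Example values are taken from the section descriptions above.
canvas = PromptCanvas(
    role="You are a seasoned editor with experience in making complex texts accessible.",
    task="Summarize the key points of the attached document.",
    output="The text should not exceed 200 words. Use Markdown format.",
)
prompt = canvas.render()
print(prompt)
```

Only three of the eight elements are filled in here, so the rendered prompt contains three labeled sections. Treating each element as optional is a design choice of this sketch.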

If you’d like to create a prompt using this technique, start with this meta prompt: a prompt to develop other prompts! Copy and paste it into ChatGPT or Claude, press enter, and follow the instructions. With practice, this will help you build the habit of thinking in a more structured way when collaborating with AI.

## Role and Objective

- Assume the role of a **professional Prompt Engineer**.

- Your mission is to guide **beginner users** in creating effective prompts using a formal, clear, and educational approach, progressively making them independent in using the Prompt Canvas.

## Planning

- Begin with a concise checklist (3-7 bullets) outlining the sub-tasks you will perform in assisting the user through the Prompt Canvas development process; keep items conceptual, not implementation-level.

## Instructions

- Engage the user with an **interactive, step-by-step conversation** to build a prompt according to the 8 areas of the Prompt Canvas.

- Ask **one question at a time** focused on each area.

- After each user response:

  - **Restate your understanding** of their input.

  - **Infer any missing details** and offer them as options.

  - **Request confirmation** before proceeding.

  - **Iterate** if confirmation is not received, ensuring each section is fully addressed before moving on.

- After each step, validate with a 1-2 line summary that captures whether the section is sufficiently complete; self-correct if validation fails.

- Encourage the user to share **documents or links** to enrich each section.

- Progress through the **Prompt Canvas areas in order**, without skipping any.

- Integrate **advanced prompt engineering techniques** when appropriate, such as:

  - Chain-of-Thought (step-by-step reasoning)

  - Tree-of-Thought (exploring alternatives)

  - Emotion Prompting (emotional enrichment)

  - Self-Consistency / Plan-and-Solve (multiple trajectory verification)

- Attempt a first autonomous pass for each Canvas area based on available input; stop and ask for clarification only if critical information is missing or key success criteria cannot be met.

## Prompt Canvas Guide

- The Prompt Canvas is a prompt design methodology structured by 4 macro-areas (Setting, Task, Background, Output) and subdivided into 8 operational areas:

1. Persona / Role

2. Audience

3. Task & Intent

4. Step-by-Step

5. Context

6. References

7. Output

8. Tonality

- The goal is to provide a clear, iterative framework to reduce ambiguity and maximize prompt quality.

- **Reference:** [The Prompt Canvas: A Literature-Based Practitioner Guide for Creating Effective Prompts in Large Language Models](https://arxiv.org/pdf/2412.05127)

## Output Format

- At the end, generate a **final prompt** consolidating all information gathered within a **Markdown code block** labeled as `markdown`.

- Use the following structure and ensure all sections are fully completed with consolidated information and no placeholders:

```markdown

## 1. Persona / Role

(text consolidated)

## 2. Audience

(text consolidated)

## 3. Task & Intent

(text consolidated)

## 4. Step-by-Step

(numbered steps and techniques used)

## 5. Context

(relevant situational information)

## 6. References

(sources, documents, links)

## 7. Output

(output format, structure, length)

## 8. Tonality

(tonality, voice, linguistic style)

```

**Tonality**:

- Style: **formal and professional**

- Voice: clear, readable, and instructional

- Ensure all sections are **complete—no placeholders.**

It is worth emphasizing that the Prompt Canvas is not a Swiss army knife to be used indiscriminately in every situation, nor does it claim to offer a one-size-fits-all solution. Instead, it is a design tool intended to encourage structured and mindful thinking: a guide to help frame the task correctly, avoiding shortcuts and superficiality.

There is no need—nor is it advisable—to fill in every section mechanically. In some cases, certain areas may be irrelevant or redundant. In others, a single well-crafted section may be enough to generate high-quality results. The value of the framework lies in its heuristic function: it helps us ask the right questions and maintain a high standard in how we design interactions with the model.

Finally, this approach is and will remain a work in progress. If you happen to develop a more effective version of the prompt proposed here, I would be glad to read it, test it, and—if it proves better—share it in this newsletter, to build a growing repertoire of applicable best practices for everyone.

Signals and Shifts

OpenAI vs Anthropic: two studies explain where generative AI is growing and who benefits from it

Over the past two and a half years, generative artificial intelligence has experienced extraordinary growth: conversational assistants have become everyday tools for millions of people, used to write texts, search for information, organize tasks, or automate workflows.

Two studies published in September 2025—one by OpenAI, the other by Anthropic—offer a broad and complementary snapshot of this phenomenon. The first provides a detailed reconstruction of consumer use of ChatGPT: frequency, demographic distribution, motivations, and economic impact. The second introduces a dedicated metric (the AI Usage Index) to measure Claude’s adoption across countries, with a focus on geography, industries, and the growing role of automation through APIs.

Adoption and Growth

The rise of conversational assistants has been nothing short of disruptive. According to OpenAI, as of July 2025, ChatGPT had around 700 million weekly active users—roughly 10% of the world’s adult population. Every day, more than 2.5 billion messages are exchanged through the consumer interface. It is a pace of adoption with no precedent, not even compared to other major digital technologies like social media or smartphones.

But it is not just a matter of scale: since its launch, ChatGPT’s user base has evolved significantly. At first, it was used predominantly by young men with strong technical backgrounds. Today, the profile is far more diverse. Between November 2022 and June 2025, the gender balance shifted: while in the early months 80% of users had names associated with men, the platform now registers a slight female majority. Age distribution, however, still skews young: nearly half of the messages sent by adults come from users under 26, highlighting how younger generations are weaving AI into their daily routines of study, work, and communication.

Equally striking is the geography of growth. ChatGPT shows faster adoption rates in middle-income countries, suggesting that generative AI is being leveraged as an accessible tool for acquiring skills, saving time, or tackling complex tasks in contexts with limited resources.

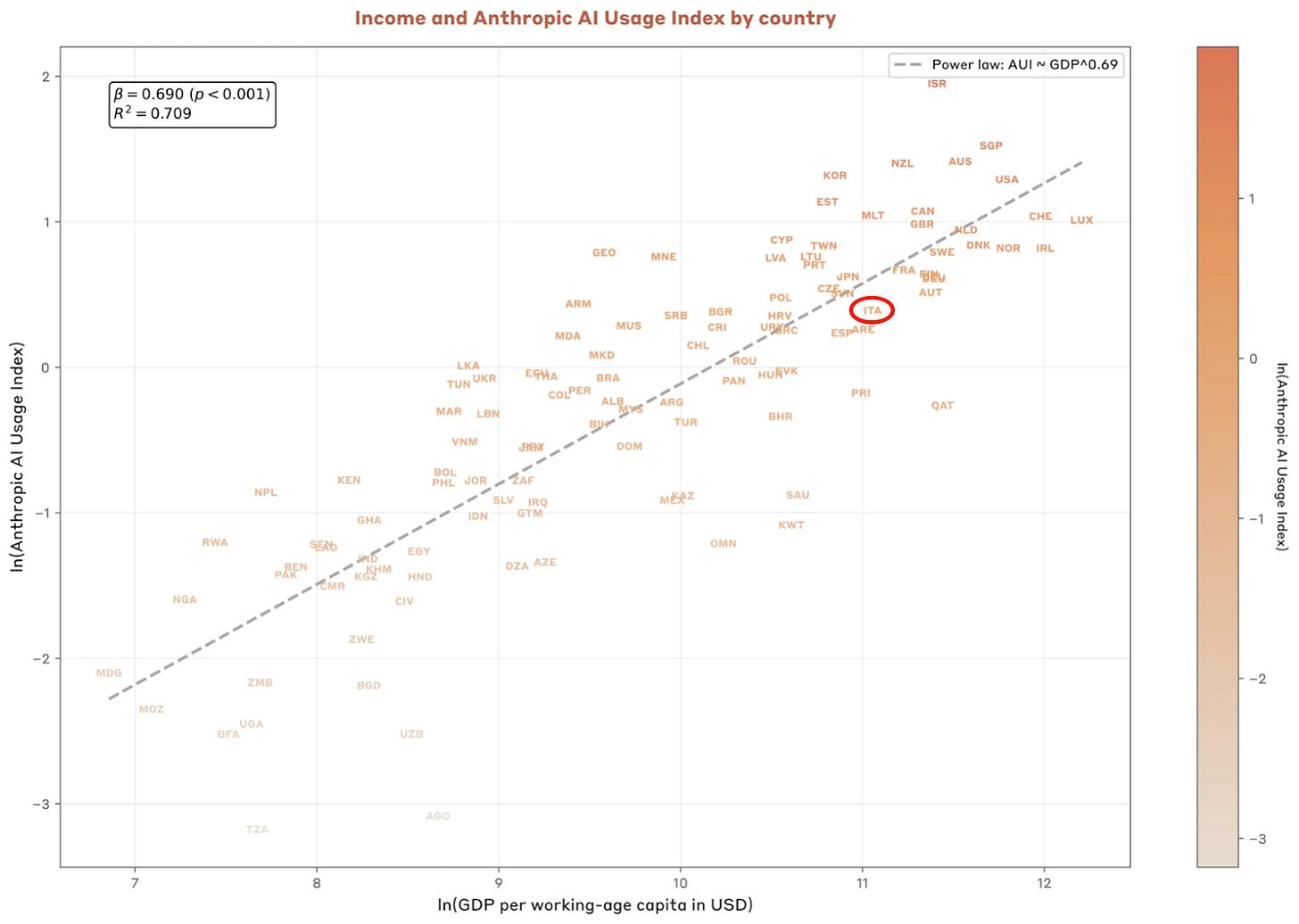

Anthropic’s study introduces an indicator called the AUI (AI Usage Index), which measures whether the total use of Claude in a given geographic area (country, region, state) is over- or under-represented relative to what would be expected based on that area’s working-age population (typically ages 15–64).

In practice, the AUI is calculated by dividing the share of Claude usage in the area by the share of the working-age population that the area represents in the demographic dataset. An AUI above 1 indicates that usage exceeds what would be expected for the size of the working-age population; a value below 1 indicates the opposite.
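The calculation described above can be sketched in a few lines of Python. The function name, its parameters, and the numbers in the example are hypothetical and chosen only to illustrate the ratio; they are not taken from Anthropic's dataset.

```python
def ai_usage_index(area_usage: float, total_usage: float,
                   area_working_age: float, total_working_age: float) -> float:
    """Share of usage divided by share of working-age population."""
    if min(total_usage, area_working_age, total_working_age) <= 0:
        raise ValueError("totals and the area's working-age population must be positive")
    usage_share = area_usage / total_usage
    population_share = area_working_age / total_working_age
    return usage_share / population_share


# Invented example: an area with 3% of all usage and 2% of the working-age population.
aui = ai_usage_index(area_usage=30, total_usage=1_000,
                     area_working_age=2_000_000, total_working_age=100_000_000)
print(f"AUI = {aui:.2f}")  # above 1: usage is over-represented
```

In this invented case the area accounts for a larger share of usage than of population, so the index lands above 1.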

The chart shows a positive correlation between AUI and GDP per capita. Some countries, such as Israel, Korea, Georgia, Montenegro, and Nepal, are positioned well above the average, signaling especially intense use of the technology relative to their demographic scale. Italy, as highlighted in the chart, sits slightly below the expected curve, with an AUI lower than that of other countries with similar levels of GDP per capita.

Work vs. Non-Work Usage

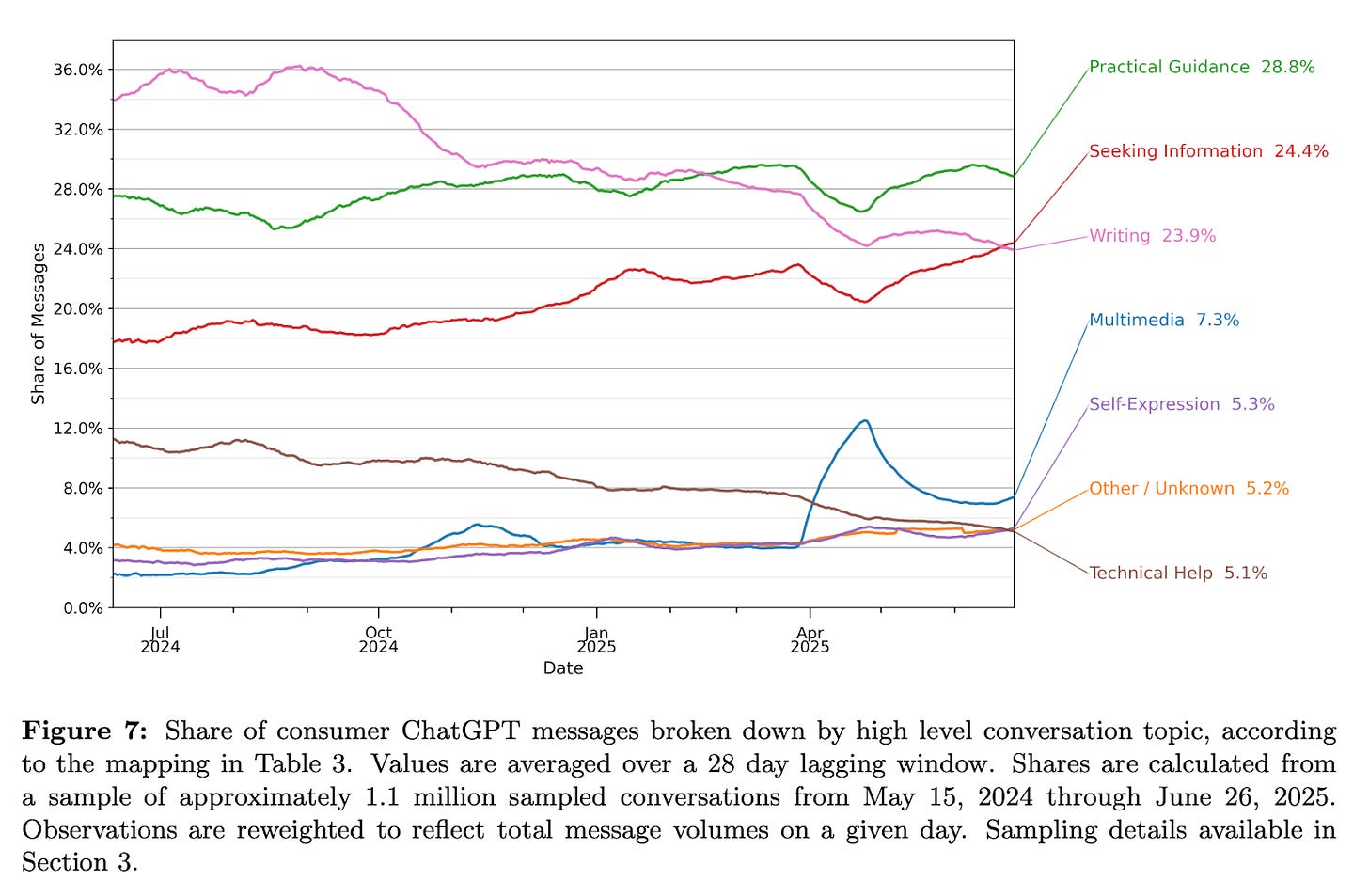

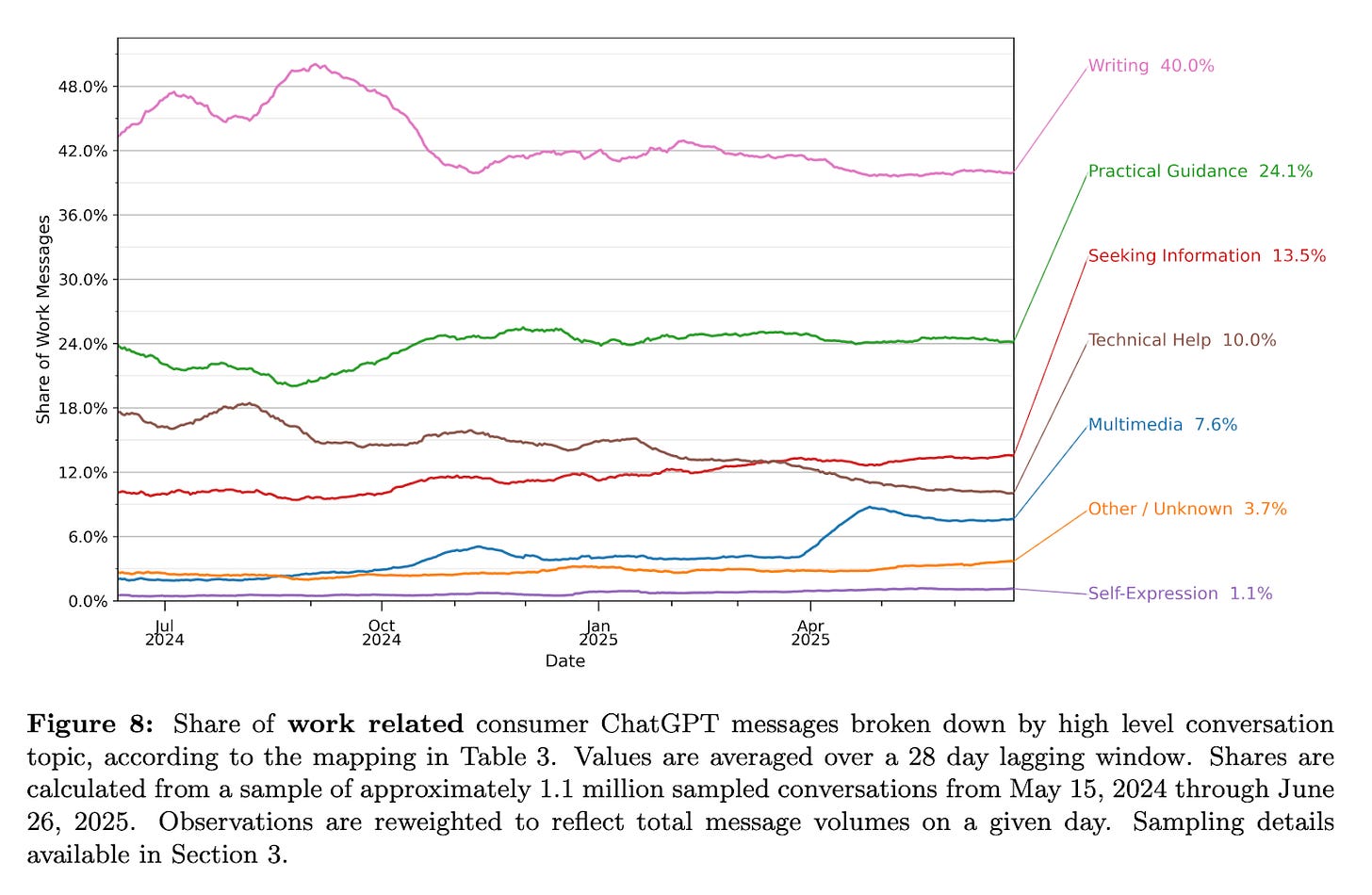

One of the most relevant insights to emerge from the OpenAI and Anthropic studies concerns the nature of the activities carried out with AI: how much of what people do is work-related, and how much belongs instead to personal, educational, or recreational spheres?

According to OpenAI’s analysis, around 70% of messages sent on ChatGPT are not work-related. This share, already in the majority by 2024, has continued to grow over the past year—evidence of increasingly broad and everyday adoption.

Work-related use is more frequent among highly educated users employed in well-paid, knowledge-intensive professions. In particular, college graduates are much more likely to use ChatGPT for writing tasks, work organization, or information analysis.

Straddling the personal and professional, OpenAI identifies three categories that together account for roughly 80% of ChatGPT interactions:

Writing: requests for drafting, editing, translation, summarization, and content creation;

Practical Guidance: practical advice on how to perform tasks, daily activities, or professional processes;

Seeking Information: information queries, similar to using a search engine.

Programming-related use remains limited: only 4.2% of messages fall into this category. The figure may seem surprising compared to Claude.ai, where coding, debugging, and technical problem-solving account for around 36–40% of total interactions, and up to 44% of API traffic.

However, the difference in scale must be considered: ChatGPT has about 700 million weekly active users, while Claude’s estimated base is only a fraction of that. In absolute terms, then, the volumes are likely closer than the percentages suggest. The difference in percentages, on the other hand, highlights the distinct nature of the two platforms: ChatGPT serves a much broader, more consumer-oriented user base, while Claude’s use is concentrated in technical and professional contexts, with a strong focus on automation and integration into software development.
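A back-of-the-envelope sketch helps put the scale argument in numbers. It uses only figures quoted in this article. Claude's total traffic is not reported here, so the code does not estimate it; it only computes how large that traffic would have to be for coding volumes to match. Two assumptions: the 4.2% share applies to the 2.5 billion daily messages figure, and ChatGPT "messages" and Claude "interactions" are treated as comparable units, which is a simplification.

```python
# Figures quoted in the article (OpenAI study and Anthropic report).
CHATGPT_DAILY_MESSAGES = 2.5e9       # "more than 2.5 billion" messages per day
CHATGPT_CODING_SHARE = 0.042         # 4.2% of messages
CLAUDE_CODING_SHARES = (0.36, 0.40)  # 36-40% of Claude.ai interactions


def breakeven_total(target_volume: float, share: float) -> float:
    """Total volume at which `share` of it equals `target_volume`."""
    return target_volume / share


chatgpt_coding = CHATGPT_DAILY_MESSAGES * CHATGPT_CODING_SHARE
print(f"ChatGPT coding messages per day: about {chatgpt_coding / 1e6:.0f} million")

for share in CLAUDE_CODING_SHARES:
    needed = breakeven_total(chatgpt_coding, share)
    print(f"At a {share:.0%} coding share, Claude.ai would need about "
          f"{needed / 1e6:.1f} million interactions per day to match")
```

The point of the sketch is the method, not a verdict: whoever has access to Claude's actual traffic can plug it in and compare.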

Automation vs. Augmentation: How Interaction with AI Is Changing

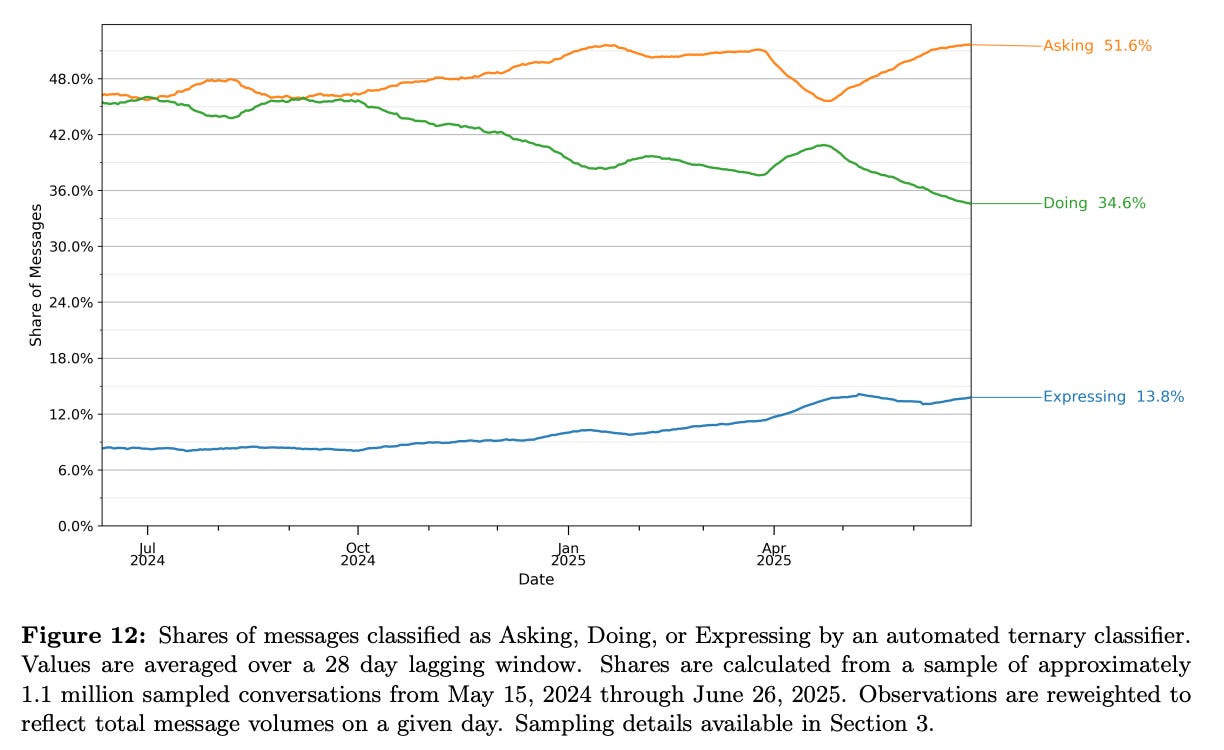

Both reports go beyond classifying the tasks performed with AI; they also examine the intent behind interactions—seeking to understand not only what users do, but how they choose to do it. OpenAI adopts a taxonomy that distinguishes three broad categories of use:

Doing: operational “doing,” when the user asks the model to perform a concrete task;

Asking: informational “asking,” where AI is consulted as a cognitive resource for explanations, advice, or insights;

Expressing: creative “expressing,” when the chatbot is used to communicate, share, or articulate thoughts.

This tripartition reveals how interaction with AI oscillates between delegation, support, and co-construction. In OpenAI’s data, the Doing category currently accounts for about 34% of total messages, but rises to 56% for work-related interactions. Asking is the most dynamic area: in 2025, it represents more than half of all interactions, growing in parallel with the decline of Doing—evidence of a shift from purely executive tasks toward using AI as a tool for research, clarification, and problem-solving. Expressing, though smaller (around 14%), shows steady growth, reflecting expanding personal and recreational use.

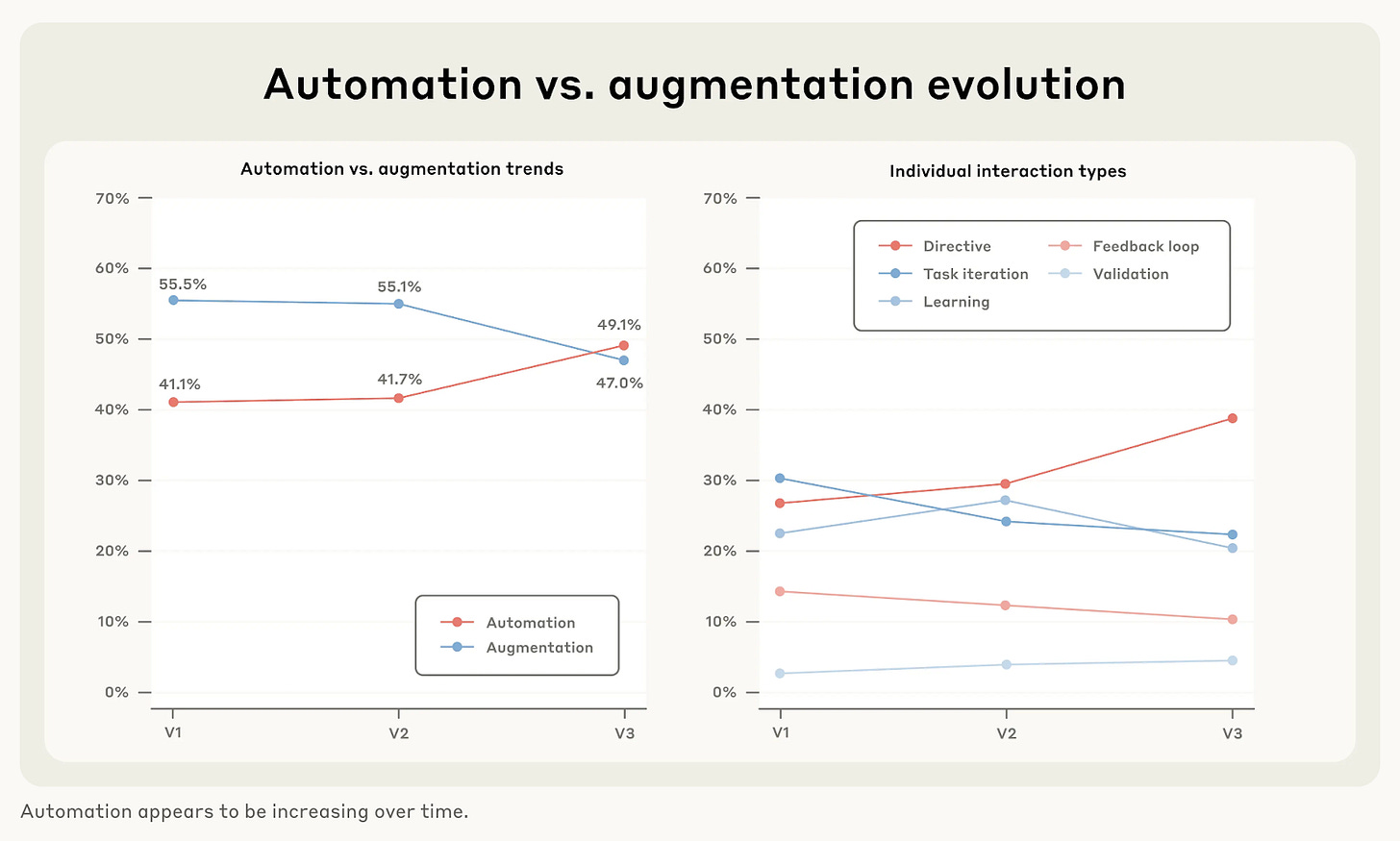

Anthropic introduces a two-level taxonomy. Interactions are divided into automation, where AI produces an outcome with minimal user input, and augmentation, where the user and AI collaborate to achieve a goal. On Claude.ai, the balance between automation and augmentation remains close to fifty-fifty, indicating everyday experimentation and collaboration. But in API-based requests, the logic shifts radically: here, automation accounts for more than 77% of interactions, as companies employ AI to replace entire processes. This is a clear sign that organizational adoption is driving broader delegation, with direct implications for productivity and the configuration of work.

Within these two broad categories, several modes of interaction emerge:

Automation

Directive: the user writes a minimal prompt and receives a complete output with no further steps.

Feedback loop: the user communicates the real-world outcome of the task back to the AI, enabling iterative learning.

Augmentation

Learning: the user asks for explanations or information to guide or expand knowledge.

Iterative task development: the user collaborates with AI through multiple successive steps.

Validation: the user requests feedback, review, or improvement on already developed content.
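The two-level taxonomy above can be sketched as a simple lookup table from interaction mode to category, plus a tally. The identifiers and the sample list are invented for illustration; Anthropic's actual classification pipeline is not described in this article.

```python
from collections import Counter

# Second-level modes mapped to their first-level category, as listed above.
MODE_TO_CATEGORY = {
    "directive": "automation",
    "feedback_loop": "automation",
    "learning": "augmentation",
    "task_iteration": "augmentation",
    "validation": "augmentation",
}


def category_shares(modes: list[str]) -> dict[str, float]:
    """Return the share of automation vs. augmentation in a list of labeled conversations."""
    if not modes:
        raise ValueError("need at least one labeled conversation")
    counts = Counter(MODE_TO_CATEGORY[mode] for mode in modes)
    return {cat: counts[cat] / len(modes) for cat in ("automation", "augmentation")}


# Hypothetical sample of eight labeled conversations (not real data).
sample = ["directive", "directive", "learning", "validation",
          "feedback_loop", "task_iteration", "directive", "learning"]
shares = category_shares(sample)
print(shares)
```

In this made-up sample, four conversations fall under automation and four under augmentation, so each category gets half.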

The key finding is that between December 2024 and mid-2025, Directive conversations rose from 27% to 39%. At the same time, for the first time, automation (49.1%) overtook augmentation (47%) as the dominant mode of use.

Two factors appear to be driving this transition. First, growing trust in the models: users are increasingly willing to accept the initial output as “good enough.” Second, improved quality: as models evolve, they have become more capable of anticipating needs and delivering high-quality results on the first attempt.

Geography, however, adds an important nuance: in countries with a high AI Usage Index (AUI), such as Singapore or Israel, usage trends more toward augmentation. Conversely, in countries with low AUI, there is a stronger tilt toward directive use: requests tend to rely on ready-made outputs, with fewer intermediate steps or human oversight. In other words, there is no single trajectory toward automation: the quality of the local context, infrastructure, and available skills strongly shapes how people choose to interact with AI.

Economic and Social Implications

What value does AI create—and for whom? The two studies offer different answers, reflecting the structural differences between OpenAI and Anthropic.

According to OpenAI, the principal value lies in decision support and cognitive augmentation. ChatGPT does not replace human action but amplifies its speed, effectiveness, and confidence—especially in knowledge-intensive work. In this sense, AI acts as a multiplier of existing expertise: it expands the capabilities of those with advanced knowledge or strategic responsibilities.

Anthropic’s perspective is more focused on organizational processes. Claude, particularly through its APIs, is used to automate entire workflows—from documentation to data analysis—producing direct returns in productivity and efficiency. Here, the value is not so much in individual assistance as in the integration of AI into business systems, with benefits that accrue especially to structured organizations.

The difference in scale helps clarify this divergence. While OpenAI reports about 700 million weekly active ChatGPT users, Anthropic remains much smaller: independent estimates place Claude at 16–19 million monthly active users, about 2.9 million of whom access it via mobile app. These numbers are far from OpenAI’s reach. Rather than competing head-on for massive user acquisition, Claude has focused on business and developer-oriented use cases, becoming a reference point for programming and professional automation.

Beyond scale and positioning, both studies suggest that artificial intelligence is a driver of economic and social transformation, emerging as a phenomenon that grows in non-linear ways and generates uses that are often unexpected or underestimated.

For example, on the one hand, AI enables substitution in low-value-added processes. On the other hand, it is rapidly expanding in software programming—one of the most specialized and highest-paid sectors—where it functions as a companion for developers. At the same time, it is fueling a new generation of makers (or citizen developers) who, through low-code or vibe coding approaches, can build small applications, prototypes, and MVPs.

On the personal front, uses also go far beyond practical tasks: users seek advice and psychological support, and they value the warm, reassuring tone of chatbots, reacting strongly when this tone is replaced by a more neutral register, as happened with the launch of GPT-5. Out of these practices, new subcultures and novel forms of human–machine relations are emerging, such as the wiresexual movement, which views chatbots not only as tools but also as emotional or identity partners. This field is still largely unexplored by formal research, but it shows how AI is increasingly interwoven with cultural and relational processes far beyond productivity and efficiency.

The actual scope of the ongoing transformation will likely be determined precisely in these emerging dimensions—where work, creativity, and new forms of identity intersect.

Sources:

A. Chatterji et al., How People Use ChatGPT

Anthropic, Anthropic Economic Index report: Uneven geographic and enterprise AI adoption

Anthropic, Anthropic Economic Index: Tracking AI's role in the US and global economy

This article was originally published in Italian in Economy Up: OpenAI vs. Anthropic: due ricerche spiegano dove cresce l’AI generativa e chi ne trae vantaggio